Do you develop, manage or use AI tools? Here’s a short AI Security brief.

It can be tricky to talk about Artificial Intelligence (AI) Security because people can interpret the term in different ways. Some assume it means the use of AI to enhance cybersecurity, or the impact of emerging AI technologies on society or national security.

These are important topics, but they don’t describe what AI Security is:

AI Security is the practice of protecting our AI systems from external threats and attacks.

AI Security is important for any organisation that develops or uses AI, yet AI security awareness is lagging. In fact, a recent report found that 77% of companies had identified breaches of their AI systems in the past year.

This brief sets out the key ideas of AI Security so you can start discussing it and asking the right questions to secure your AI.

What is AI? (The short version)

A system that can generate outputs to achieve human-defined objectives without explicit programming or human direction.

This includes chatbots, language translation, document readers, social media recommendation systems, data processing for predictions or recommendations and more. AI is everywhere, which makes responsible, safe and secure AI practices so important.

What is AI Security?

AI Security is the practice of protecting AI and systems that use AI from being attacked.

You might also see this field referred to as AI Cybersecurity, Cybersecurity for AI, AI Security Operations or Machine Learning Security Operations. AI Security is different from using AI for cybersecurity or assessing the impact of new AI technologies on security.

AI systems have unique vulnerabilities that need to be considered in addition to standard cybersecurity threats.

The stochastic nature of AI and AI-enabled systems introduces weaknesses that traditional, deterministic software systems don’t have. As a result, AI security practices have to address these new vulnerabilities while upholding existing cybersecurity measures.

What do I need to know about AI attacks?

AI systems are vulnerable to standard cybersecurity threats as well as to a category of AI-specific attacks developed within the field of Adversarial Machine Learning (AML). AML studies the offensive side of AI security and began as an academic discipline over two decades ago.
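To make the idea of an AI-specific attack concrete, here is a minimal sketch of one well-known AML evasion technique, the Fast Gradient Sign Method (FGSM), run against a toy logistic-regression classifier. The weights, bias, input and epsilon are hypothetical values chosen for illustration, not a real model. The point is that a small, bounded change to every input feature can flip the model's decision, a weakness that conventional software testing would not look for.

```python
import math

# Hypothetical toy classifier: hand-picked weights standing in for a trained model.
WEIGHTS = [2.0, -3.0, 1.5]
BIAS = -0.5


def predict_proba(x):
    """Probability the logistic-regression model assigns to class 1."""
    z = sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS
    return 1.0 / (1.0 + math.exp(-z))


def sign(v):
    """Return 1, -1 or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(x, true_label, epsilon):
    """FGSM: shift each feature by epsilon in the direction that increases
    the cross-entropy loss for the true label."""
    p = predict_proba(x)
    # For logistic regression with cross-entropy loss, dLoss/dx_i = (p - y) * w_i.
    grad = [(p - true_label) * w for w in WEIGHTS]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


x = [1.0, 0.2, 0.4]  # hypothetical input that the model assigns to class 1
x_adv = fgsm_perturb(x, true_label=1, epsilon=0.3)

print(f"original:  p(class 1) = {predict_proba(x):.3f}")
print(f"perturbed: p(class 1) = {predict_proba(x_adv):.3f}")
```

Here no feature moves by more than 0.3, yet the model's confidence in class 1 drops from roughly 0.82 to roughly 0.39, so its prediction flips. Real attacks apply the same idea to much larger models, typically using gradients computed by the model's own training framework.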

AI systems are generally compromised because either:

- Data professionals don’t have sufficient cybersecurity support. Because data professionals are often not also cybersecurity experts, they may have blind spots around important best practices such as configuring authentication correctly and being aware of software supply chain vulnerabilities.

- Cybersecurity professionals overlook AI-specific (AML) attacks, which are less well known and are still being incorporated into cybersecurity processes.
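One of the cybersecurity basics mentioned above, awareness of software supply chain vulnerabilities, applies directly to AI, because pre-trained models and datasets are often downloaded from third parties. A minimal sketch of one common control, assuming the provider publishes a SHA-256 checksum that you can pin, is to verify a model file before loading it. The file name and contents below are hypothetical placeholders. Note that a checksum only proves the file is unchanged from the pinned version, not that the pinned version itself is trustworthy.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path, chunk_size=1 << 20):
    """Stream the file in chunks so large model files need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path, expected_sha256):
    """Raise ValueError if the file's SHA-256 digest differs from the pinned value."""
    actual = sha256_of_file(path)
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"checksum mismatch for {path}: got {actual}")
    return actual


# Demo with a temporary stand-in for a downloaded model file (hypothetical content).
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"
    model_path.write_bytes(b"pretend model weights")
    pinned = hashlib.sha256(b"pretend model weights").hexdigest()

    ok_digest = verify_artifact(model_path, pinned)  # passes: digests match

    model_path.write_bytes(b"tampered weights")      # simulate a swapped file
    try:
        verify_artifact(model_path, pinned)
        tampered_detected = False
    except ValueError:
        tampered_detected = True

print("tampering detected:", tampered_detected)
```

In practice the pinned digest would come from a trusted source (for example, a release note or internal model registry) rather than being computed from the file you are about to check.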

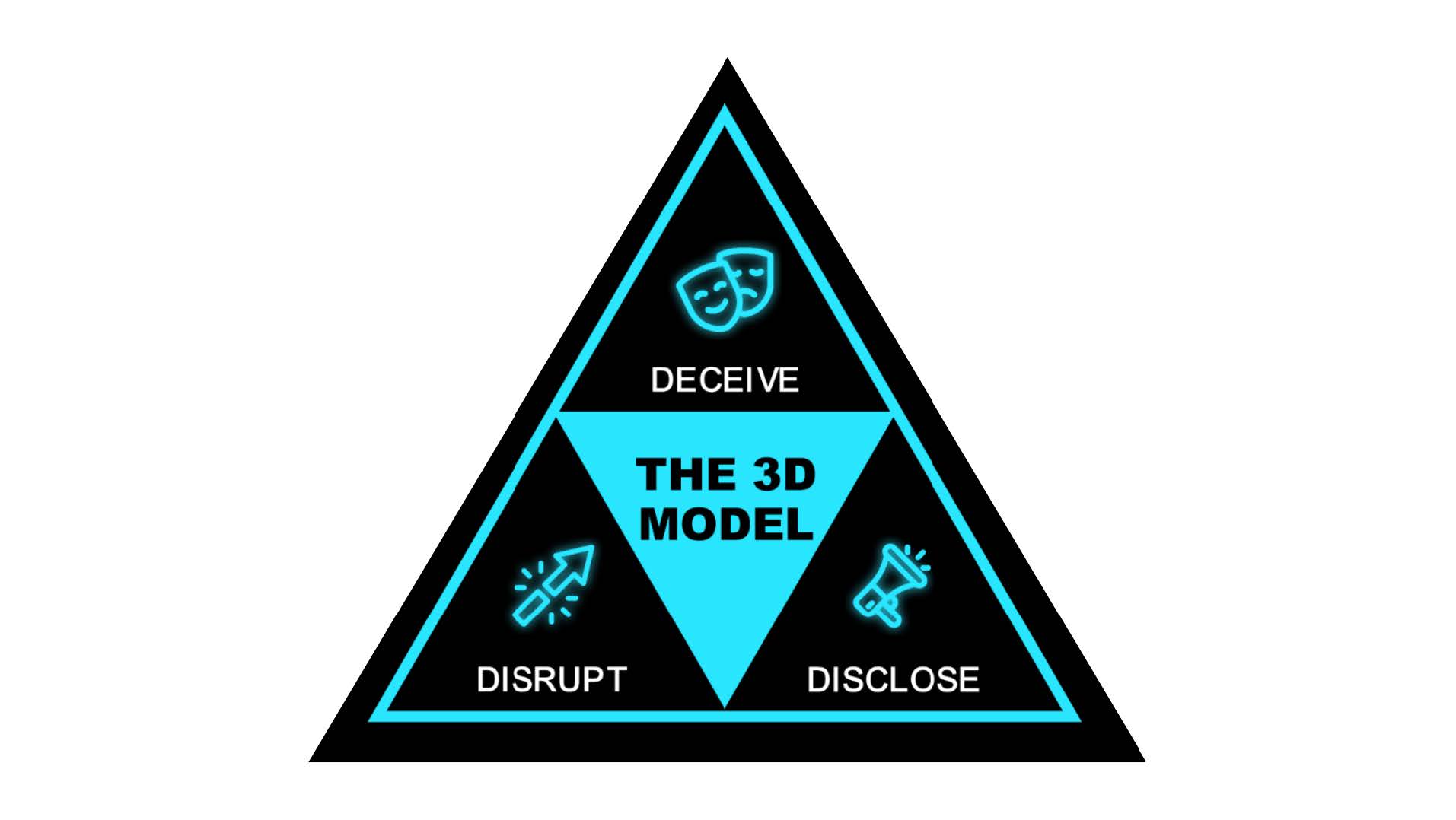

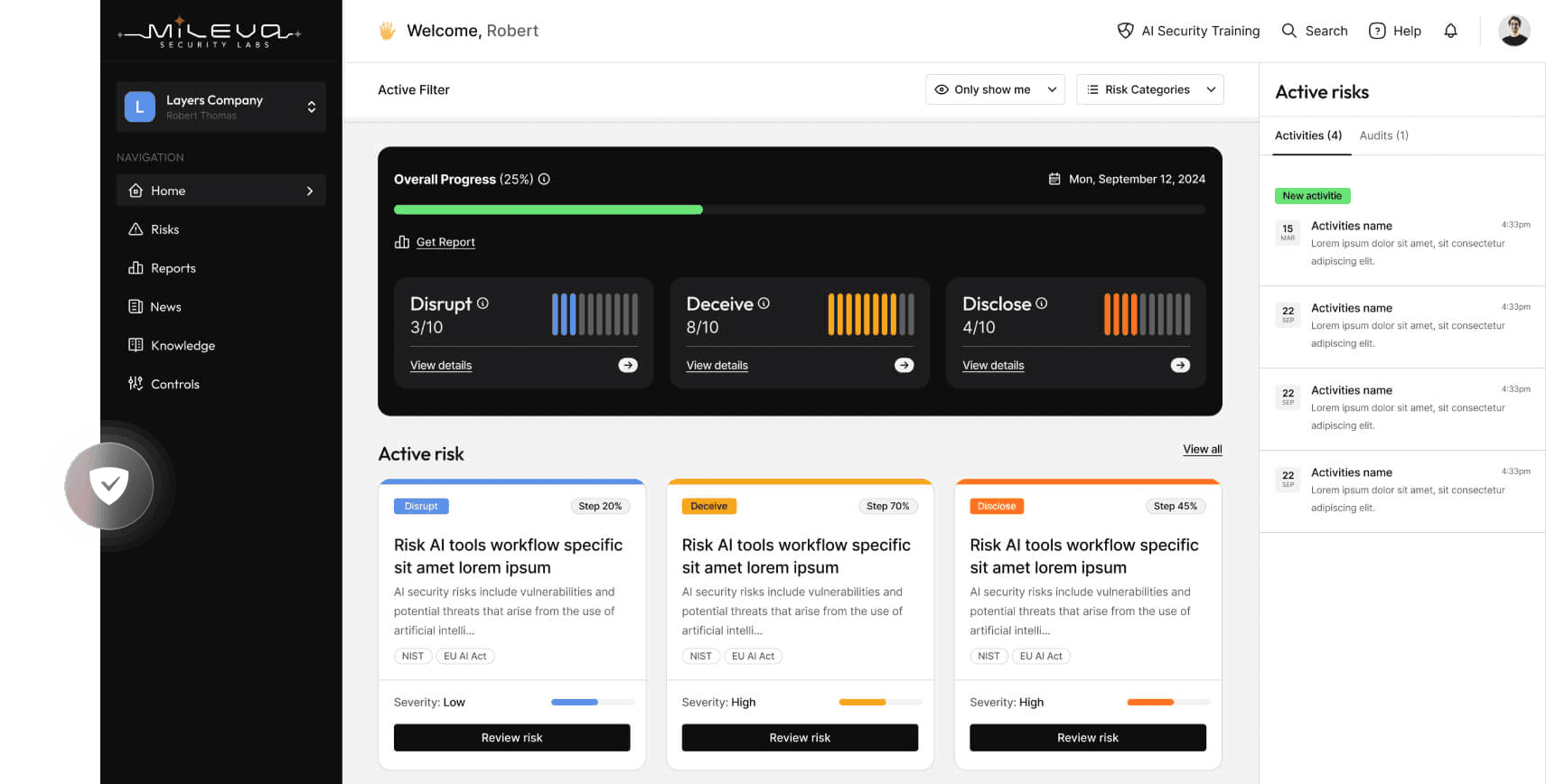

Several organisations have recently released models to communicate these AI-specific attacks; however, the existing ones are either too low-level or vendor-specific. At Mileva, we have developed the 3D model to concisely communicate the consequences of AI security attacks and help AI businesses understand and manage their AI security risk.

What are the challenges in AI Security?

Surveys show that, on average, organisations lack adequate AI security. Why is this the case? In our experience, supported by research, it comes down to three challenges.

1. Lagging awareness of AI security.

The general level of AI security literacy among practitioners is not keeping pace with the rate of AI adoption. In 2020, over 90% of company leaders said their business used AI, but only 14% of them had implemented AI security.

At Mileva, we see the importance of raising awareness of both cybersecurity among AI professionals and AI-specific weaknesses among cybersecurity professionals.

2. The fast rate of change in AI technologies and security makes it difficult for time-limited users to keep up.

The field is still maturing, and security testing and vulnerability disclosure practices have yet to be standardised. The diversity of AI technologies and applications makes it hard to develop AI Security tools and processes that are generalisable while remaining specific enough to be useful. We hope this will change as the profile of AI security grows and as legislation mandating AI security practices is passed.

In the meantime, one of the best ways to address AI security is to develop bespoke AI security functions, which is what the likes of Microsoft, NVIDIA and Google are doing. However, we recognise that not all organisations can dedicate resources to do so.

3. Interdisciplinary nature of AI Security.

AI Security includes elements of data science, computer science, AI systems and cybersecurity operations. Developing new tools or techniques requires deep understanding of, and collaboration across, all of these broad disciplines.

Our mission at Mileva is to tackle these challenges as we believe in the importance of AI security.

The current (and future) state of AI Security threats and responses

Databases of AI incidents and knowledge of vulnerabilities that target AI tooling continue to grow as more attacks are discovered and reported. The need for AI security will continue to grow as:

- The incentive to attack AI grows as AI is increasingly integrated into high-value systems.

- More methods of attacking AI systems get researched and developed.

At the same time, many different AI security tools and practices are being developed. Big tech companies have developed and published tools, taxonomies and processes, and regulatory bodies (such as the US’ National Institute of Standards and Technology, the UK’s National Cyber Security Centre, the EU’s AI Office and others) have released AI security policy products.

If I can leave you with one parting thought, I hope it’s this: AI Security is important to anyone using or developing AI. Securing AI systems begins with understanding the possibility and consequences of AI attacks. If your organisation uses or develops AI, start those important AI Security discussions: Is security a consideration throughout the design, development, deployment and operation of your AI systems? What is currently in place to reduce the risk of attacks that exploit AI weaknesses?

There is only so much we can cover in a short brief. Mileva provides advisory services and training to help executives and practitioners understand how to implement AI security solutions. Please reach out to get started on your AI Security journey, or see our free 30-minute primer on AI Security.