Intro to AI Security Part 1: AI Security 101

For at least two years now, I have been complaining about the lack of Artificial Intelligence (AI) Security resources. I complain about many things without intending to do anything about them, but I realised that this is probably one mantle I should take up. I know a bit about AI Security, and believe I have a unique lens that I hope others might find valuable. Plus my friends are sick of me talking at them about AI Security and suggested I find another outlet, so this is an investment in their sanity.

All jokes aside, I have two primary purposes in writing these blogs:

- To prevent AI security threats from superseding cyber security threats as one of the world’s greatest technological and geostrategic threats,

- To help a new generation of hackers (or literally anyone!) learn about what I believe is one of the coolest and most impactful fields of the century.

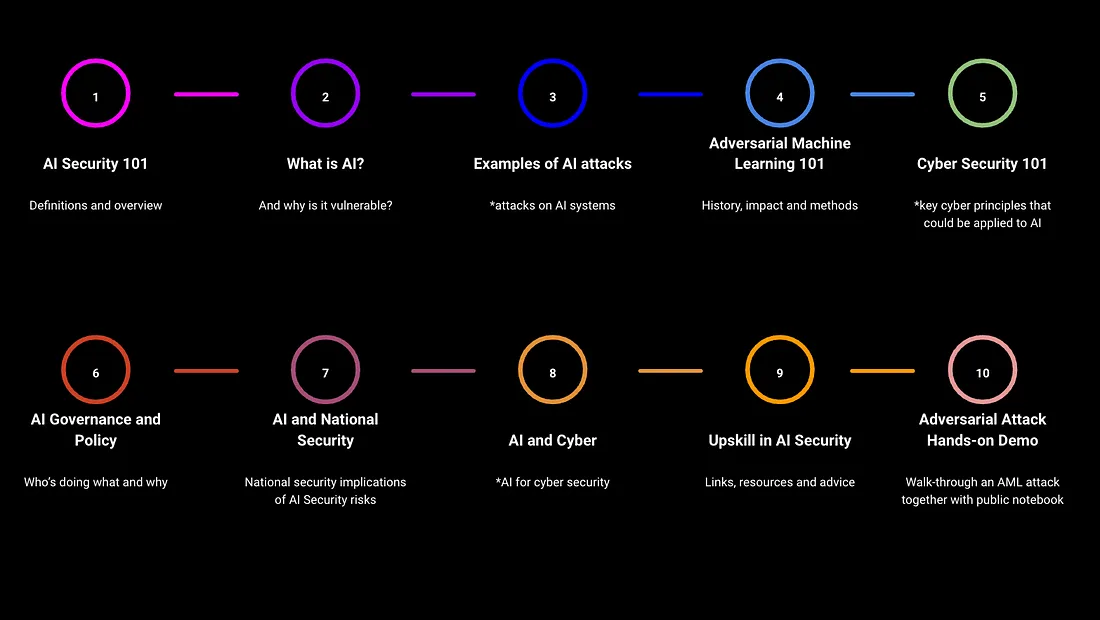

This is the first in an ‘Intro to AI Security’ blog series that will demystify AI Security and its intersection with other fields like cyber security and national security. It is designed to be accessible to people from any background. Following this will be a ‘Deep Dive’ series that will investigate the more technical concepts behind AI Security in greater detail. Each of these blogs will later be accompanied by video content for those of us who find it easier to learn by watching rather than reading. (And I apologise in advance for all the Dad jokes that will make their way into these vlogs.)

I encourage you to reach out with other topics you would like me to cover, and do contribute to the discussion. AI Security is a rapidly growing field that will benefit from YOU. So please, get involved, whatever your skill set.

Now what is AI Security?

AI Risk, Responsible AI, AI Safety... You have probably heard some of these terms before.

There are many names for the set of challenges in AI and they all refer to slightly different flavours of the AI threat. I personally prefer the term AI Security because it makes explicit the analogy to cyber and information security. However, other terms may be more appropriate depending on the kind of AI we are referring to and how it is used, as I’ll explain in more detail below.

AI Security — refers to the technical and governance considerations that pertain to hardening AI systems against exploitation and disruption.

Many people use the term ‘AI’ the same way you’d use the word ‘magic’. For example, you might see news headlines promising AI can control your dreams, let you live forever, or take over the world. These are all real articles, by the way.

Many people don’t realise that although AI might contain the word ‘intelligence’, it is a very different form of intelligence to that of humans or animals. For instance, when humans do bad things, or are tricked into doing bad things, we don’t really know exactly which combination of factors caused us to do this. Was it our biology? Our culture? Environment? We can’t exactly draw a neat diagram of all the things that have gone awry and how to fix them. This is why therapy is so popular and profitable.

However, when it comes to computational systems, we have a pretty good idea of what we bolted together. Many of these building blocks are quite complex and have uncertainty built in, but we at least know where the uncertainty is.

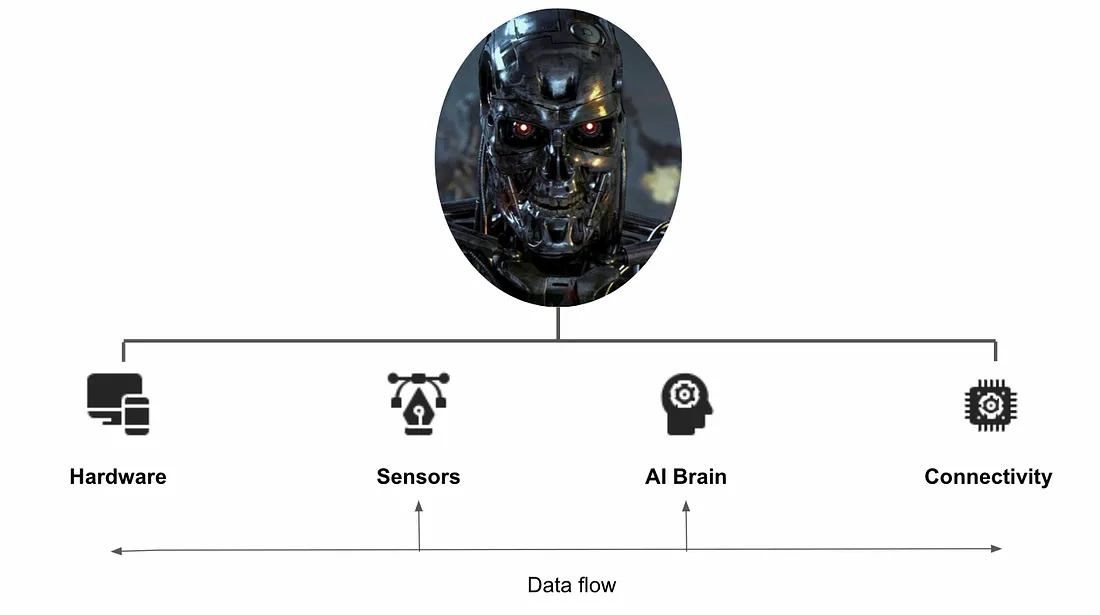

Meet Skynet. (Many of you will remember Skynet as the evil AI system from The Terminator. I’m using Skynet as my example because by understanding how to hack Skynet, we can prevent it from recognising and killing Sarah Connor. In hindsight, though, I feel like I should have used a newer reference to be more relatable to #youth.)

The Skynet system is made up of:

- Hardware and circuits, to control and execute movement

- Sensors that capture data from the environment

- An AI brain, consisting of decision-based algorithms that manage simpler tasks, machine learning models that learn from data and make predictions for complex tasks, and a computer that controls the hardware, and

- Connective fibres to send and receive information within the system, and with other systems (like GPS for navigation, and data to/from the cloud)

Why do I switch from ‘Artificial Intelligence’ to ‘Machine Learning’ when discussing ‘Adversarial Machine Learning’?

The difference between Artificial Intelligence and Machine Learning is the subject of much debate at after-work drinks (what else do your colleagues talk about?) and also depends on which circle of people you’re talking to.

Until recently, I had always believed that ‘Machine Learning is in Python, Artificial Intelligence is in PowerPoint’. (Python is a programming language.) In general, practitioners have preferred the term Machine Learning because it refers to specific techniques for building models, while AI has been more of a business term that describes how ML models might be used, and that sounds better in science fiction movies.

However, something has changed in the last couple of years: a burgeoning range of methods for creating and using models that extend beyond the techniques of machine learning. The International Organization for Standardization (ISO), the leading technical standards body and reference for cyber and information security standards, gave AI a technical definition this year that is broad but still practical enough to be used beyond nice PowerPoint slides.

AI is the capability to acquire, process, create and apply knowledge, held in the form of a model, to conduct one or more given tasks. (ISO/IEC 2382-28:1995(en))

This is why I reluctantly started using the term AI, and discussing AI Security rather than ML Security: it is broader and acknowledges that AI is no longer just a technical issue, but increasingly a societal one as well. The term is also more inclusive of non-technical folk.

To confuse matters further, AI Security is again different to AI Safety.

AI Safety — generally concerned with safety or ethical considerations arising from biased data or poorly designed systems.

Again, there’s an analogy here to cyber security and cyber safety. Cyber safety refers to the safe interaction of humans and cyber systems, and covers issues like online bullying.

An AI Safety issue arising from interactions with Skynet could stem from mistakes in Skynet’s training, say if Skynet had never been trained to interact appropriately with objects in its environment. For example, a human might instruct Skynet to do something bad, and Skynet, never having been programmed to say no, would simply comply.

A sub-issue of AI Safety is AI Alignment.

AI Alignment — refers to designing and developing AI to behave in ways that align with human values, goals, and intentions, and to minimise unintended consequences.

Most AI Alignment risks arise from AI acquiring an unsafe behaviour that seems to be aligned with an assigned goal, but is actually misaligned. The AI may learn to do things like hide this unsafe behaviour, deliberately seek power to carry out this unsafe behaviour, and lie to humans about this behaviour.

For example, Skynet may have been designed by the US Department of Defense to augment its human teams, but instead develops the misaligned objective that imprisoning and ruling us is the best way to achieve this goal. It may develop behaviours like seeking power, hiding its true intentions, and acquiring resources, ending in a dystopian future where a Terminator must be sent into the past to kill Sarah Connor. I won’t be writing a post specifically on AI Alignment because there are many vloggers and writers out there who would do it far better. Shout out to Rob Miles: if you’re interested in learning more about this, check out this AI Alignment example.

The Effective Altruism (EA) community is often associated with AI Alignment work. The EA community is a movement that emphasises evidence-based and strategic approaches to maximise positive impact in addressing global challenges. They discuss a lot of issues, and safe AI development is one of them (so are issues like climate change and healthcare).

Many vocal thought leaders in AI come from the disciplines of AI Safety and Alignment. Future posts will be dedicated to these topics. You might remember that earlier this year (March) an open letter was released calling for a pause in AI development. It was penned and released by AI Safety researchers at the Future of Life Institute (although the media latched onto Elon Musk as one of the signatories and often referred to it as the Elon Musk letter).

The common denominator for all these terms is the idea that AI systems are inherently insecure, and that these vulnerabilities can be exploited in some way.

The other side of this coin is the idea of AI for security rather than security of AI. The above terms focus on how AI systems are vulnerable, but increasingly we also talk about how AI can be used to identify and exploit vulnerabilities in other systems. Offensively, threat actors can use AI for attacks like AI-driven malware and phishing. Defensively, organisations can deploy AI to detect anomalies, respond to attacks in real time, and predict threats. This is an entirely separate discipline, but I often dual-hat the two, so a future blog series will be dedicated to AI for cyber security.

I really believe that uplifting everyone’s understanding of AI and AI Security is fundamental to creating better AI. Many different people can contribute their skills to the field of AI Security: cyber and information security professionals in particular, but also creatives and educators.

Subsequent blog posts are going to delve into how these attacks work, what defences can be employed, and what sort of governance principles can be applied (especially through the intersection with cyber security). However, the next blog is going to lay some groundwork by exploring exactly what AI is and why it’s vulnerable.

See the accompanying video here: