Intro to AI Security Part 8 — AI for Cyber Security

Now I’m finally going to touch on the other hat of ‘AI Security’ — and that is AI for cyber security, rather than security of AI.

Many people I speak to don’t quite understand how these two applications are different, and I don’t want to be ‘that person’, but in my opinion this difference is stark and significant. The previous blog posts in this series have focused on AI as an attack surface, and explored the different ways that AI systems can be disrupted, deceived or made to disclose sensitive information. Using AI for cyber security is totally different because here we are looking at cyber systems (computers and networks) as the attack surface and the potential use (or misuse) of AI systems to exploit vulnerabilities in these systems. And to clarify, AI systems are not the same as cyber systems, and if you’re not sure why, I recommend you read the previous blogs. However, I couldn’t write a blog series without touching on this topic because this is what most people think I’m talking about whenever I use ‘AI’ and ‘security’ in the same sentence. This blog is going to clarify how useful AI can be at preventing cyber attacks and how realistic a threat AI is for instigating them, discuss the kinds of conversations that are happening at an international level, and dispel some of the hype and fear raised whenever this topic comes up.

AI for cyber security (detection and prevention)

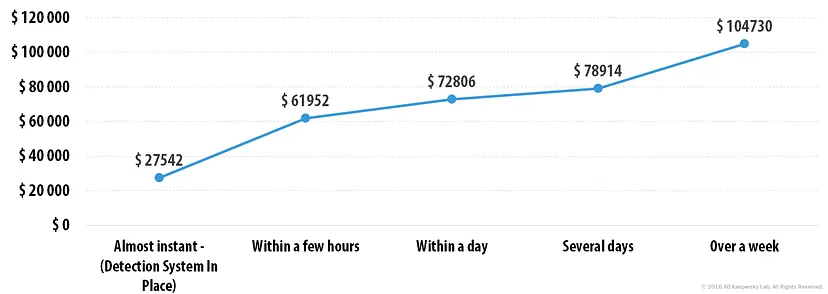

Cyber security is definitely a big problem. Attacks can cause catastrophic economic and safety impacts to all organisations (private and public) around the world. The cyber security industry that exists to prevent these kinds of attacks is worth billions. In 2022, the global market for AI-based cyber security products and services was valued at $15 billion. This is expected to grow to $135 billion by 2030.

The cyber security industry is massive and relatively mature. There are companies that offer services across the gamut of cyber services, from necessary technologies like firewalls, authentication, monitoring and anti-virus to more intensive services like penetration testing and red teaming. Then there are companies that help other organisations stay compliant by implementing risk management and controls based on standards like ISO and NIST. If a company is particularly complicated (and wealthy) they can opt for physical penetration and social engineering services. Many of the products and services offered by cyber security companies include some degree of automation. Much like any other industry, the allure of adding AI capabilities to these offerings is the promise of doing more things in less time, and for less money.

This is much easier said than done. The challenges that any other organisation has in implementing AI effectively are the same for companies that are already tech-heavy, like those offering cyber services. High tech maturity in cyber does not automatically equate to an easier time maturing their AI capability: cyber companies have legacy systems and inefficient processes too. Also, what exactly constitutes ‘AI’ has been very fuzzy (and it still is). Whenever I’m at a conference like Black Hat or CyberCon (our Australian equivalent) I make a point of visiting the stalls of those companies who claim their offerings use AI and I ask them exactly how they use it. They usually look like a deer caught in the headlights and say something about automation (which, as we know, is only one weak component of AI, and to some doesn’t even count as AI). Maybe this is unfair, because I’m talking to salespeople, but people in sales get paid a lot to promote their technologies so I don’t feel too bad. The point is, in my experience many cyber companies use cool technologies to do things, including automation and machine learning, but rarely do they use the kind of AI that most people imagine AI actually is: some sort of Skynet-style intelligence. And to what degree machine learning equals AI is still a matter of debate.

This is not to say that AI for cyber security is not possible or exciting. I am merely dispelling some of the hype around how this topic is framed. Some applications of AI technologies to cyber security that are already in use include:

- Threat Detection and Prevention: these systems can analyse massive amounts of network traffic, user behaviour, and system logs to detect anomalies and potential threats. Machine learning algorithms can learn normal patterns of behaviour and quickly identify deviations that might indicate a cyber attack. This enables early detection and proactive response to potential security breaches.

- Malware Detection: these systems can analyse the behaviour and characteristics of files to identify potentially malicious software or malware. By examining code patterns and comparing files against known malware databases we can identify new and previously unknown threats.

- Phishing and Fraud Detection: these systems can help identify phishing emails, fraudulent websites, and social engineering attacks by analysing text, metadata, sender behaviour, and other indicators. Machine learning models can learn from historical data to improve accuracy in identifying suspicious communication.

- User and Entity Behavior Analytics (UEBA): these systems can build profiles of normal behaviour for users and entities within a system. This data can even include typing patterns and mouse movements. When deviations from these profiles occur, the system can trigger alerts, helping organisations detect insider threats, compromised accounts, or unauthorised activities.

- Security Analytics: these systems can process and correlate vast amounts of security data from various sources, enabling security analysts to identify patterns and potential threats more efficiently. This helps to make quicker and more informed decisions.

- Automated Incident Response: these systems can assist in automating incident response workflows. They can prioritise alerts, suggest possible responses, and execute predefined actions to contain and mitigate threats in real time.

- Vulnerability Management: these systems can assist in identifying vulnerabilities in software and systems by analysing code and configurations. They can also prioritise vulnerabilities based on potential impact, helping organisations focus their resources on critical issues.

- Adaptive Security: these systems can adapt to evolving threats by continuously learning from new data. This adaptability is crucial in an environment where cyber threats are constantly evolving.

- Network Security: these systems can monitor network traffic in real time, detecting suspicious activities and unauthorised access attempts. They can also identify patterns associated with Distributed Denial of Service (DDoS) attacks and help mitigate them.
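To make the 'learn normal behaviour, then flag deviations' idea from the list above a bit more concrete, here is a minimal sketch in Python. It is not how any particular product works. It assumes a single made-up signal (hourly login counts for one user) and uses a simple z-score rule, where real systems combine many signals and far richer models. The function names, the data and the threshold of 3 standard deviations are all illustrative assumptions.

```python
from statistics import mean, stdev


def fit_baseline(values):
    """Learn a 'normal' profile (mean and standard deviation) from history."""
    return mean(values), stdev(values)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that sits more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the history: anything different from it is a deviation.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly login counts for one user during normal operation.
normal_logins = [4, 5, 6, 5, 4, 6, 5, 5, 4, 6]
baseline = fit_baseline(normal_logins)

for count in (5, 7, 40):
    print(count, is_anomalous(count, baseline))
```

With this made-up history, 5 and 7 logins an hour stay within the learned profile while 40 is flagged. Most of the hard work in practice is choosing what to measure and where to set the threshold, not the arithmetic.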

So clearly there are some really beneficial use cases for AI technologies like automation, machine learning and algorithms. Many of these are already being employed in some way. Is this the kind of AI the media depicts, which may escape its organisation and try to control the human race? Absolutely not. It’s important to note that while AI will definitely help lighten the workload of human workers in this space, it’s not a panacea, and those human workers will still be extremely important.

AI for cyber security (attacks)

While AI for cyber defence is often used a bit like a panic button for a workforce that’s not expanding quickly enough, AI for launching cyber attacks and incidents is used as the fear mongerer. This fear revolves around the possibility for AI systems to be designed by nation states, criminal groups, and terrorists and used to attack, threaten or steal from other nations and organisations.

There are a number of legitimate concerns here, but in my opinion most of them don’t actually relate to AI. As we know, AI encompasses a broad range of technologies that generally help accelerate existing human-based work. However, in practice it is rarely (actually never) the omnipotent, omniscient kind of intelligence that comes to mind when people hear the term ‘AI’. The likelihood that some country or criminal group is suddenly going to spin up Skynet and direct it towards your organisation is pretty close to zero.

However, threat actors do use automation to find vulnerabilities they can exploit faster (e.g. port scanning, where you automatically scan for misconfigured ports or endpoints that shouldn’t be connected to the internet at all). Criminals have already been doing this in some form for decades. Threat actors do also use tools like ChatGPT to craft better phishing emails. However, criminals have also been using tools like Google Translate to translate scam messages into the language of choice for as long as translation technologies have existed. Criminals also use Generative AI to create realistic images for scams, operations and fraud. However, they have also used Photoshop to create fake documents for as long as Photoshop has been around. In fact, technologies that support the production of realistic fake documents go back as far as humans have wanted to deceive each other (think old-timey spies creating fake papers using special papers and dyes in the 1800s). (Btw I’m sick of hearing about Generative AI. Blog post incoming on this.)

So what’s the point here? For me it’s far less about the technology and more about the environment, both in terms of technology as well as people and process. For instance, while threat actors have access to better technologies over time, the leap in technology we’re experiencing now, in my opinion, is not as dramatic as it is often made out to be. And all the issues our organisations have in implementing AI are also true of criminal organisations: they find it just as hard to get access to compute! (And they generally have to hide it.) I also believe we need to create an environment that supports creative defence. People will always be the weakest link in cyber security, and investing in the skills and training of our humans is more important now than ever. Our creativity to solve problems and invent novel solutions is also what will become our strategic advantage over both threat actors and malign AI.

The conversations around AI at the moment often draw an analogy with nuclear power, in terms of the importance of deterrence (using AI weapons) and the cold conflict we’re experiencing. However, in my opinion we still have a long way to go before it is the AI technology itself that makes this decisive difference.

AI for cyber security investment around the world

Big players in the investment of AI for cyber security include:

- The United States: The US government is investing billions of dollars in AI for cyber security. This includes the creation of the National AI Initiative, which aims to develop and deploy AI technologies to protect critical infrastructure and national security systems. The National Security Agency (NSA) also announced the creation of an AI Security Centre (this makes me very happy). Their Cybersecurity and Infrastructure Security Agency (CISA) has said that AI is “a critical tool” for protecting critical infrastructure from cyberattacks.

- The European Union: The European AI Act constitutes by far the strictest set of regulations around AI use and misuse, and it aims to harmonise AI standards across the EU and promote the use of secure and safe AI technologies.

- China: China is also a major investor in AI for cyber security. They are developing a number of AI-powered cyber security systems, and aim to be the biggest AI player by the year 2030.

Other prominent international organisations that are advocating for the use of AI for cyber security include the World Economic Forum, which has called for a global investment of $1 trillion in AI for cyber security by 2025, and the United Nations.

This year at DEF CON (2023) DARPA announced their new funding initiative: a two-year competition that will see teams compete for millions of dollars of prizes to solve cyber challenges using AI.

DEF CON, while traditionally a hacker conference focused on computers, is branching out to AI hacking. This shouldn’t be surprising since it already includes ‘Villages’ for car hacking, RF hacking and lockpicking.

Investment like this is great, and is one way we can stay ahead of the threat actors in the AI x cyber space.

My cynical rant

I know you get the idea (I’m introducing a modest dose of scepticism here), but I need to end on exactly why we don’t all need to be panicking about the application of AI to cyber security.

- AI systems can be fooled. AI systems are trained on data, and if that data is not representative of the real world, then the AI system can be fooled. They are also vulnerable to adversarial attacks, as we’ve discussed in previous blogs.

- AI systems are not perfect. AI systems are still under development, and they make mistakes. For example, an AI system may incorrectly identify a benign file as malicious if the confidence level is not appropriately configured or it hasn’t been trained on good enough data.

- AI systems are expensive. The development and deployment of AI systems can be expensive. This may limit the availability of AI-based cyber security solutions to large organisations.

- AI systems require training data. AI systems require training data to learn how to detect and respond to cyber security threats. This data can be difficult and expensive to obtain.

- AI systems are not always reliable. AI systems can be affected by factors such as hardware failures, software bugs, and network outages. This can lead to false positives and false negatives.
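The point above about confidence levels and false positives can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the scores and labels are invented, `confusion_counts` is a hypothetical helper, and a real malware classifier would be evaluated on far more data. It shows how moving the decision threshold trades false positives (benign files flagged as malicious) against false negatives (malicious files missed).

```python
def confusion_counts(scores, labels, threshold):
    """Count false positives and false negatives at a given threshold.

    A file is flagged as malicious when its score is >= threshold.
    Labels: 0 = actually benign, 1 = actually malicious.
    """
    false_pos = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
    false_neg = sum(1 for s, y in zip(scores, labels) if s < threshold and y == 1)
    return false_pos, false_neg


# Hypothetical classifier scores (closer to 1 = looks more malicious).
scores = [0.05, 0.20, 0.35, 0.55, 0.60, 0.80, 0.90, 0.95]
labels = [0, 0, 0, 0, 1, 1, 1, 1]

for threshold in (0.3, 0.5, 0.7):
    fp, fn = confusion_counts(scores, labels, threshold)
    print(f"threshold={threshold}: false positives={fp}, false negatives={fn}")
```

On this toy data a low threshold of 0.3 flags two benign files, while a high threshold of 0.7 lets one malicious file through. There is no setting that is free of mistakes, which is why human analysts still need to review what these systems flag.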

Now I’ve gotten that out of the way, the next blog is going to return to AI Security and we’ll start to close out the intro series by discussing how you can upskill in AI Security.