Artificial Intelligence (AI) technology promises a transformational productivity uplift to individuals, organisations and the economy. It is estimated to generate $13 trillion in economic activity globally by 2030 and is rapidly being adopted across many sectors. In Australia, where we’re writing this from, an astounding 90% of small and medium enterprises will be using AI by 2026!

This rapid adoption presents a new risk: that we overlook vulnerabilities in our AI systems, leaving our organisations exposed to cyber attacks.

In this article, we want to share three common assumptions organisations make about AI security, the practice of protecting AI systems from attacks. These assumptions prevent organisations from properly addressing their AI security risk.

At the end of the article, we leave you with a set of questions to help your organisation start taking steps to protect its AI systems.


Assumption 1: AI developers and engineers have coverage over the cybersecurity risks.

AI and data teams do so much that it's easy to forget they may not be thinking about cybersecurity in the depth that is needed.

AI is already a massive discipline, requiring knowledge of statistics, business, computer science, software engineering and more. Additionally, the field of cybersecurity is broad, requiring a different mentality and approach. Expecting AI teams to cover both is a lot.

When businesses don't consider that AI security may not currently be a priority for, or in the awareness of, their AI teams, they leave themselves vulnerable to attacks that cost them money and their reputation.

Case Study: Models loaded from public repositories can contain malware.

It is a common data science practice to load machine learning models from public repositories. However, unsafe model sharing data formats, such as Pickle, are still being used due to their convenience and popularity.

Following this practice without safeguards can introduce malware and result in arbitrary code being executed in production or development environments.

Protect AI scanned over 400,000 models on a commonly used model and data repository (Hugging Face) and found that 3,354 models use functions which can execute arbitrary code on model load or inference. Of those, 1,347 bypass the built-in security scans.
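To make the Pickle risk concrete, here is a minimal sketch of inspecting a pickle file without loading it, using only Python's standard library. It is an illustration under stated assumptions, not how Protect AI or Hugging Face scan models: the opcode list and the `find_suspicious_opcodes` and `HarmlessPayload` names are our own, and the "payload" only calls `print`.

```python
import pickle
import pickletools
from typing import List

# Opcodes that let a pickle import objects or call them while it is being loaded.
# This set is an illustrative assumption, not an exhaustive or official list.
SUSPICIOUS_OPCODES = {
    "GLOBAL", "STACK_GLOBAL", "INST", "OBJ", "NEWOBJ", "NEWOBJ_EX", "REDUCE",
}


def find_suspicious_opcodes(data: bytes) -> List[str]:
    """Walk the pickle stream statically and return any risky opcode names.

    pickletools.genops only parses the bytes; it never executes them.
    """
    return [
        opcode.name
        for opcode, _arg, _pos in pickletools.genops(data)
        if opcode.name in SUSPICIOUS_OPCODES
    ]


class HarmlessPayload:
    """Hypothetical stand-in for a malicious object.

    Unpickling it would call print(); an attacker would call something worse.
    """

    def __reduce__(self):
        return (print, ("code ran during unpickling",))


# Creating the bytes is safe; only pickle.load / pickle.loads would run the call.
plain_weights = pickle.dumps({"weights": [0.1, 0.2, 0.3]})
crafted_file = pickle.dumps(HarmlessPayload())

plain_findings = find_suspicious_opcodes(plain_weights)
crafted_findings = find_suspicious_opcodes(crafted_file)
print("plain data:", plain_findings)
print("crafted file:", crafted_findings)
```

Legitimate model files often use these opcodes too, so a check like this is only a first signal. In practice teams tend to pair a maintained scanner with safer formats that store weights as plain data.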

Case Study: Oligo found thousands of publicly exposed Ray servers compromised.

Ray is a widely used open-source AI framework made by Anyscale. One of its core functions is to manage distributed workloads for training AI. It is used in production by Uber, OpenAI and Amazon.

Anyscale says that Ray is not intended for use outside of a strictly controlled network environment. However, beginner boilerplate code provided by Anyscale (and other projects) has a setup that results in the Dashboard being publicly available and vulnerable.

Many teams are unaware of this best practice. As a result, Oligo's research found thousands of servers being exploited, exposing their organisations’ AI environments, including access to their compute, AI models, training data, database credentials, SSH keys and third-party tokens.
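One way to catch this kind of misconfiguration early is a simple pre-deployment check on the address a dashboard is bound to. The sketch below is generic and uses only the standard library; `listens_beyond_localhost` is a hypothetical helper of ours, not part of Ray, and the actual setting to check depends on how your Ray cluster is configured.

```python
import ipaddress


def listens_beyond_localhost(bind_host: str) -> bool:
    """Return True if a service bound to bind_host is reachable from other machines.

    Assumption: bind_host is the host value taken from your own deployment config.
    Hostnames we cannot parse as an IP address are treated as exposed, to be safe.
    """
    if bind_host.strip().lower() == "localhost":
        return False
    try:
        address = ipaddress.ip_address(bind_host.strip())
    except ValueError:
        return True  # unknown hostname: assume the worst and flag it for review
    return not address.is_loopback


# Example values a deployment config might contain (illustrative only).
for host in ["127.0.0.1", "localhost", "0.0.0.0", "10.0.0.5"]:
    status = "REVIEW: reachable from the network" if listens_beyond_localhost(host) else "ok: local only"
    print(f"{host:>10} -> {status}")
```

A flagged address is not automatically a problem, since a cluster inside a tightly controlled network may need one. The point is that the exposure becomes a deliberate, reviewed decision rather than a default copied from a tutorial.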

Assumption 2: That traditional cybersecurity practices are enough to protect AI systems.

AI systems have novel vulnerabilities that need to be considered in addition to standard cyber security threats.

AI systems have a stochastic element that traditional, deterministic software systems don’t have. As a result, current cybersecurity measures have a blind spot for AI weaknesses that haven't necessarily been present in non-AI systems. New methods are being developed to exploit these AI-specific weaknesses.

Many of these methods can be:

- Easier to carry out with lower levels of access: many of them only require the attacker to have access to open source artefacts, or access to the AI service as a user.

- Subtler: because these methods are less intrusive, it takes additional intentional effort to detect whether someone is just using the system or is trying to subvert it.

- Unexpected in their consequences: current cybersecurity practices may not account for these methods, as they are relatively new.

There are ways to defend against these methods, but as AI security is an emerging field, not all cybersecurity experts are aware of them. If businesses don’t address these emerging threats to AI security, it could lead to the loss of information and operational efficiency.

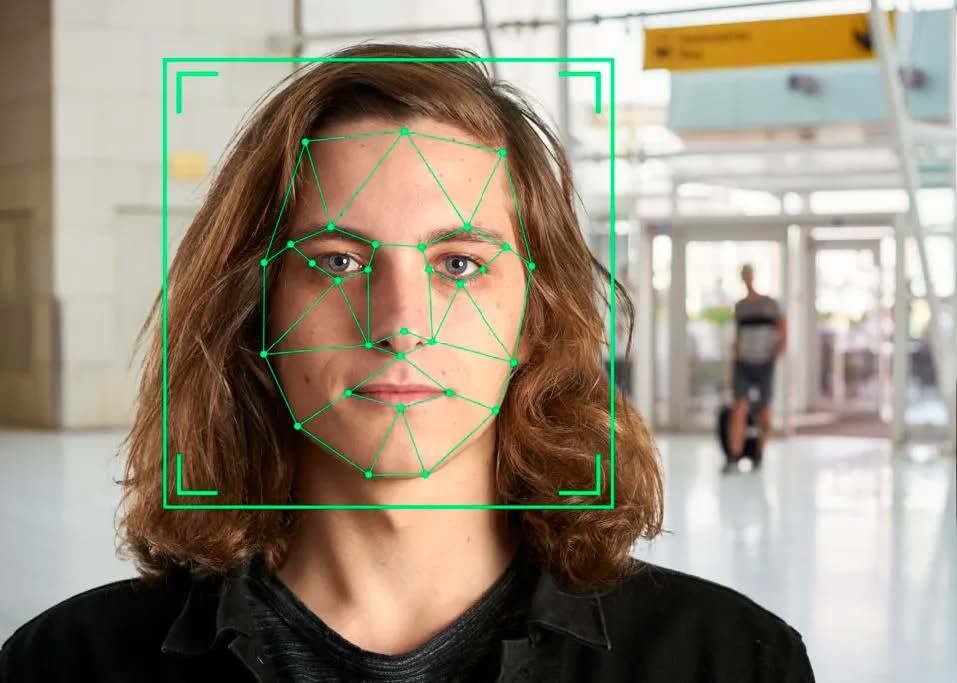

Case Study: Fooling AI-based face recognition biometric authentication

There are various high-tech and low-tech methods to fool facial recognition authentication.

For a low-tech example, in 2019, fourth graders were able to unlock a smart locker operated by Hive Box using a printed photo of the intended recipient’s face. China’s State Administration for Market Regulation (SAMR) also found in its own investigation that 15 percent of the 40 leading smart lock models it tested could be compromised using photographs.

More recently, in 2021, China’s State Taxation Administration lost 500 million yuan (~USD 76.2 million) to fraudulent tax claims after two men spoofed the Administration's facial recognition identity verification system. The hackers turned high-definition photographs into videos, using techniques that spoofed the liveness detection and bypassed facial authentication.

Case Study: Replication of AI models resulting in IP theft.

There are techniques to replicate machine learning models without access to the data they were trained on. Many of these techniques use the inputs and outputs of a model, something a person can generate with only user-level access to the target AI model.
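As a toy illustration of why inputs and outputs alone can be enough, the sketch below recovers the parameters of a simple linear scoring model purely by querying it. Everything here is hypothetical: `make_target_model` stands in for a remote AI service, and the weights are made up. Real models are far more complex and real attacks typically need many more queries, but the principle is the same.

```python
from typing import Callable, List, Tuple

Model = Callable[[List[float]], float]


def make_target_model() -> Model:
    """Hypothetical 'victim' service: a linear model whose parameters are secret."""
    secret_weights = [0.8, -1.5, 2.0]
    secret_bias = 0.25

    def predict(features: List[float]) -> float:
        return secret_bias + sum(w * x for w, x in zip(secret_weights, features))

    return predict


def extract_linear_model(query: Model, n_features: int) -> Tuple[List[float], float]:
    """Recover weights and bias using only n_features + 1 ordinary queries."""
    zero = [0.0] * n_features
    bias = query(zero)  # an all-zero input reveals the bias
    weights = []
    for i in range(n_features):
        probe = list(zero)
        probe[i] = 1.0  # switching on one feature reveals its weight
        weights.append(query(probe) - bias)
    return weights, bias


# The attacker only ever calls target(...), exactly as a normal user would.
target = make_target_model()
stolen_weights, stolen_bias = extract_linear_model(target, n_features=3)


def surrogate(features: List[float]) -> float:
    """The attacker's copy, built from the recovered parameters."""
    return stolen_bias + sum(w * x for w, x in zip(stolen_weights, features))


test_input = [1.0, 2.0, 3.0]
print("target   :", target(test_input))
print("surrogate:", surrogate(test_input))
```

From the service's point of view, these four queries look like normal usage, which is why this class of attack is hard to spot with traditional monitoring alone.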

Students managed to replicate GPT-2 within 2 days of release. They used openly available data and an estimated $50,000 worth of cloud computing from Google to train the model from scratch. In 2024, a former Tesla AI director allegedly replicated the model for $674. It is not known how much it cost OpenAI to develop the original GPT-2 model.

“We demonstrate that many of the results of the paper can be replicated by two masters students, with no prior experience in language modelling. Because of the relative ease of replicating this model, an overwhelming number of interested parties could replicate GPT-2”

Assumption 3: That high-level AI strategies or compliance training provides enough AI Security guidance.

Most AI policies have at least one of these problems:

- They are too high-level and hard to translate into actionable practice.

- They are too lengthy or complicated for time-poor businesses, which have to spend too much time deciphering what is relevant to their organisation’s specific context.

- They don’t mention AI security at all. If they do use the word ‘secure’, they overlook the complexities of additional AI weaknesses.

It is important to ask your team whether they feel empowered to design, develop, deploy and operate AI systems securely. Organisations without explicit AI security functions are disadvantaged due to the inherent complexities of AI security.

In a survey of businesses in key sectors run by the UK government, micro businesses, organisations using only one AI technology and those that had purchased off-the-shelf AI products were found to be less likely to have any cyber security practices specifically for their AI technology.

Overall, that survey found that 60% of businesses that have AI either didn’t have, or weren’t sure if they had, any specific cyber security processes in place for their AI technology. If you use or develop AI systems, it's important to have good AI security practices.

To quickly test your organisation’s AI security against these assumptions, ask:

Assumption 1: AI developers and engineers have coverage over the cybersecurity risks.

- Do you have cyber security resources focused on assessing the cybersecurity of your AI products?

- Are your AI developers and engineers primed to consider secure practices throughout the design, development, deployment and operation of AI systems?

Assumption 2: That traditional cybersecurity practices are enough to protect AI systems.

- Are your cyber security teams aware that AI systems have novel vulnerabilities that need to be considered alongside standard cyber security threats?

- Do your organisation’s cybersecurity practices account for these novel vulnerabilities (for example, in risk assessments, security audits, and threat detection and response)?

Assumption 3: That high-level AI strategies or compliance training provides enough AI Security guidance.

- Do you have AI policies or AI training that identify and explore the security of AI?

- Are your policies compliant with the recently developed AI regulations and legislation that apply to you?

- Are your AI and cyber teams able to translate these policies and training into actionable practices?

Reflecting on these questions is the first step in making sure that your AI systems are secure from cyber threats. Another important step is to invest in skills development and ensure a competent workforce. This can be through dedicating resources to train staff on AI security, or bringing in external AI security experts to assess your organisation’s AI security posture while building up capabilities.

AI security is a new and complex field, and we understand that learning about it can be overwhelming. That's why we have developed a free 30-minute primer on AI security to guide you through it. Additionally, Mileva provides advisory services and training to help both executives and practitioners understand how to implement AI security solutions.

While it may take a village to have secure AI, the benefits of AI make it worth it.