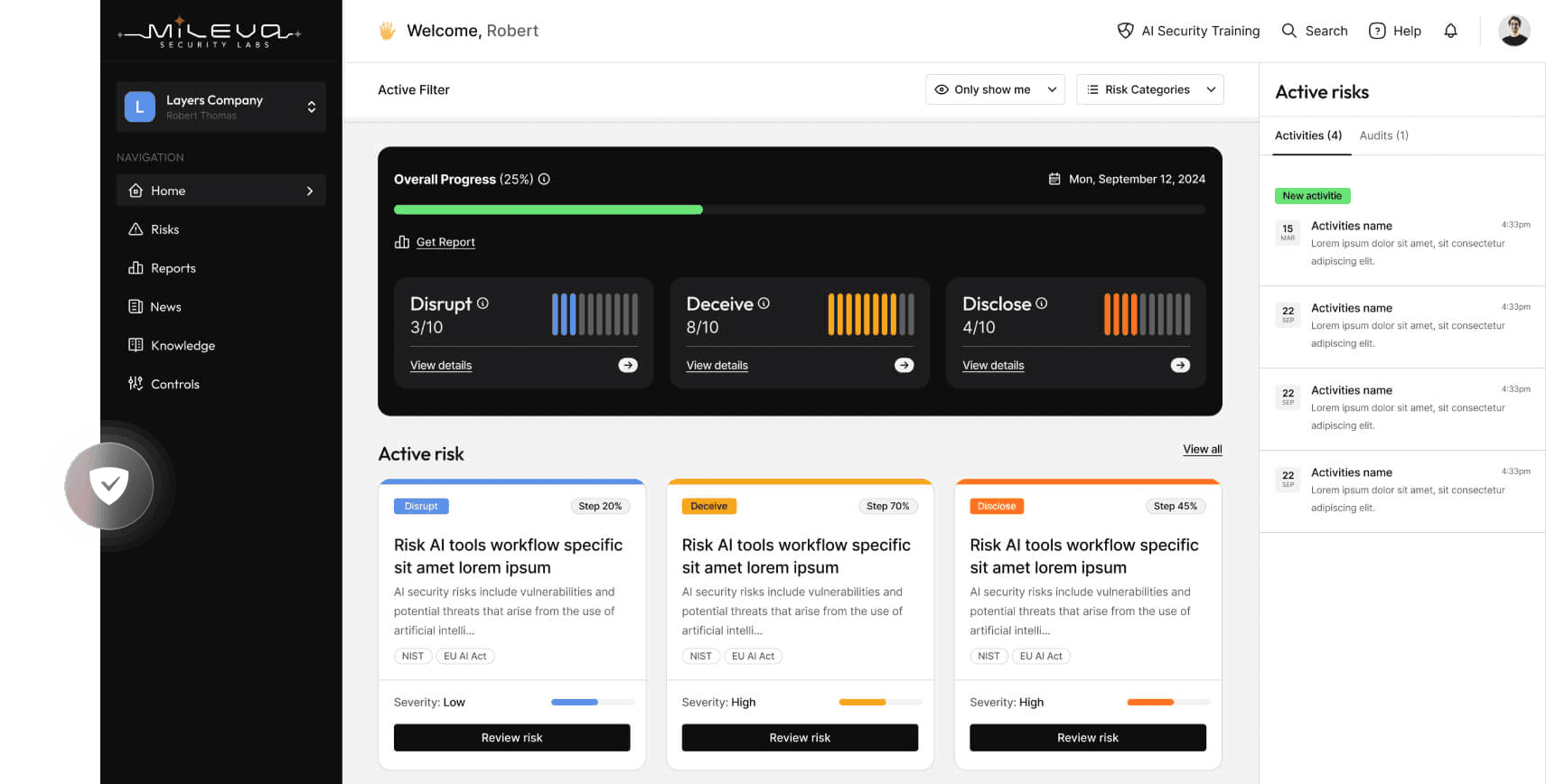

Fortnightly Digest 31 March 2025

Welcome to the sixth edition of the Mileva Security Labs AI Security Digest! We’re so glad to have you here; by subscribing, you are helping to create a safe and secure AI future.

This fortnight saw a wave of high-severity vulnerabilities disclosed across popular AI developer tools and platforms. Critical CVEs affected LlamaIndex, InvokeAI, DB-GPT, Lunary, and Anything-LLM, with issues ranging from SQL injection and remote code execution to path traversal and privilege escalation. Notably, several vulnerabilities allowed unauthorised file deletion or full database exports, posing serious risks to data confidentiality, data integrity, and system availability. From this edition onward, CVEs are grouped by affected technology to help you quickly assess which tools in your stack may need urgent patching or review.

In research this fortnight, Anthropic and others pushed the frontier of AI auditing and interpretability, with promising early-stage efforts to detect hidden objectives and trace internal model logic. Meanwhile, state actors such as North Korea and tools such as DarkWatch show how adversaries are integrating AI into surveillance, disinformation, and cyber warfare. And in a sobering reminder of AI's unpredictable nature, recent studies suggest that some agents powered by large language models are already capable of autonomous self-replication.

We’ve got a lot to cover, so read on below!