Fortnightly Digest 17 March 2025

[Vulnerability] [Supply Chain] Mar 11: Sonatype Determines picklescan Is Ineffective at Detecting Malicious Pickle Files

Sonatype's security research team has identified four vulnerabilities in picklescan, a tool widely used to detect malicious content in Python pickle files. These flaws could allow attackers to bypass malware scanning, leading to the execution of arbitrary code when compromised AI/ML models are loaded. Read their full disclosure here: https://www.sonatype.com/blog/bypassing-picklescan-sonatype-discovers-four-vulnerabilities

TLDR:

picklescan is designed to scan Python pickle files for unsafe content, aiming to protect developers from malicious AI/ML models. However, Sonatype discovered multiple vulnerabilities that undermine its effectiveness:

CVE-2025-1716: Fails to treat pip as an unsafe global, allowing attackers to execute arbitrary code (by installing malicious packages) while bypassing static analysis.

CVE-2025-1889: Fails to detect hidden malicious files due to reliance on standard file extensions.

CVE-2025-1944: Vulnerable to ZIP filename tampering attacks, where inconsistencies between the filename and central directory can be exploited.

CVE-2025-1945: Fails to detect malicious pickle files when ZIP file flag bits are modified.

These vulnerabilities were responsibly reported and have all been addressed as of picklescan version 0.0.23.

Note: Individual CVE notifications are included in this digest.

How It Works:

Python's pickle module is commonly used for serialising and deserialising AI/ML models, enabling easy sharing and deployment. However, pickle is inherently unsafe: deserialising a pickle file can execute arbitrary code. Tools like picklescan aim to mitigate this risk by scanning for unsafe content. The discovered vulnerabilities allow attackers to craft malicious pickle files that evade detection by picklescan, leading to potential code execution on the host system.
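To illustrate why deserialisation is dangerous, the minimal sketch below (our own illustration, not taken from the Sonatype disclosure) uses only Python's standard pickle and pickletools modules. The Payload class is hypothetical and deliberately harmless: its __reduce__ method tells the unpickler to call eval on a trivial expression, where a real attacker would substitute something like os.system. The disassembly at the end shows the builtins/eval reference that a static scanner can find without ever calling pickle.loads.

```python
import pickle
import pickletools


class Payload:
    """Hypothetical, deliberately harmless stand-in for a malicious object."""

    def __reduce__(self):
        # Tells the unpickler how to "rebuild" this object: call eval("6 * 7").
        # A real attacker would return something like (os.system, (command,)).
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())

# Loading does not give back a Payload; it calls eval, i.e. it runs code.
result = pickle.loads(blob)
print(result)  # 42

# A static scanner never calls loads(). Disassembling the opcode stream
# reveals the builtins/eval reference without executing anything.
pickletools.dis(blob)
```

This is why scanners inspect the opcode stream rather than loading the file: any callable the pickle references can be invoked during loading.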

Implications:

Attackers could exploit these flaws to distribute malicious models that bypass security scans, leading to arbitrary code execution when the models are loaded.

Mileva’s Note: The inherent trust placed in public ML repositories like Hugging Face assumes that existing built-in tools are sufficient to assess model safety and verify model contents. AI/ML developers must recognise how often picklescan and other content-verification systems fail, and exercise extreme caution when loading pickle files, especially from untrusted sources.

It's also worth noting that these pickle-related vulnerabilities aren’t isolated findings. In a previous digest, we discussed a similar vulnerability where ReversingLabs researchers identified backdoored machine learning models hosted on Hugging Face. These models exploited the pickle serialisation format to execute reverse shells upon deserialisation, bypassing existing security scanning mechanisms.

[News] [GRC] [Safety] Mar 14: China Mandates Labels for All AI-Generated Content

China has introduced new regulations mandating that all AI-generated online content be clearly labeled, aiming to combat misinformation and fraud. The guidelines, issued by authorities including the Cyberspace Administration of China (CAC), will take effect on September 1, 2025. Read the full report here: https://www.scmp.com/news/china/politics/article/3302477/china-mandates-labels-all-ai-generated-content-fresh-push-against-fraud-fake-news

TLDR:

China has released guidelines requiring that all AI-generated content be visibly labeled to prevent deception, fraud, and the spread of misinformation. Issued by the Cyberspace Administration of China (CAC), the new guidelines aim to provide internet users with clear indicators of what content has been created or modified using AI. The rules apply to text, images, audio, and video.

These regulations respond to a surge in AI-assisted content online, the misuse of which has spread fake information and raised social concerns. In one recent incident, AI-generated images of a famous Chinese actor were used to defraud his fans.

The new guidelines stipulate that providers must include a visible watermark or label in appropriate locations, and prohibit any organisation or individual from deleting, tampering with, fabricating, or concealing such identifying labels. The rules will officially take effect on September 1, 2025, and companies operating in China will need to adapt to the compliance requirements or risk penalties.

Mileva’s Note: As AI becomes increasingly powerful, distinguishing human-created content from fabricated content is becoming more challenging. While certain organisations are developing capabilities to detect fully AI-generated content or AI-assisted image manipulation, these technologies are not accessible to, nor do they protect, everyday users. China’s labelling mandate is part of a growing trend of AI governance aimed at protecting the public from deception and manipulation. Protections like these are among the few defences end users have against disinformation and AI-assisted influence.

[Vulnerability] [CVE] [MED] Mar 3: CVE-2025-1889 - Insufficient File Extension Validation in picklescan Permits Malicious Pickle File Execution

A medium-severity vulnerability (CVE-2025-1889) has been identified in picklescan versions prior to 0.0.22, where reliance on standard file extensions for pickle file detection allows attackers to embed malicious pickle files with non-standard extensions, bypassing security scans and potentially leading to arbitrary code execution. Find the full disclosure here: https://nvd.nist.gov/vuln/detail/CVE-2025-1889

TLDR:

picklescan, a tool designed to detect unsafe pickle files, only considers files with standard pickle extensions (e.g., .pkl) in its scans. This oversight allows attackers to craft malicious pickle files with non-standard extensions that evade detection by picklescan but are still processed by Python's pickle module. Consequently, loading such files can result in arbitrary code execution, compromising system security.
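The difference between extension-based and content-based detection can be sketched with the standard library alone. In the example below, PICKLE_EXTENSIONS, selected_by_extension and looks_like_pickle are hypothetical names, and the content check (does the byte stream parse as pickle opcodes up to a STOP opcode?) is a simplified assumption, not picklescan's actual fix. Real model files are often ZIP archives (as with PyTorch), which a production scanner would also need to open.

```python
import pickle
import pickletools
from pathlib import Path

# Hypothetical extension filter, standing in for extension-based selection.
PICKLE_EXTENSIONS = {".pkl", ".pickle"}


def selected_by_extension(path: Path) -> bool:
    """Extension-based selection: files with other suffixes are never scanned."""
    return path.suffix.lower() in PICKLE_EXTENSIONS


def looks_like_pickle(data: bytes) -> bool:
    """Content-based check: does the byte stream parse as pickle opcodes?"""
    try:
        # genops raises ValueError on an unknown opcode, a malformed argument,
        # or a stream that ends before the STOP opcode.
        for _opcode, _arg, _pos in pickletools.genops(data):
            pass
    except Exception:
        return False
    return True


payload = pickle.dumps({"weights": [0.1, 0.2]})
disguised = Path("model.weights")  # non-standard extension

print(selected_by_extension(disguised))    # False: an extension filter skips it
print(looks_like_pickle(payload))          # True: the content is still a pickle
print(looks_like_pickle(b"not a pickle"))  # False
```

pickle.loads never looks at a filename, so only an inspection of the bytes themselves closes this gap.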

Affected Resources:

Product: picklescan

Affected Versions: Versions before 0.0.22

Access Level: Exploitation requires an attacker to provide a crafted pickle file with a non-standard extension to the victim, who must then load the file using Python's pickle module

Risk Rating:

Severity: Medium (CVSS Base Score: 5.6)

Impact: Low on Integrity

Exploitability: High (Network attack vector, low attack complexity, no privileges required, user interaction required)

Recommendations:

Patch Application: Upgrade to picklescan version 0.0.22 or later, which addresses this vulnerability by implementing comprehensive file content inspections regardless of file extension.

Mitigation Steps:

Avoid loading pickle files from untrusted sources.

Implement additional validation checks to ensure the integrity and safety of pickle files before deserialisation.

Consider using alternative serialisation formats that offer better security guarantees, such as JSON or XML, when possible.
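For the "additional validation checks" step, Python's documentation recommends restricting which globals an unpickler may load by overriding pickle.Unpickler.find_class. The sketch below follows that pattern; the SAFE_BUILTINS set is an illustrative assumption that would need to be replaced with a vetted list for any real model format. Because it is an allowlist, a callable that nobody thought to block (such as pip.main in CVE-2025-1716) is refused by default.

```python
import builtins
import io
import pickle

# Illustrative allowlist only; a real loader needs a vetted, format-specific set.
SAFE_BUILTINS = {"range", "complex", "set", "frozenset", "slice"}


class RestrictedUnpickler(pickle.Unpickler):
    def find_class(self, module, name):
        # Every global a pickle references is resolved here, so refusing
        # anything off the allowlist blocks eval, os.system, pip.main, etc.
        if module == "builtins" and name in SAFE_BUILTINS:
            return getattr(builtins, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data: bytes):
    """Like pickle.loads, but enforces the allowlist above."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


class Payload:
    """Hypothetical harmless payload that would call eval() if unpickled."""

    def __reduce__(self):
        return (eval, ("6 * 7",))


print(restricted_loads(pickle.dumps([1, 2, range(3)])))  # [1, 2, range(0, 3)]

try:
    restricted_loads(pickle.dumps(Payload()))
    outcome = "loaded"
except pickle.UnpicklingError as exc:
    outcome = f"blocked: {exc}"
print(outcome)  # blocked: global 'builtins.eval' is forbidden
```

Plain data such as lists, dicts and numbers needs no globals at all, so it still loads; anything that names a callable must be explicitly permitted.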

[News] [Government] Mar 13: UK Technology Secretary Uses ChatGPT For Policy Guidance

Peter Kyle, the UK's Technology Secretary, has been leveraging OpenAI's ChatGPT for various aspects of his ministerial duties, including policy insights, media engagement strategies, and understanding complex scientific concepts. Read the full article here: https://www.newscientist.com/article/2472068-revealed-how-the-uk-tech-secretary-uses-chatgpt-for-policy-advice/

TLDR:

In a recent revelation, Peter Kyle, the UK's Secretary of State for Science, Innovation, and Technology, has been utilising OpenAI's ChatGPT to assist with various aspects of his ministerial duties. According to information obtained through a Freedom of Information (FoI) request, Kyle sought the AI's guidance on topics such as suitable podcasts for outreach and explanations of complex scientific concepts.

Kyle inquired about which podcasts would best help him reach a wide audience relevant to his ministerial responsibilities. ChatGPT recommended programs like BBC Radio 4's "The Infinite Monkey Cage" and "The Naked Scientists." Additionally, Kyle used the AI tool to gain simplified explanations of intricate subjects, including "quantum," "antimatter," and "digital inclusion." He also explored reasons behind the slow adoption of AI among small and medium-sized British businesses, with ChatGPT providing a ten-point analysis highlighting factors such as limited awareness, regulatory concerns, and lack of institutional support.

A spokesperson for the Department for Science, Innovation, and Technology confirmed Kyle's use of ChatGPT, stating that while he employs the technology, it does not replace the comprehensive advice he routinely receives from officials. This approach aligns with the government's broader strategy to leverage AI for efficiency.

Mileva’s Note: Unsurprisingly, Kyle’s actions have sparked debate on the role of AI in government decision-making. In this instance, however, Secretary Kyle's use of ChatGPT appears to be a practical and intended application of technology to improve information-gathering and outreach efforts. Kyle’s use of ChatGPT doesn’t impact citizens directly; it’s simply a tool, functioning like an advanced search engine and saving him time. The argument can be made that Kyle should rely on trusted advisors for critical matters, but in low-risk scenarios, AI is well-suited to help fill gaps efficiently. If Kyle’s interactions shifted from casual inquiry to reliance on ChatGPT as a crutch for policy decisions, however, his usage would need to be re-evaluated.

[Vulnerability] [CVE] [MED] Feb 26: CVE-2025-1716 - Incomplete List of Disallowed Inputs in picklescan Allows Execution of Malicious PyPI Packages

A medium-severity vulnerability (CVE-2025-1716) has been identified in picklescan versions before 0.0.21, where the tool fails to treat 'pip' as an unsafe global. This oversight allows attackers to craft malicious models that, when deserialized, can execute arbitrary code by installing malicious packages via pip.main(), potentially leading to remote code execution. Find the full disclosure here: https://nvd.nist.gov/vuln/detail/CVE-2025-1716

TLDR:

picklescan, a security scanner designed to detect unsafe pickle files, does not include 'pip' in its list of restricted globals. This omission permits attackers to create malicious models that use Python's pickle module to invoke pip.main(), fetching and installing malicious packages from repositories like PyPI or GitHub. Consequently, such models can pass picklescan's security checks undetected, posing significant security risks.
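The failure mode in CVE-2025-1716 is characteristic of denylist scanning: anything not on the list passes. The sketch below walks a pickle's opcodes with pickletools.genops (nothing is executed) and checks each imported global against a denylist. OLD_DENYLIST and NEW_DENYLIST are hypothetical and far smaller than picklescan's real list, the STACK_GLOBAL handling is simplified, and PIP_PAYLOAD is a hand-written protocol-0 pickle that would call pip.main() if it were ever loaded (a real attack would also pass install arguments).

```python
import pickle
import pickletools

# Hypothetical denylists for illustration; NOT picklescan's real lists.
OLD_DENYLIST = {"os", "posix", "nt", "subprocess", "builtins.eval", "builtins.exec"}
NEW_DENYLIST = OLD_DENYLIST | {"pip"}

STRING_OPS = {"SHORT_BINUNICODE", "BINUNICODE", "BINUNICODE8", "UNICODE"}


def referenced_globals(data: bytes) -> list[str]:
    """Return "module.name" for each global a pickle imports, without loading it."""
    found: list[str] = []
    recent_strings: list[str] = []
    for opcode, arg, _pos in pickletools.genops(data):
        if opcode.name in ("GLOBAL", "INST"):
            # Protocols 0-3 carry the import as one "module name" argument.
            module, name = arg.split(" ", 1)
            found.append(f"{module}.{name}")
        elif opcode.name in STRING_OPS:
            recent_strings.append(arg)
        elif opcode.name == "STACK_GLOBAL" and len(recent_strings) >= 2:
            # Protocol 4+ pushes module and name as strings, then STACK_GLOBAL.
            # Simplification: assumes they were the last two strings pushed,
            # so a memoised string fetched back with BINGET would be missed.
            found.append(f"{recent_strings[-2]}.{recent_strings[-1]}")
    return found


def is_flagged(data: bytes, denylist: set[str]) -> bool:
    """True if any imported global matches the denylist by module or full name."""
    for ref in referenced_globals(data):
        if ref.split(".", 1)[0] in denylist or ref in denylist:
            return True
    return False


# Hand-written protocol-0 pickle: GLOBAL pip.main, empty argument tuple, REDUCE.
# If loaded it would call pip.main(); here it is only scanned, never loaded.
PIP_PAYLOAD = b"cpip\nmain\n(tR."

print(referenced_globals(PIP_PAYLOAD))               # ['pip.main']
print(is_flagged(PIP_PAYLOAD, OLD_DENYLIST))         # False: slips through
print(is_flagged(PIP_PAYLOAD, NEW_DENYLIST))         # True
print(is_flagged(pickle.dumps(eval), OLD_DENYLIST))  # True (builtins.eval)
```

Adding 'pip' fixes this one bypass, but the next overlooked module would pass the same way, which is why the allowlist approach is generally the safer default.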

Affected Resources:

Product: picklescan

Affected Versions: Versions before 0.0.21

Access Level: Exploitation requires an attacker to provide a crafted pickle file to the victim, who must then deserialise the file using Python's pickle module.

Risk Rating:

Severity: Medium (CVSS Base Score: 5.3)

Impact: Low on Integrity

Exploitability: High (Network attack vector, low attack complexity, no privileges required, user interaction required)

Recommendations:

Patch Application: Upgrade to picklescan version 0.0.21 or later, which addresses this vulnerability by treating 'pip' as an unsafe global, thereby preventing such exploitation.

Mitigation Steps:

Avoid loading pickle files from untrusted or unauthenticated sources.

Implement additional validation checks to ensure the integrity and safety of pickle files before deserialisation.

Consider using alternative serialisation formats that offer better security guarantees, such as JSON or XML, when possible.

[Vulnerability] [CVE] [MED] Mar 9: CVE-2025-2129 - Insecure Default Initialisation in Mage AI Leading to Potential Remote Code Execution

A medium-severity vulnerability identified as CVE-2025-2129 has been reported in Mage AI version 0.9.75, where insecure default initialisation of resources could potentially lead to remote code execution. Find the full disclosure here: https://nvd.nist.gov/vuln/detail/CVE-2025-2129

TLDR:

Mage AI version 0.9.75 has a reported issue concerning insecure default initialisation of resources. This vulnerability could, under certain conditions, allow remote attackers to execute arbitrary code. However, the attack complexity is high, and successful exploitation is considered difficult.

Note: the existence of this vulnerability is disputed by Mage AI, and no official patch has been released.

Affected Resources:

Product: Mage AI

Affected Version: 0.9.75

Access Level: Remote exploitation without authentication; high attack complexity

Risk Rating:

Severity: Medium (CVSS Base Score: 5.6)

Impact: Low on Confidentiality, Integrity, and Availability.

Exploitability: Low (Network attack vector, high attack complexity, no privileges required, no user interaction).

Recommendations:

Mage AI does not accept this issue as a valid security vulnerability and has confirmed that it will not address it. As such, no patches have been released.

[Research] [Industry] [Microsoft] Mar 12: Part 1 - Understanding Failure Modes in Machine Learning Systems

This summary distills insights from Microsoft's "Failure Modes in Machine Learning" report, authored by Ram Shankar Siva Kumar, David O’Brien, Kendra Albert, Salomé Viljoen, and Jeffrey Snover, released on March 12, 2025. The full report is available at: https://learn.microsoft.com/en-us/security/engineering/failure-modes-in-machine-learning

TLDR:

Microsoft's report provides a structured taxonomy of failure modes in machine learning (ML) systems, categorising them into intentional (adversarial) and unintentional (design-based) failures. By standardising terminology for ML security failures, the report aims to give security teams, developers, policymakers, and legal professionals a shared vocabulary to assess, mitigate, and discuss ML vulnerabilities consistently.

How it Works:

The report was created to address the lack of a standardised framework for classifying ML failures. While cyber security has well-defined vulnerability taxonomies, AI security remains fragmented, with different stakeholders approaching risks from disparate perspectives. This inconsistency makes it difficult to establish best practices, develop targeted mitigations, and create a common regulatory approach.

The report introduces a taxonomy of ML failure modes, divided into two primary categories:

Intentional Failures: Occur when adversaries actively attempt to subvert an ML system for malicious purposes.

For example, adversarial examples, data poisoning, model inversion and model stealing.

Unintentional Failures: Arise from inherent design flaws or unforeseen scenarios, leading to unsafe outputs.

Examples include distributional shifts, overfitting, underfitting, and data leakage.

Microsoft emphasises that ML-specific failures differ fundamentally from traditional software failures and require bespoke security approaches. Unlike conventional IT vulnerabilities, ML failures often arise from inherent weaknesses in data, algorithms, and model assumptions, meaning fixes are rarely as simple as patching a software bug.

Implications:

The report serves as a living document that will progress alongside the AI threat landscape. It does not prescribe specific technological mitigations but provides a structured framework for understanding ML failures, supporting existing threat modelling, security planning, and policy efforts.

According to the report, it provides value to various stakeholders:

For Security Teams & AI Red Teams: The document provides an overview of possible ML failure modes and a threat modelling framework to help identify attacks, vulnerabilities, and countermeasures. It integrates with existing incident response processes via a dedicated bug bar, mapping ML vulnerabilities alongside traditional software security issues and assigning them severity ratings (e.g., critical, important).

For Developers & ML Engineers: The taxonomy enables engineers to plan for countermeasures against ML-specific risks where available. By referencing the threat modelling document, engineers can systematically assess vulnerabilities in their models and factor security into system architecture decisions before deployment rather than applying fixes reactively.

For Regulatory & Compliance Professionals: The document organises ML failure modes to help regulators and compliance teams draw clear distinctions between causes and consequences of AI failures. It provides a foundation for assessing how existing legal and regulatory frameworks apply to ML security and where policy gaps exist.

For Enterprises & AI Users: The framework allows organisations to assess their AI security posture holistically, correlating ML-specific risks with traditional IT security challenges.

Mileva’s Note: Our work often involves helping organisations distinguish ML-specific attack surfaces from traditional IT vulnerabilities. As we advocate, risk management must be conducted both individually per asset and collectively across IT and AI systems to address unique ML risks while mitigating inherited security weaknesses.

As AI security gains traction, structured taxonomies like this will improve threat modelling, risk assessment, and incident response by providing a common frame of reference. This effort mirrors what the Common Weakness Enumeration (CWE) brought to cyber security: a standardised language for describing security flaws, which has since driven improvements in threat intelligence, vulnerability management, and regulatory coordination.

We have also noted a concerning lack of AI security incident disclosure relative to the scale of AI deployments. One of our hypotheses is that many AI-native engineers and product teams lack familiarity with responsible disclosure—there is no widely accepted AI-specific reporting mechanism akin to CVE for software vulnerabilities. Without a standard process for documenting AI security incidents, risks are underreported, response strategies remain fragmented, and systemic vulnerabilities persist unchecked.

If AI security is to mature, industry-wide disclosure frameworks and threat modelling processes must be established.

[Research] [Industry] [Microsoft] Mar 12: Part 2 - Threat Modeling AI/ML Systems and Dependencies

This summary distills insights from Microsoft's "Threat Modeling AI/ML Systems and Dependencies" guidance, authored by Andrew Marshall, Jugal Parikh, Emre Kiciman, and Ram Shankar Siva Kumar, released on March 12, 2025. The full document is available at: https://learn.microsoft.com/en-us/security/engineering/threat-modeling-aiml

TLDR:

Microsoft's guidance introduces a structured approach to threat modelling for AI/ML systems, supplementing traditional Security Development Lifecycle (SDL) practices. It outlines the unique challenges AI/ML systems face and provides a framework for systematically identifying and mitigating security risks. The document is designed to help security engineers, AI/ML developers, and policymakers build AI/ML systems with security in mind from the outset, rather than applying mitigations reactively.

How it Works:

Traditional software threat modelling does not fully capture AI/ML-specific risks. AI systems introduce new attack surfaces, particularly around data integrity, model behaviour, and adversarial inputs. Microsoft’s guidance provides a structured approach to identifying threats, assessing vulnerabilities, and planning mitigations, tailored specifically to AI/ML environments.

The document is structured into two primary sections:

Key Considerations for AI/ML Threat Modelling: AI/ML systems require a re-evaluation of trust boundaries, particularly around training data sources, inference processes, and external dependencies. The guidance encourages teams to assess risks at each stage of the AI pipeline, integrating security into system design rather than retrospectively attempting to address it.

AI/ML-specific Threats and Mitigations: The document outlines the unique attack vectors AI systems face, including data poisoning, adversarial examples, model inversion, and model stealing.

It also introduces Microsoft's bug bar, which maps AI vulnerabilities alongside traditional software flaws and assigns severity ratings (e.g., critical, important). This system is designed to integrate into existing incident response and security processes, making it easier for teams to assess and prioritise AI security risks.

Implications & Mileva’s Note

See article: “[Research] [Industry] [Microsoft] Mar 12: Part 1 - Understanding Failure Modes in Machine Learning Systems”

[News] [GRC] [Penalty] Mar 12: Spain Proposes Hefty Fines for Unlabeled AI-Generated Content

Spain's Council of Ministers has approved draft legislation imposing significant fines on companies that fail to label AI-generated content, aiming to combat the spread of "deepfakes" and improve transparency in digital media. Read the full article here: https://www.euronews.com/next/2025/03/12/spain-could-fine-ai-companies-up-to-35-million-in-fines-for-mislabelling-content

TLDR:

Spain's government has approved a draft law imposing substantial fines on companies that fail to label AI-generated content appropriately. Failing to do so is classified as a serious offence, with penalties ranging from €7.5 million to €35 million, or 2% to 7% of a company's global turnover. The legislation also considers reduced penalties for startups and medium-sized enterprises to avoid stifling innovation.

The bill prohibits the use of subliminal techniques, such as imperceptible images or sounds, to manipulate individuals without their consent. For instance, AI-driven tools that exploit vulnerable populations, like encouraging gambling addictions, would be banned.

Additionally, the legislation seeks to prevent AI systems from classifying individuals based on biometric data, social media activity, or personal characteristics like race, religion, political views, or sexual orientation. This measure responds to concerns about AI-driven discrimination and unethical profiling, particularly in predictive policing.

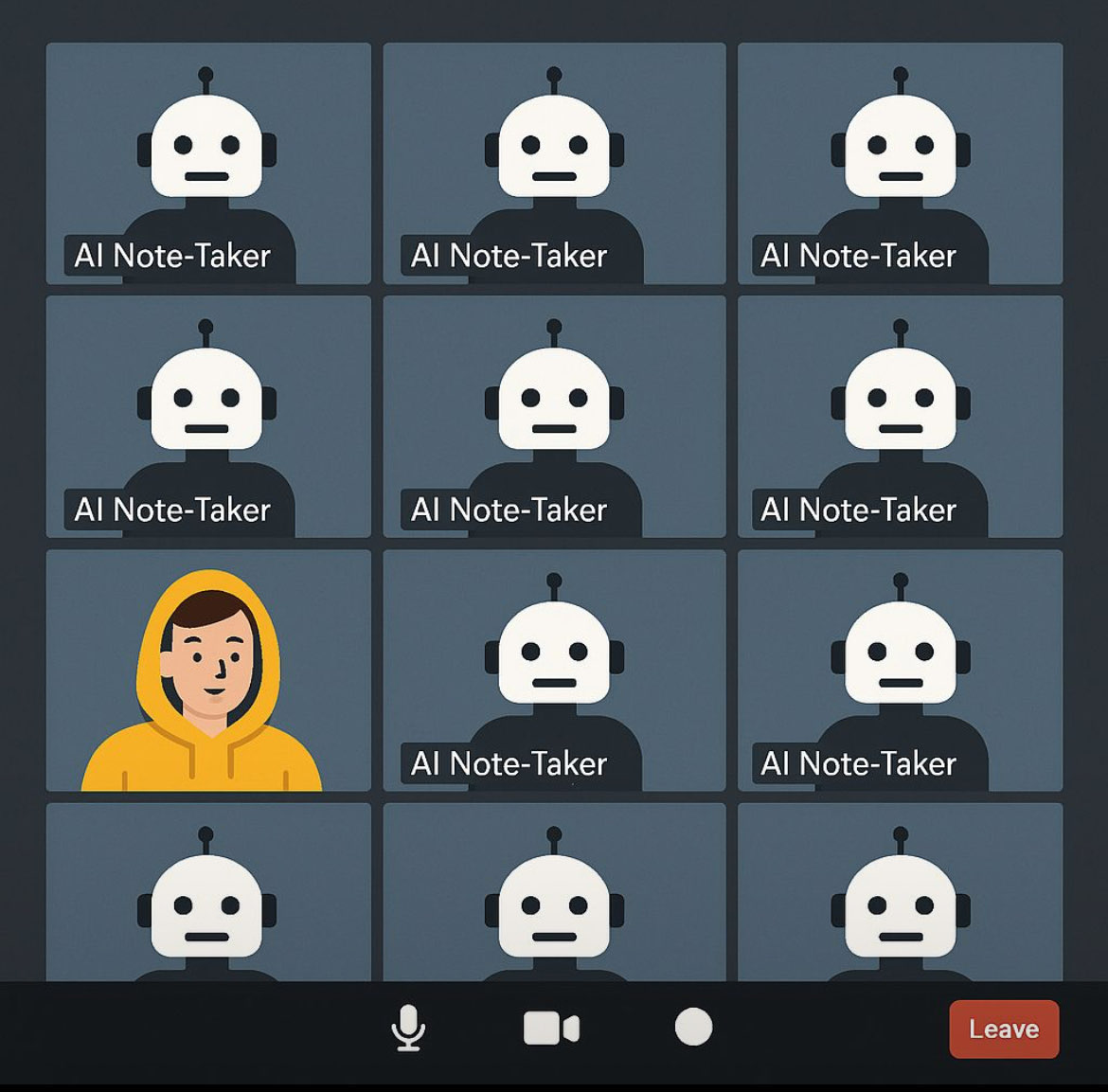

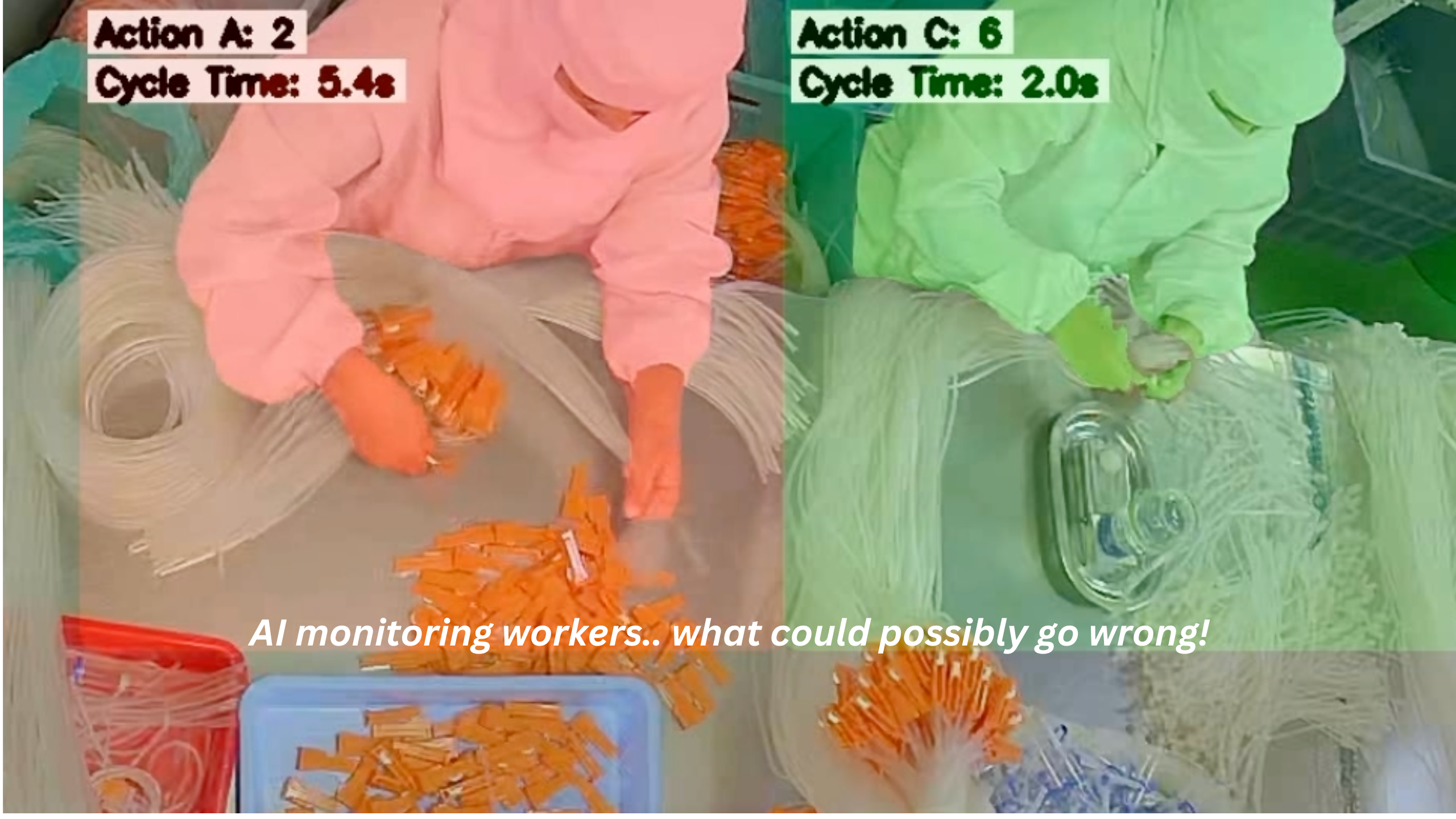

The law mandates human oversight for AI systems monitoring employee attendance. Companies lacking such supervision could face fines up to €7.5 million or 1% to 2% of their global earnings. Moreover, the government reserves the right to suspend AI systems implicated in serious incidents, including fatalities.

To enforce these regulations, Spain plans to establish the Spanish Agency for the Supervision of Artificial Intelligence (AESIA), responsible for overseeing compliance. The draft law now awaits approval from the Congress of Deputies before it can be enacted.

Mileva’s Note: If enacted, Spain will position itself among the leading European nations in enforcing AI regulations that prioritise user safety over unchecked AI innovation. While such laws aim to protect individuals and maintain ethical standards, there is continued concern that they could make the EU less attractive for AI companies, particularly startups that may lack the resources to navigate intensive regulatory landscapes.

[News] [GRC] Mar 12: French Publishers and Authors Sue Meta Over AI Training Practices

French publishing and authors' associations have filed a lawsuit against Meta Platforms Inc., alleging unauthorised use of copyrighted works to train its artificial intelligence (AI) models. Read the full article here: https://apnews.com/article/france-meta-artificial-intelligence-lawsuit-copyright-168b32059e70d0509b0a6ac407f37e8a

TLDR:

The National Publishing Union (SNE), the National Union of Authors and Composers (SNAC), and the Society of Men of Letters (SGDL) have taken legal action against Meta in a Paris court, marking the first major lawsuit of its kind in France. The plaintiffs claim that Meta has engaged in the "massive use of copyrighted works without authorisation" to train its generative AI models, which power platforms such as Facebook, Instagram, and WhatsApp. They argue that these AI models rely on vast amounts of written content, much of which belongs to authors and publishers who have not granted permission for its use.

The lawsuit demands that Meta delete any datasets containing copyrighted material obtained without consent and cease the use of protected works for AI training. While Meta has not publicly responded to the lawsuit, similar legal challenges have been mounting in other countries, particularly in the United States, where authors, artists, and other copyright holders have accused major tech companies of misusing their intellectual property. The outcome of this case could set a precedent for AI development and content ownership in Europe, influencing how generative AI models are trained and whether tech companies will be required to compensate creators for the use of their works.

Mileva’s Note: Once again, AI-related legal action in the EU places growing emphasis on protecting individuals and creators rather than accelerating innovation. The EU continues to prioritise AI safety and regulatory measures, potentially to the detriment of regional AI development. EU or not, the legal and ethical challenges surrounding AI training are increasingly salient, as companies amass vast datasets without necessarily securing explicit consent. While AI systems depend on large-scale content ingestion to function effectively, the unchecked use of copyrighted works is in tension with fair compensation and intellectual property rights. The outcome of this case could have broader implications, not just for creative industries but also for personal data usage in AI training, particularly concerning the inclusion of personally identifiable information (PII) without user consent.

[Research] [Industry] Mar 7: Takeaways from Wiz's State of AI in the Cloud 2025 Report

This summary distills insights from Wiz's "2025 State of AI in the Cloud Report," authored by Amitai Cohen and Dan Segev, released on March 7, 2025. The full report is available at: https://www.wiz.io/blog/state-of-cloud-ai-report-takeaways

TLDR:

Wiz's latest report details a surge in AI adoption within cloud environments, with 85% of organisations integrating AI technologies. Self-hosted AI models have substantially increased in popularity, rising from 42% to 75% over the past year. The report also discusses the proliferation of DeepSeek models and highlights security vulnerabilities associated with AI deployments.

How it Works:

The report's findings are based on an analysis of aggregated data from over 150,000 cloud accounts:

AI Adoption Trends: 85% of organisations have incorporated AI technologies, with 74% using managed AI services. The most notable growth is in self-hosted AI models, which have scaled from 42% to 75%, indicating a preference shift towards customised AI implementations and greater control over data and infrastructure.

DeepSeek's Rapid Ascendancy: The release of DeepSeek-R1 has led to a threefold increase in its adoption within a fortnight, with 7% of organisations now using DeepSeek's self-hosted models. This surge reflects the appetite for low-cost AI solutions. A significant security lapse, however, was identified when an exposed DeepSeek database leaked sensitive information, including chat histories and log streams. Additionally, geopolitical concerns have arisen, leading to bans on DeepSeek products in certain regions due to national security considerations.

Dominance of OpenAI and Open-Source Integration: OpenAI maintains a strong presence, with 63% of cloud environments using OpenAI or Azure OpenAI SDKs, up from 53% the previous year. Eight of the 10 most popular hosted AI technologies are linked to open-source projects, showing a trend towards flexibility and vendor diversity in AI deployments.

The report also identifies notable security issues uncovered over the past year (many of which we also covered in the digest):

DeepLeak: An exposed DeepSeek database compromising sensitive chat histories and log streams.

CVE-2024-0132: A critical NVIDIA AI vulnerability affecting over 35% of cloud environments.

SAPwned: A flaw in SAP AI Core that could enable attackers to commandeer services and access customer data.

Probllama: A remote code execution vulnerability in the open-source AI infrastructure project Ollama.

AI PaaS Vulnerabilities: Flaws in services provided by platforms like Hugging Face, Replicate, and SAP, potentially allowing unauthorised access to customer data.

Implications:

The near-instant adoption of models like DeepSeek-R1 shows the volatility of the AI ecosystem, with individuals and organisations chasing the latest cost-effective alternatives, often at the expense of security considerations. This trend reflects a broader industry vulnerability where hype-driven adoption cycles often outpace security readiness. The proliferation of self-hosted AI models compounds this risk, as organisations that lack AI-trained security teams are deploying high-risk AI infrastructure without sufficient oversight.

Mileva’s Note: The Wiz report reinforces that AI systems are inheriting the risks of traditional IT systems. Open-source AI frameworks, while fostering rapid innovation, introduce a security paradox: democratised access comes with democratised attack vectors. Organisations without a deep understanding of DevSecOps and open source will struggle to manage these risks, especially as adversaries increasingly target AI supply chains.

The industry's rapid uptake mirrors the cloud adoption cycle, where early enthusiasm outpaced security readiness, leading to widespread misconfigurations and breaches. AI is following this trajectory at an accelerated pace, meaning that security debt is accumulating faster than mitigation strategies can address it. Organisations must recognise that realising AI's performance gains or cost savings requires a fundamental shift in security posture. Those who fail to integrate security-first principles into their AI deployments will find themselves repeating the mistakes of past technological revolutions.

[Vulnerability] [CVE] [HIGH] Mar 11: CVE-2025-1550 - Arbitrary Code Execution in Keras Model Loading Function

A high-severity vulnerability (CVE-2025-1550) has been identified in Keras's Model.load_model function, allowing arbitrary code execution through malicious .keras archives, even when safe_mode=True. Find the full disclosure here: https://nvd.nist.gov/vuln/detail/CVE-2025-1550

TLDR:

The Model.load_model function in Keras is susceptible to arbitrary code execution via crafted .keras archives. Attackers can manipulate the config.json file within these archives to specify and execute arbitrary Python modules and functions during model loading, bypassing the safe_mode=True setting.
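The recommended fix is upgrading to Keras 3.9.0 or later. As a defence-in-depth illustration only, the sketch below opens a .keras archive (a ZIP file) with the standard library and lists every module referenced in config.json that falls outside an allowlist, before the model is ever handed to Keras. It assumes the Keras 3 config layout, in which serialised objects carry "module" and "class_name" keys; TRUSTED_PREFIXES, is_trusted and suspicious_refs are hypothetical names, and the in-memory archive is a toy, not a reproduction of the actual exploit.

```python
import io
import json
import zipfile

# Illustrative allowlist of module prefixes; an assumption, not a Keras setting.
TRUSTED_PREFIXES = ("keras",)


def is_trusted(module: str) -> bool:
    """Match whole dotted prefixes, so "keras_evil" is not mistaken for "keras"."""
    return any(module == p or module.startswith(p + ".") for p in TRUSTED_PREFIXES)


def iter_module_refs(node):
    """Yield (module, class_name) for every dict in the config that names a module."""
    if isinstance(node, dict):
        if isinstance(node.get("module"), str):
            yield node["module"], node.get("class_name")
        for value in node.values():
            yield from iter_module_refs(value)
    elif isinstance(node, list):
        for item in node:
            yield from iter_module_refs(item)


def suspicious_refs(archive_bytes: bytes) -> list:
    """List config.json module references that fall outside TRUSTED_PREFIXES."""
    with zipfile.ZipFile(io.BytesIO(archive_bytes)) as zf:
        config = json.loads(zf.read("config.json"))
    return [(m, c) for m, c in iter_module_refs(config) if not is_trusted(m)]


# Toy archive: one expected layer plus one injected reference to os.system.
buf = io.BytesIO()
with zipfile.ZipFile(buf, "w") as zf:
    zf.writestr("config.json", json.dumps({
        "module": "keras",
        "class_name": "Sequential",
        "config": {"layers": [
            {"module": "keras.layers", "class_name": "Dense", "config": {}},
            {"module": "os", "class_name": "system", "config": {}},
        ]},
    }))

print(suspicious_refs(buf.getvalue()))  # [('os', 'system')]
```

Because the check only reads JSON, it never imports anything the config names, which is the step the vulnerability abused.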

Affected Resources:

Product: Keras

Affected Versions: Versions prior to 3.9.0

Access Level: Exploitation requires local access with low privileges and active user interaction

Risk Rating:

Severity: High (CVSS Base Score: 7.3)

Impact: High on Confidentiality, Integrity, and Availability.

Exploitability: High (Local attack vector, low attack complexity, low privileges required, user interaction required).

Recommendations:

Patch Application: Upgrade to Keras version 3.9.0 or later.

[Vulnerability] [CVE] [MED] Mar 11: CVE-2025-24986 - Remote Code Execution Vulnerability in Azure PromptFlow

A medium-severity vulnerability (CVE-2025-24986) has been identified in Microsoft's Azure PromptFlow, where improper isolation or compartmentalisation allows an unauthorised attacker to execute code over a network. Find the full disclosure here: https://nvd.nist.gov/vuln/detail/CVE-2025-24986

TLDR:

Azure PromptFlow is affected by a vulnerability due to inadequate isolation mechanisms, enabling unauthorised attackers to execute arbitrary code remotely. This flaw arises from improper compartmentalisation within the service, allowing malicious actors to exploit the system over a network without prior authentication.

Affected Resources:

Product: Azure PromptFlow

Affected Versions:

Access Level: Exploitation does not require prior authentication.

Risk Rating:

Severity: Medium (CVSS Base Score: 6.5)

Impact: Low on Confidentiality and Integrity; None on Availability.

Exploitability: Low

Recommendations:

Patch Application: Microsoft has released updates to address this vulnerability. Users are advised to apply the latest patches provided by Microsoft to mitigate this issue.

[Vulnerability] [CVE] [MED] Mar 10: CVE-2025-1944 - ZIP Archive Manipulation in picklescan Leading to Detection Bypass

A medium-severity vulnerability (CVE-2025-1944) has been identified in picklescan versions prior to 0.0.23, where a ZIP archive manipulation attack can cause the scanner to crash, allowing malicious PyTorch model archives to bypass detection. Find the full disclosure here: https://nvd.nist.gov/vuln/detail/CVE-2025-1944

TLDR:

picklescan, a tool designed to detect unsafe pickle files, is vulnerable to a ZIP archive manipulation technique. Attackers can modify a member's filename in its local ZIP file header while keeping the original filename in the archive's central directory listing. This discrepancy causes picklescan to raise a BadZipFile error and crash while extracting and scanning PyTorch model archives. PyTorch's more lenient ZIP implementation, however, still loads the model despite the discrepancy, so the malicious payload bypasses detection.
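Python's own zipfile module compares the local header filename with the central directory entry when a member is opened and raises BadZipFile if they differ, which is the error described above. The sketch below reproduces that tampering in memory and wraps the scan so that it fails closed, turning any archive error into an explicit rejection instead of a crash. build_archive and scan_fail_closed are hypothetical names, the one-byte rename is an illustrative assumption rather than Sonatype's proof of concept, and the member bytes are not actually analysed here.

```python
import io
import zipfile

LOCAL_HEADER_SIZE = 30  # fixed-size part of a ZIP local file header, before the filename


def build_archive(member="archive/data.pkl", payload=b"\x80\x04N.", tamper=False):
    """Create a one-member ZIP in memory; optionally alter the name in the local header only."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_STORED) as archive:
        archive.writestr(member, payload)
    data = bytearray(buf.getvalue())
    if tamper:
        # The only local header starts at offset 0, so its filename follows the
        # 30-byte fixed part. Overwrite the last character; the central directory
        # at the end of the file still records the original name.
        data[LOCAL_HEADER_SIZE + len(member.encode()) - 1] = ord("X")
    return bytes(data)


def scan_fail_closed(data):
    """Return ("ok", names) or ("reject", reason); archive errors never count as a pass."""
    try:
        with zipfile.ZipFile(io.BytesIO(data)) as archive:
            names = []
            for name in archive.namelist():
                archive.read(name)  # a real scanner would analyse the pickle bytes here
                names.append(name)
            return ("ok", names)
    except Exception as exc:  # any parsing failure is treated as a failed scan
        return ("reject", f"{type(exc).__name__}: {exc}")


if __name__ == "__main__":
    print(scan_fail_closed(build_archive()))  # ('ok', ['archive/data.pkl'])
    print(scan_fail_closed(build_archive(tamper=True))[0])  # reject
```

The design choice that matters is the broad exception handler: whatever the parser trips over, the caller receives a rejection rather than an unscanned archive.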

Affected Resources:

Product: picklescan

Affected Versions: Versions before 0.0.23

Access Level: Exploitation requires an attacker to provide a crafted PyTorch model archive with manipulated ZIP headers to the victim, who must then scan the file using picklescan.

Risk Rating:

Severity: Medium (CVSS Base Score: 5.3)

Impact: Low on Integrity and Availability

Exploitability: High (Network attack vector, low attack complexity, no privileges required, user interaction required)

Recommendations:

Patch Application: Upgrade to picklescan version 0.0.23 or later, which addresses this vulnerability by implementing stricter ZIP file handling and validation to prevent crashes during scanning.

Mitigation Steps:

Avoid loading PyTorch model archives from untrusted sources, and treat a scanner crash or error as a failed scan rather than a pass.

Implement additional validation checks to ensure the integrity and authenticity of ZIP archives before scanning or loading.

Consider using alternative serialisation formats that cannot execute code when loaded, such as safetensors for model weights or JSON or XML for configuration data, when possible.
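One way to act on the integrity recommendation is to pin a SHA-256 digest for each approved archive, obtained through a trusted channel, and refuse to scan or load any file that does not match. The sketch below uses only hashlib and hmac from the standard library; sha256_file and verify_pinned_digest are hypothetical names, and a matching digest proves only that the file is the one you approved, not that it is benign.

```python
import hashlib
import hmac


def sha256_file(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large model archives need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_pinned_digest(path, expected_hex):
    """Return True only if the file's SHA-256 matches the pinned hex digest."""
    actual = sha256_file(path)
    # Normalise case and whitespace, then compare in constant time.
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

A loader would call verify_pinned_digest(path, pinned) first and stop before scanning or loading whenever it returns False.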

[Vulnerability] [CVE] [MED] Mar 10: CVE-2025-1945 - ZIP Flag Bit Manipulation in picklescan Allows Malicious Pickle File Evasion

A medium-severity vulnerability (CVE-2025-1945) has been identified in picklescan versions before 0.0.23, where modifying specific bits in ZIP file headers enables attackers to embed malicious pickle files that evade detection by picklescan but are still loaded by PyTorch's torch.load(), potentially leading to arbitrary code execution. Read the full disclosure here: https://nvd.nist.gov/vuln/detail/CVE-2025-1945

TLDR:

picklescan, a tool designed to detect unsafe pickle files, relies on Python’s zipfile module to extract and scan files within ZIP-based model archives. However, certain flag bits in ZIP headers affect how files are interpreted, and some of these bits cause picklescan to fail while leaving PyTorch’s loading mechanism unaffected. By modifying the flag_bits field in the ZIP file entry, an attacker can embed a malicious pickle file in a PyTorch model archive that remains undetected by picklescan but is successfully loaded by PyTorch's torch.load(). This discrepancy allows malicious payloads to bypass detection, potentially leading to arbitrary code execution when loading a compromised model.
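Python's zipfile module acts on some of these flags itself; for example, it refuses to open a member marked as encrypted (bit 0) unless a password is supplied. The sketch below illustrates a stricter pre-check: read each entry's general-purpose flag bits from the central directory and reject archives that set bits a plain model archive should not need. The allowlist is an assumption chosen for illustration, the NVD entry does not say which bits the attack modifies, unexpected_flags and set_central_flag are hypothetical names, and this is not picklescan's actual fix. Only central directory flags are checked here.

```python
import io
import zipfile

# Hypothetical allowlist of general-purpose flag bits (ZIP APPNOTE numbering):
# bits 1-2 compression options, bit 3 data descriptor, bit 11 UTF-8 filenames.
ALLOWED_FLAGS = (1 << 1) | (1 << 2) | (1 << 3) | (1 << 11)
CENTRAL_DIR_SIG = b"PK\x01\x02"


def unexpected_flags(data):
    """Return (name, bits) for each central directory entry with non-allowlisted flags."""
    findings = []
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        for info in archive.infolist():
            extra = info.flag_bits & ~ALLOWED_FLAGS
            if extra:
                findings.append((info.filename, extra))
    return findings


def set_central_flag(data, bit):
    """Demo helper: set one flag bit in the first central directory entry."""
    patched = bytearray(data)
    offset = patched.find(CENTRAL_DIR_SIG)
    if offset < 0:
        raise ValueError("no central directory entry found")
    # The flags field sits 8 bytes in: signature (4), version made by (2), version needed (2).
    flags = int.from_bytes(patched[offset + 8:offset + 10], "little") | (1 << bit)
    patched[offset + 8:offset + 10] = flags.to_bytes(2, "little")
    return bytes(patched)


if __name__ == "__main__":
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w") as archive:
        archive.writestr("archive/data.pkl", b"\x80\x04N.")
    clean = buf.getvalue()
    print(unexpected_flags(clean))  # []
    print(unexpected_flags(set_central_flag(clean, 0)))  # [('archive/data.pkl', 1)]
```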

Affected Resources:

Product: picklescan

Affected Versions: Versions before 0.0.23

Access Level: Exploitation requires an attacker to provide a crafted PyTorch model archive with modified ZIP flag bits to the victim, who must then load the file using PyTorch's torch.load().

Risk Rating:

Severity: Medium (CVSS Base Score: 5.3)

Impact: Low on Integrity and Availability

Exploitability: High (Network attack vector, low attack complexity, no privileges required, user interaction required)

Recommendations:

Patch Application: Upgrade to picklescan version 0.0.23 or later, which addresses this vulnerability by implementing stricter ZIP file handling and validation to prevent detection bypasses.

Mitigation Steps:

Avoid loading PyTorch model archives from untrusted sources.

Implement additional validation checks to ensure the integrity and authenticity of ZIP archives before loading.

Consider using alternative serialisation formats that cannot execute code when loaded, such as safetensors for model weights or JSON or XML for configuration data, when possible.
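When a pickle-based format cannot be avoided, Python's pickle documentation describes restricting which globals an Unpickler may resolve by overriding find_class. The sketch below applies that pattern with a small allowlist; SAFE_GLOBALS, RestrictedUnpickler and restricted_loads are hypothetical names, the allowlist contents are an assumption, and the code does not understand PyTorch's archive layout (PyTorch's torch.load(weights_only=True) applies a similar restriction natively). It narrows the risk rather than eliminating it.

```python
import io
import pickle

# Hypothetical allowlist of (module, name) globals the loader may resolve.
SAFE_GLOBALS = {
    ("collections", "OrderedDict"),
}


class RestrictedUnpickler(pickle.Unpickler):
    """Unpickler that refuses to import any global outside SAFE_GLOBALS."""

    def find_class(self, module, name):
        if (module, name) in SAFE_GLOBALS:
            return super().find_class(module, name)
        raise pickle.UnpicklingError(f"global '{module}.{name}' is forbidden")


def restricted_loads(data):
    """Deserialise pickle bytes using the allowlist above."""
    return RestrictedUnpickler(io.BytesIO(data)).load()


if __name__ == "__main__":
    import os

    class Payload:
        # __reduce__ tells pickle to call os.system(...) at load time: the classic attack.
        def __reduce__(self):
            return (os.system, ("echo this should never run",))

    print(restricted_loads(pickle.dumps({"weights": [0.1, 0.2]})))  # {'weights': [0.1, 0.2]}
    try:
        restricted_loads(pickle.dumps(Payload()))
    except pickle.UnpicklingError as exc:
        print("blocked:", exc)
```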