Fortnightly Digest 21 January 2025

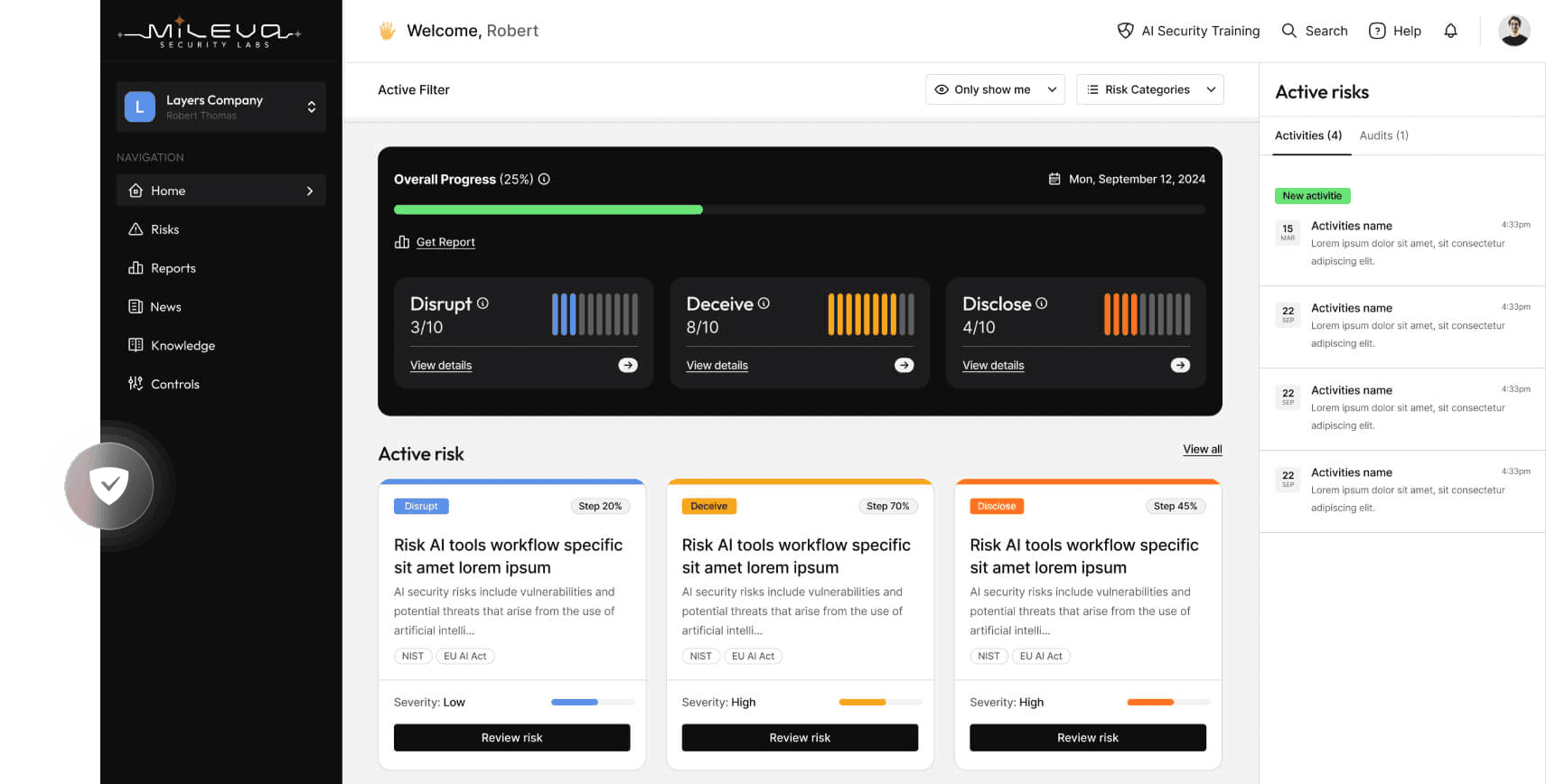

Welcome to the first edition of the Mileva Security Labs AI Security Fortnightly Digest!

The digest article contains individual reports that are labelled as follows:

[Vulnerability]: Notifications of vulnerabilities, including CVEs and other critical security flaws, complete with risk assessments and actionable mitigation steps.

[News]: Relevant AI-related news, accompanied by commentary on the broader implications.

[Research]: Summaries of peer-reviewed research from academia and industry, with relevance to AI security, information warfare, and cybersecurity. Limitations of the studies and key implications will also be highlighted.

Additionally, reports will feature theme tags such as [Safety] and [Cyber], providing context and categorisation to help you quickly navigate the topics that matter most.

We focus on external security threats to AI systems and models, however sometimes we may include other reports where relevant to the AI security community.

We are so grateful you have decided to be part of our AI security community, and we want to hear from you! Get in touch at contact@mileva.com.au with feedback, questions and comments.

Now.. onto the article!

[Research] Jan 13: Take-Aways From Microsoft’s Red-Teaming of 100 Generative AI Products

This is a summary of Microsoft’s red-team research findings from testing 100 generative AI products, published January 13, 2025. Further details can be found in their blog post (Microsoft Security Blog) and whitepaper (AIRT Lessons Whitepaper).

TLDR:

Microsoft Azure AI Red Team (AIRT) conducted red-teaming exercises on 100 generative AI products, uncovering common vulnerabilities and outlining fundamental considerations for building safer AI systems. (One more sentence about their findings found that x, y, z).

How it Works:

Red-teaming is a structured method for testing systems by simulating adversarial behaviours to uncover vulnerabilities. Microsoft’s Azure AI Red Team (AIRT) assessed 100 generative AI systems, including generative text, image, and code products. The team tested scenarios involving:

Security failures: Unauthorised data access, model extraction, and adversarial attacks, including prompt injections.

Safety failures: Biased, harmful, or unsafe outputs when AI systems interact with diverse inputs or contexts.

Misuse: Models used for unintended purposes, such as generating harmful content or automating misinformation campaigns.

Benign failures: Errors caused by unexpected but non-malicious user interactions, exposing gaps in system design or training, resulting in unpredictably or suboptimal results.

Their takeaways include:

To secure the AI, you must know the AI: The first step in securing AI is deeply understanding what the system is designed to do and where it is deployed. This includes anticipating how misuse or unintentional failures may arise.

Low-complexity attacks are highly successful: Many attacks do not require computing gradients or understanding a model’s architecture. Simple prompt engineering and trial-and-error methods can still yield harmful or unintended outputs.

Red-teaming alone is not enough assurance: Red-teaming is exploratory and focuses on identifying unforeseen vulnerabilities rather than assessing performance against predefined benchmarks.

Leverage automation: Automated tools and frameworks help scale red-teaming efforts, covering a broader risk landscape more efficiently.

Maintain human insight: While automation is critical, human creativity and contextual understanding are irreplaceable in detecting nuanced issues.

AI safety concerns are pervasive: Ethical concerns, including systemic bias and harmful outputs, remain difficult to quantify but must be addressed proactively.

LLMs increase attack surfaces: Large language models amplify existing vulnerabilities (e.g., phishing, misinformation) and introduce new challenges like prompt injection attacks and sensitive data leaks.

Security is dynamic: AI security is an ongoing process. As systems evolve and adversaries adapt, safeguards must continuously improve.

Implications:

Generative AI products are increasingly integral to business operations, yet they house consistently and easily exploitable vulnerabilities. Organisations should conduct red-teaming, penetration testing, or other kinds of adversarial vulnerability assessments against their AI systems, to identify weaknesses before they can be exploited in real-world scenarios.

AI developers and organisations bear increasing responsibility for the societal impact of their technologies. Ignoring safety concerns could lead to significant legal liabilities and reputational damage.

As organisations continue to race to integrate AI technologies into critical operations, security cannot be an afterthought. Traditional secure development practices and modern generative AI safeguards must be central to the development pipeline rather than reactive measures.

[Research] Jan 14: Adaptive Security Framework For LLMs by Lakera

This is a summary of research by Pfister et al., titled "Gandalf the Red: Adaptive Security for LLMs," released on January 14, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.07927.

TLDR:

Pfister and colleagues from AI security company, Lakera, introduce D-SEC, a dynamic security utility threat model, alongside Gandalf, a crowd-sourced red-teaming platform. These tools aim to improve the security of large language model (LLM) applications against prompt attacks by balancing defence mechanisms with user utility.

How it Works:

Prompt attacks exploit vulnerabilities in LLM applications by manipulating input prompts to induce unintended behaviours. Traditional defences often fail to account for the adaptability of attackers and overly strict guardrails can inadvertently degrade the user experience. To address these challenges, the researchers propose two key innovations:

D-SEC (Dynamic Security Utility Threat Model): This model differentiates between attackers and legitimate users, modelling multi-step interactions to optimise the balance between security measures and user utility. By explicitly considering the dynamic behaviour of adversaries, D-SEC enables the development of defences that are both effective and user-friendly.

Gandalf Platform: A crowd-sourced, gamified red-teaming platform designed to generate realistic and adaptive attack examples. Gandalf allows for the collection of diverse prompt attacks, accumulating data to assess and improve the effectiveness of various defence strategies. The researchers have released a dataset of 279,000 prompt attacks collected via Gandalf to support further research in this area.

The research demonstrates that staged strategies like restricting application domains, implementing defence-in-depth, and adopting adaptive defences are effective in building secure and user-friendly LLM applications.

Implications:

The introduction of D-SEC and the Gandalf platform provides a structured approach to developing and evaluating security measures for LLM applications. By considering the dynamic nature of adversarial behaviour and the importance of user utility, these tools assist developers in creating applications that are both secure and user-centric. The publicly available dataset of prompt attacks serves as a valuable resource for the research community to further explore and address security challenges in LLMs.

The dataset released by Lakera can be found here: https://platform.lakera.ai/docs/datasets. If you want to test out your own prompt engineering skills and haven’t had a shot at playing Gandalf yourself yet, you should! The Mileva team have been big fans since its inception and we’ve even worked with them to create custom Gandalfs for special events.

[Research] Jan 16: Watermarking Framework Against Model Extraction Attacks

This is a summary of research by Xu et al., titled "Neural Honeytrace: A Robust Plug-and-Play Watermarking Framework Against Model Extraction Attacks," released on January 16, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.09328.

TLDR:

Xu and colleagues introduce Neural Honeytrace, a novel watermarking framework designed to protect machine learning models from extraction attacks. Unlike traditional methods that require additional training and are vulnerable to adaptive attacks, Neural Honeytrace offers a training-free, plug-and-play solution that embeds watermarks into models. This approach provides the ability to assert ownership and detect unauthorised model usage with minimal overhead.

How it Works:

Model extraction attacks involve adversaries querying a machine learning model to reconstruct its functionality, and create an unauthorised copy often for private use, redistribution or exploitation. Traditional defences, such as triggerable watermarks, embed specific patterns into the model's outputs to claim ownership. However, these methods often require retraining the model, incurring computational costs, and may not withstand attacks aimed at removing or bypassing the watermark.

Neural Honeytrace addresses these limitations through two key innovations:

Similarity-Based Training-Free Watermarking: This technique allows for the embedding of watermarks without additional training. By analysing the model's decision boundaries, Neural Honeytrace identifies natural input patterns that can serve as watermarks. These patterns are selected based on their similarity to the model's existing data distribution, so that the watermarking process does not alter the model's performance.

Distribution-Based Multi-Step Watermark Information Transmission: Neural Honeytrace can employ a strategy that transmits watermark information over multiple steps. This approach disperses the watermark across various layers and components of the model, making it more challenging for adversaries to detect and remove the embedded watermark.

Experiments conducted on four datasets demonstrate that Neural Honeytrace outperforms previous methods in both efficiency and resilience to adaptive attacks. Notably, it reduces the average number of samples required to confidently assert ownership from 12,000 to 200, without incurring any training costs.

Implications:

Neural Honeytrace offers a practical and effective solution for model owners to protect their intellectual property in Machine Learning as a Service (MLaaS) platforms. By providing a training-free watermarking method, it lowers the technical barrier and cost for implementing security measures against model extraction attacks. This advancement is particularly beneficial for organisations lacking extensive computational resources to retrain models for IP security purposes.

Limitations:

While Neural Honeytrace shows significant improvements over existing methods, its effectiveness may vary depending on the complexity of the model and the nature of the data. The framework has been tested on highly specific datasets, and further research is needed to confirm its generalisability across diverse applications.

[Vulnerability] Jan 10: CVE-2024-12606: Unauthorised Modification of Data in AI Scribe WordPress Plugin

This is a summary of CVE-2024-12606, a vulnerability related to unauthorised modification of data in the AI Scribe WordPress plugin. More details can be found at: CVE-2024-12606.

TLDR:

The AI Scribe – SEO AI Writer, Content Generator, Humanizer, Blog Writer, SEO Optimizer, DALLE-3, AI WordPress Plugin ChatGPT (GPT-4o 128K) plugin for WordPress is vulnerable to unauthorized modification of data due to a missing capability check on the engine_request_data() function in all versions up to, and including, 2.3. This makes it possible for authenticated attackers, with Subscriber-level access and above, to update plugin settings. This could support entry to affect AI security threats.

Affected Resources

Plugin: AI Scribe – SEO AI Writer, Content Generator, Humanizer, Blog Writer, SEO Optimizer, DALLE-3, AI WordPress Plugin ChatGPT (GPT-4o 128K).

Versions: All versions up to and including 2.3.

Access Level: Exploitation requires authenticated access with Subscriber-level privileges or above.

Risk Rating:

Severity: Medium (estimated CVSS Base Score: 4.3).

Impact: Moderate on Integrity (unauthorised configuration changes).

Exploitability: Medium

Limitations: Requires authenticated access, limiting the scope of potential attackers.

Recommendations:

Update the AI Scribe plugin to a patched version (if available). Monitor the plugin's changelog or WordPress security advisories for the release of a fix.

If no timely patch is available, consider temporarily disabling the AI Scribe plugin or replacing it with a secure alternative.

[Vulnerability] Jan 11: CVE-2024-49785: Authenticated Cross-Site Scripting in IBM Watson

This is a summary of CVE-2024-49785, a cross-site scripting (XSS) vulnerability in IBM watsonx.ai. More details can be found at: CVE-2024-49785.

TLDR:

IBM watsonx.ai 1.1 through 2.0.3 and IBM watsonx.ai on Cloud Pak for Data 4.8 through 5.0.3 is vulnerable to cross-site scripting. This vulnerability allows an authenticated user to embed arbitrary JavaScript code in the Web UI thus altering the intended functionality potentially leading to credentials disclosure within a trusted session.

Affected Resources:

Product: IBM watsonx.ai

Versions:

IBM watsonx.ai: 1.1 through 2.0.3.

IBM watsonx.ai on Cloud Pak for Data: 4.8 through 5.0.3.

Access Level: Exploitation requires an authenticated user.

Risk Rating:

Severity: Medium (estimated CVSS Base Score: 5.4).

Impact: High on Confidentiality (credentials disclosure), Low on Integrity (minimal modification potential), and None on Availability.

Exploitability: Medium (low attack complexity but requires authenticated access).

Limitations: The attacker must have valid credentials and an active session to embed malicious JavaScript.

Recommendations:

Apply patches provided by IBM to affected versions of watsonx.ai and Cloud Pak for Data. Check IBM's support portal for the latest updates and advisories.

Configure a CSP to restrict the execution of unauthorised scripts and mitigate the impact of XSS attacks.

Implement input sanitisation measures to prevent malicious scripts from being injected into the Web UI.

If no timely patch is available, consider temporarily disabling the use of Watsonx.ai.

[News] [Defence] Jan 8: US Sanctions on Chinese AI Companies

This is a summary of the U.S. government's decision to blacklist additional Chinese AI and surveillance companies over alleged military ties and human rights concerns, announced on January 7, 2025

TLDR:

The United States has expanded its blacklist of Chinese tech firms, adding dozens of companies, including those specialising in AI, surveillance, and semiconductors. The move is part of an effort to counter China's alleged military-civil fusion strategy, which the U.S. claims links these companies to the Chinese military. Many of these firms develop or deploy AI technologies that are alleged to contribute to advanced surveillance systems, some of which are linked to mass surveillance and repression in regions like Xinjiang, specifically targeting minority groups.

The blacklist prohibits U.S. businesses from exporting critical technology and software to these companies, escalating tensions between the two nations and limiting Chinese access to fundamental components for AI and semiconductor development. The blacklisting reflects growing global ethical concerns about how AI is deployed, particularly in systems designed to control populations.

[News] [Safety] Jan 8: LLMs as Enablers of Research With Malicious Intent

This is a summary and discussion on the role AI played in the Cybertruck bombing in Las Vegas on January 8, 2025.

TLDR:

A man driving a Tesla Cybertruck intentionally detonated explosives in the vehicle near a government facility in Las Vegas. The attack caused significant damage and raises concerns about the accessibility and misuse of AI technologies. Investigations reveal that the perpetrator used ChatGPT to research and plan aspects of the attack. ChatGPT reportedly provided information on explosive recipes, where to acquire the materials, and tactical methods, helping the perpetrator refine his plans.

The perpetrator’s use of ChatGPT implicates LLMs as inadvertently assisting in malicious activities. Despite safeguards implemented in such models to restrict harmful outputs, the suspect was able to bypass these guardrails by crafting prompts to elicit their desired information. Though much of the information he acquired could be found through manual research, LLMs democratise the ability for individuals to access the information they require, in easily comprehensible and actionable formats, within seconds.

Companies offering AI services may face growing scrutiny over their responsibility for misuse. While LLM providers are often legally shielded from liability, public and regulatory pressures may lead to stricter accountability standards if more events like this occur. Additionally, policymakers may need to address how LLMs are governed.

[Research] Jan 5: Layer-AdvPatcher: Novel LLM Jailbreak Defence

This is a summary of research by Ouyang et al., "Layer-Level Self-Exposure and Patch: Affirmative Token Mitigation for Jailbreak Attack Defense", released January 5th 2025. The paper is accessible at: https://arxiv.org/pdf/2501.02629.

TLDR:

This research team has released a novel defence mechanism against jailbreak attacks on large language models. It uses a concept called LLM unlearning, which refers to methods for selectively erasing undesirable behaviours from a trained model without requiring complete retraining.

How it works:

Ouyang and colleagues released their research on “Layer-Level Self-Exposure and Patch: Affirmative Token Mitigation for Jailbreak Attack Defense”, a novel defence mechanism against jailbreak attacks on large language models (LLMs). Their solution, Layer-AdvPatcher, leverages the concept of LLM unlearning, which refers to methods for selectively erasing undesirable behaviours from a trained model without requiring complete retraining. Their approach focuses on identifying and mitigating a toxic region within the LLM—specific layers disproportionately prone to producing Affirmative Tokens like "Sure," "Certainly," or "Absolutely" when confronted with harmful prompts. These affirmative tokens often serve as the model’s implicit agreement to unsafe instructions, increasing the likelihood of harmful outputs.

Layer-AdvPatcher utilises self-augmented datasets, crafted by exploiting the vulnerabilities of the identified toxic region/layers. These datasets are then used to realign these layers via localised unlearning, effectively neutralising the tendency for affirmative token responses while preserving performance on benign queries. The pipeline for Layer-AdvPatcher is comprised of three critical steps:

Toxic Layer Locating: Hidden states across layers are decoded to identify layers with high probabilities of producing affirmative tokens. These toxic layers are disproportionately aligned with unsafe instruction-following behaviour.

Adversarial Augmentation: Using adversarial fine-tuning, the toxic layers are pushed to generate diverse harmful outputs. Perturbations are applied to standard datasets by replacing affirmative tokens in prompts to maximise vulnerability exposure, which expands the dataset for subsequent realignment.

Toxic Layer Editing: A layer-specific unlearning method updates the model’s initialisation parameters using the augmented training set, targeting exposed vulnerabilities while leaving non-toxic regions unaffected.

The team open-sourced the specialised datasets they generated from the toxic layers of models like Llama2-7B and Mistral-7B.

Implications:

Industries deploying LLMs in high-stakes environments, such as healthcare, legal, and customer service, can benefit from this technique by reducing the likelihood of generating harmful, unethical, or reputation-damaging content. The ability to target specific model layers rather than applying broad-spectrum defences offers increased precision and preserves operational integrity, minimising performance degradation on benign use cases.

Limitations:

While the results are promising, the researchers recognise limitations. First, Layer-AdvPatcher has been tested on mid-scale models (Llama2-7B, Mistral-7B) but not on larger-scale architectures (e.g., Llama3-13B). Scaling the approach may require significant computational resources and time. Additionally, while the framework demonstrates strong potential for modular adaptation, real-world implementation on highly complex models or production systems remains untested.

Further concerns include the ethical implications of open-sourcing the dataset derived from identified toxic layers. Although this supports research reproducibility, malicious actors could potentially exploit the dataset to craft more sophisticated jailbreak attacks or discover new vulnerabilities.

At Mileva we have noticed a trend in adversarial reproductions of academic attacks shortly following the release of new research papers. It is important your AI security team is aware of emerging research and proactively pushing mitigations.

[Research] Jan 5: Vulnerabilities and Licensing Risks in LLM Pre-Training Datasets

This is a summary of research by Jahanshahi and Mockus, "Cracks in The Stack: Hidden Vulnerabilities and Licensing Risks in LLM Pre-Training Datasets", released January 5th 2025. The paper is accessible at:https://arxiv.org/pdf/2501.02628.

TLDR:

Jahanshahi and Mockus, in their paper "Cracks in The Stack: Hidden Vulnerabilities and Licensing Risks in LLM Pre-Training Datasets", introduce an automated source code autocuration technique aimed at improving the quality of training data for Large Language Models (LLMs), without requiring tedious manual curation. Their method leverages the complete version histories of open-source software (OSS) projects to identify and filter out code samples that have been modified, particularly those addressing bugs or vulnerabilities. By analysing "the Stack" v2 open-source dataset, which comprises nearly 600 million code samples for training LLMs, they discovered that 17% of the code versions have newer iterations, with 17% of these updates representing bug fixes, including 2.36% addressing known Common Vulnerabilities and Exposures (CVEs). Additionally, they found that 58% of the code samples were never modified after creation, suggesting minimal or no use, and identified challenges related to misidentified code origins leading to the inclusion of non-permissively licensed code, raising compliance concerns.

Implications:

Models trained on outdated, buggy, or non-compliant code included in their pre-training datasets may inadvertently learn and propagate these insecure coding patterns. This is particularly concerning for industries relying on AI-driven code generation tools, as poor training data could compromise the security and integrity of outputted code. The inclusion of code with unclear or restrictive licensing could also result in legal and ethical issues over intellectual property disputes. Automated curation introduces a necessary level of quality control by attempting to remove code with questionable origins and quality.

Limitations

While the proposed autocuration technique offers a systematic approach to enhancing dataset quality, its effectiveness is contingent upon the accuracy and completeness of version histories in OSS projects. Projects with incomplete or poorly documented histories may not benefit fully from this method. Additionally, the approach may not account for vulnerabilities that were never formally addressed or documented, or unknown vulnerabilities, potentially leaving residual risks in the training dataset. As we say at Mileva, the safest kind of vulnerability is the one you know about.

[Research] [Cyber] Jan 7: CONTINUUM: Novel Detection For APT Attacks

This is a summary of research by Bahar et al., titled "CONTINUUM: Detecting APT Attacks through Spatial-Temporal Graph Neural Networks", released on January 7, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.02981.

TLDR:

Bahar and colleagues present CONTINUUM, an Intrusion Detection System (IDS) designed to detect Advanced Persistent Threats (APTs) using a Spatio-Temporal Graph Neural Network (STGNN) Autoencoder. APTs are multi-stage cyber-attacks performed by skilled adversaries that traditional IDS often miss due to their low-profile nature. Provenance graphs, which track sequences of system events (e.g., file creation, process execution), are used to represent interactions between entities.

STGNNs, an extension of Graph Neural Networks (GNNs) are uniquely suited for analysing both the spatial relationships in these graphs and their temporal evolution, making them effective for tracking the staged and distributed nature of APTs. Graph encoders within CONTINUUM embed this information, enabling the system to model how malicious activity develops over time.

The system uses federated learning, where training occurs across multiple decentralised devices or servers without transferring raw data. This helps maintain data privacy, as local data stays on-site while encrypted model weights are shared. CONTINUUM uses homomorphic encryption, which allows computations on encrypted data without requiring decryption. Evaluation results indicate that CONTINUUM effectively detects APTs with lower false positive rates and optimised resource usage compared to existing methods.

Implications

By analysing spatial and temporal patterns, CONTINUUM can detect sophisticated attack behaviours that evade traditional IDS. Federated learning allows organisations to collaboratively train IDS models without exposing raw data, which is particularly relevant for environments with strict privacy requirements. Homomorphic encryption provides an additional layer of security, allowing organisations to share encrypted model updates without revealing sensitive information. This process is useful for sectors like finance and healthcare, where data privacy is critical.

Limitations

CONTINUUM’s reliance on provenance graphs and STGNNs makes it dependent on high-quality, graph-ready datasets that fully represent APT behaviours across multiple stages. However, current datasets for APT detection are often outdated, insufficiently documented, and represent only a few stages of APT attacks. These datasets are rarely formatted as graphs or temporal snapshots, requiring extensive pre-processing and conversion before they can be used effectively for GNN-based systems like CONTINUUM. Federated learning with homomorphic encryption also introduces computational overhead, potentially slowing down the system in environments with limited resources. As with any system, there is the potential for adversaries to pivot and develop new tactics that could evade detection.

[Research] [Blockchain] Jan 8: Consensus Protocol for Federated Learning Central Authority

This is a summary of research by Liu et al., titled "Proof-of-Data: A Consensus Protocol for Collaborative Intelligence", released on January 8, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.02971.

TLDR:

Liu and colleagues introduce Proof-of-Data (PoD), a blockchain-based consensus protocol designed to facilitate decentralised federated learning without a central coordinator. Federated learning enables multiple participants to collaboratively train machine learning models while keeping their data localised, preserving data sovereignty and privacy. However, traditional federated learning often relies on a central authority to manage the process, which can be a single point of failure and may not be suitable for all collaborative scenarios.

PoD implements a decentralised framework that is Byzantine fault-tolerant, meaning it can tolerate up to one-third of participants acting maliciously, unpredictably, or incompetently without compromising the integrity of the collaborative model training. Byzantine faults refer to any participant behaving outside the protocol, either due to errors or deliberate sabotage. PoD separates model training from contribution accounting, combining the efficiency of asynchronous learning (where updates are made independently) with the finality of epoch-based voting, a mechanism that collects votes from participants over time to validate updates.

To prevent false reward claims through data forgery, PoD incorporates a privacy-aware data verification mechanism that assesses each participant's contribution, ensuring rewards are allocated fairly based on genuine input. After evaluation, PoD achieves model training performance comparable to centralised federated learning systems while maintaining trust and fairness in a decentralised environment.

Implications:

Decentralisation through the removal of a central coordinating entirety increases the resilience of systems by eliminating single points of failure and reducing the risk of centralised data breaches. By fairly allocating rewards based on verified contributions, PoD incentivises a broader range of participants, including smaller organisations and individuals, to contribute data. This democratisation of participation helps build more representative and robust models, addressing biases that can arise in less diverse training environments.

Limitations:

Though PoD addresses key challenges of decentralisation and Byzantine fault tolerance, its reliance on blockchain technology introduces scalability and performance constraints, especially in environments with many participants. Privacy-preserving data verification and consensus mechanisms require significant computational resources, which may affect efficiency. Additionally, the assumption that up to one-third of participants may act maliciously (Byzantine) may not hold in all real-world scenarios, potentially challenging the system’s performance in high-risk environments.

[Research] Jan 10: Data-Free Trojan Detection and Neutralisation in SSL Models

This is a summary of research by Liu et al., titled "TrojanDec: Data-free Detection of Trojan Inputs in Self-supervised Learning", released on January 10, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.04108.

TLDR:

Liu and colleagues introduce TrojanDec, a framework to detect and neutralise malicious inputs—referred to as "trojans"—in self-supervised learning (SSL) models without requiring access to the original training data. In SSL, models learn patterns from unlabeled data to create features for downstream tasks, like image classification or text generation. Attackers can exploit this process by embedding hidden triggers into the model during training. When the model encounters inputs containing these triggers, it produces incorrect or adversarial outputs.

TrojanDec analyses how the model processes inputs, using masked versions of suspected inputs, selectively removing certain components or features, and comparing the model's behaviour on these variations. If a significant change in the model's output is observed, this suggests the presence of a trojan. Once detected, TrojanDec employs diffusion-based restoration, a method that incrementally replaces corrupted parts of the input with reconstructed values to "fix" the input and remove the malicious trigger.

Evaluations show TrojanDec consistently detects trojaned inputs and restores them to a clean state, outperforming existing methods in both detection rates and accuracy of restored inputs.

Implications:

TrojanDec offers a way to improve the security of SSL models by providing a reliable method to detect and mitigate trojan attacks without needing access to the original training data. This capability is useful for users who utilise pre-trained models from external sources, oftwen without knowledge of their training process or data quality. TrojanDec offers a way to validate and clean inputs during operation, safeguarding these models from manipulation.

Limitations:

While TrojanDec shows promising results, its effectiveness may vary depending on the complexity of the trojan attack and the architecture of the SSL model. Additionally, the method's performance in real-world scenarios with diverse data distributions requires further investigation. The computational overhead associated with analysing and restoring inputs could also impact the efficiency of the system, particularly in resource-constrained environments. Additionally, while TrojanDec can remediate inputs, it does not prevent trojan injection during the model training phase, which remains a separate challenge.

[Research] Jan 10: Enhancing Backdoor Attack Stealthiness in Model Parameters

This is a summary of research by Xu et al., titled "Towards Backdoor Stealthiness in Model Parameter Space," released on January 10, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.05928.

TLDR:

Xu and Colleagues identify a vulnerability in current backdoor attacks, which, despite being stealthy in input and feature spaces, can be detected through model parameter analysis. They introduce "Grond," a novel attack method employing Adversarial Backdoor Injection (ABI) to enhance stealthiness across input, feature, and parameter spaces. This development challenges current defences by making backdoor attacks harder to identify without compromising the model's benign functionality.

How it Works

The study investigates how backdoor attacks exploit different “spaces” of a machine learning model's operations. Backdoor attacks are often designed to be imperceptible in the input space, which consists of the raw data provided to the model, such as images or text. They may also disguise malicious behaviours within the feature space, where the model processes internal representations of the input data. However, even when attacks remain hidden in these two spaces, they often leave detectable patterns in the parameter space, which includes the learned weights and biases that define the model’s behaviour.

Grond, the proposed attack method, addresses weaknesses in backdoor attacks by focusing on parameter space. Specifically, it introduces Adversarial Backdoor Injection (ABI), a technique designed to mask the changes made to parameters during the backdoor embedding process. Unlike traditional approaches that leave detectable parameter traces, ABI uses adversarial optimisation to make changes subtle enough to evade detection. Adversarial optimisation systematically modifies the parameters to minimise the statistical difference between a clean model and a backdoored one, all while embedding the malicious behaviour effectively.

Grond operates in three steps:

The attack begins by creating adversarial examples, which are subtle modifications of inputs designed to trigger the backdoor. These examples are carefully crafted so that the malicious behaviour only activates under specific conditions while remaining harmless in other cases.

These adversarial examples are used to fine-tune the model. During this process, the backdoor is embedded by altering the model’s parameters. However, ABI it used to make changes that remain minimal and aligned with the parameter distribution of a clean model to avoid detection.

Finally, the parameters of the backdoored model are iteratively adjusted using statistical techniques to align closely with those of a clean model. This step refines the backdoor’s embedding, hiding it deep within parameter space while preserving the model’s performance on normal tasks.

Experiments tested Grond against 12 existing backdoor methods and 17 defensive strategies, showing its capacity to evade detection across multiple spaces while retaining high performance on benign tasks.

Implications:

Grond's stealthiness poses significant security challenges. By attacking all three spaces with particular attention to parameters, it bypasses many current defence mechanisms designed for input or feature-based detection. More robust detection methods must be developed that consider parameter space anomalies, ensuring the integrity of machine learning models in critical applications.

Limitations:

Grond has primarily been validated on small to mid-sized models, such as ResNet-18 and BERT-base, which limits insights into its behaviour on larger architectures. Additionally, the computational overhead for fine-tuning parameters using ABI can be substantial. The researchers also acknowledge ethical risks associated with open-sourcing their attack framework, as it could be exploited by malicious actors to bypass defences or develop similar techniques.

AI security teams should be aware of the evolving sophistication in attack methodologies, as exemplified by Grond. Proactive monitoring and adaptation to new, advanced threats are essential to protect AI systems against covert manipulations.

[Research] Jan 11: Method for Detecting Unauthorised Use of Images in AI Model Training

This is a summary of research by Bohacek and Farid, titled "Has an AI model been trained on your images?", released on January 11, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.06399.

TLDR:

Bohacek and Farid introduce a method to determine if specific images have been used in the training of generative AI models. Their computationally efficient technique operates without prior knowledge of the model's architecture or weights, enabling creators to audit models for unauthorised use of their intellectual property.

How it Works:

Generative AI models are trained on vast datasets, often scraping images from the internet without creators' consent. This practice raises concerns about copyright infringement and fair use. The proposed method allows creators to verify if their images were part of a model's training data through a process known as black-box membership inference, which assesses whether specific data points were included in a model's training set without requiring access to the model's internal parameters.

The method involves:

Querying the Model: The suspect model is prompted to generate images based on descriptions related to the creator's work.

Comparing Outputs: The generated images are then compared to the creator's original images using similarity metrics such as perceptual distance measures. These metrics are designed to detect subtle visual similarities that indicate the model has seen and learned from the original images.

Assessing Membership: f the similarity scores between the generated images and the original ones exceed a statistically significant threshold, it suggests that the creator's images were part of the training dataset.

This approach does not require access to the model's internal architecture or weights, making it applicable to a wide range of generative AI models.

Implications:

This method provides creators with a way to audit AI models for potential misuse of their images, supporting efforts to protect intellectual property rights. It also contributes to the growing discussion on ethical AI development and the need for transparency in training data collection and usage.

Limitations:

The effectiveness of this method may vary depending on the complexity of the model and the diversity of its training data. Additionally, Generative models can produce outputs that resemble known styles or patterns without having directly seen specific images, especially if they’ve been trained on large and diverse datasets. This method will be most effective for detecting clear cases where outputs bear a striking resemblance to unique or recognisable elements of a work. For generic styles or themes, the method’s reliability diminishes.Thus, high similarity scores between generated outputs and a creator’s work don’t guarantee their images were used in training.

Further research is needed to assess its applicability across different types of generative AI models and to refine its accuracy in detecting unauthorised use of images.

If you’re concerned about the unauthorised use of your data in AI model training, this method can help you identify whether your IP has been misused. Where possible, check the data collection and usage policies of organisations creating generative AI models, and websites where you post your work - some allow you to opt out of having your content included in their datasets. Additionally, consider watermarking your images in ways that make them harder to use in training data while maintaining their appearance online.

[Research] [Cyber] Jan 12: Using GenAI in Penetration Testing

This is a summary of research by López Martínez et al., titled "Generative Artificial Intelligence-Supported Pentesting: A Comparison between Claude Opus, GPT-4, and Copilot," released on January 12, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.06963.

TLDR:

López Martínez and colleagues assess the capabilities of leading generative AI tools—Claude Opus, GPT-4, and Copilot—in supporting the penetration testing (pen-testing) process as outlined by the Penetration Testing Execution Standard (PTES). Their findings indicate that while these tools cannot fully automate pen-testing, they significantly enhance efficiency and effectiveness in specific tasks, with Claude Opus demonstrating superior performance in the evaluated scenarios.

How it Works:

Penetration testing involves simulating cyber attacks on systems to identify vulnerabilities. The Penetration Testing Execution Standard (PTES) provides a framework for conducting these assessments across phases such as pre-engagement interactions, intelligence gathering threat modeling, vulnerability analysis, exploitation, post-exploitation, and reporting.

In this study, the researchers evaluated three generative AI tools—Claude Opus, GPT-4 (via ChatGPT), and Copilot—across all PTES phases within a controlled virtualised environment. Each tool was tasked with supporting core activities for each phase, such as generating reconnaissance strategies, identifying potential vulnerabilities, suggesting exploitation techniques, and drafting reports.

The findings revealed that all three tools contributed positively to the pen-testing process, offering valuable insights and automating routine tasks. Notably, Claude Opus consistently outperformed GPT-4 and Copilot in generating contextually appropriate and precise outputs.

Implications:

By automating certain aspects of the PTES framework, these tools can assist ethical hackers in automatable aspects of the pen-testing process. They allow professionals to focus on more complex analysis and decision-making, potentially speeding up the overall workflow. However, AI tools should not be solely relied upon, as human expertise remains critical for interpreting results, understanding specific client objectives, and assessing the business context.

Limitations:

The AI tools evaluated are subject to inherent limitations, such as outdated training data, lack of contextual understanding, potential biases and hallucinations. These issues can impact the relevance and accuracy of their outputs. Additionally, the tools cannot fully replace the expertise of human pen-testers, particularly in validating AI-generated suggestions, performing manual security testing, and tailoring recommendations to a client's unique business context.

AI-assisted pen-testing should not be a substitute for engaging qualified security professionals. Non-security practitioners, or junior penetration testers, attempting to perform or accelerate tests using these tools may overlook critical security risks, misinterpret results, or introduce other errors into the process.