Fortnightly Digest 4 February 2025

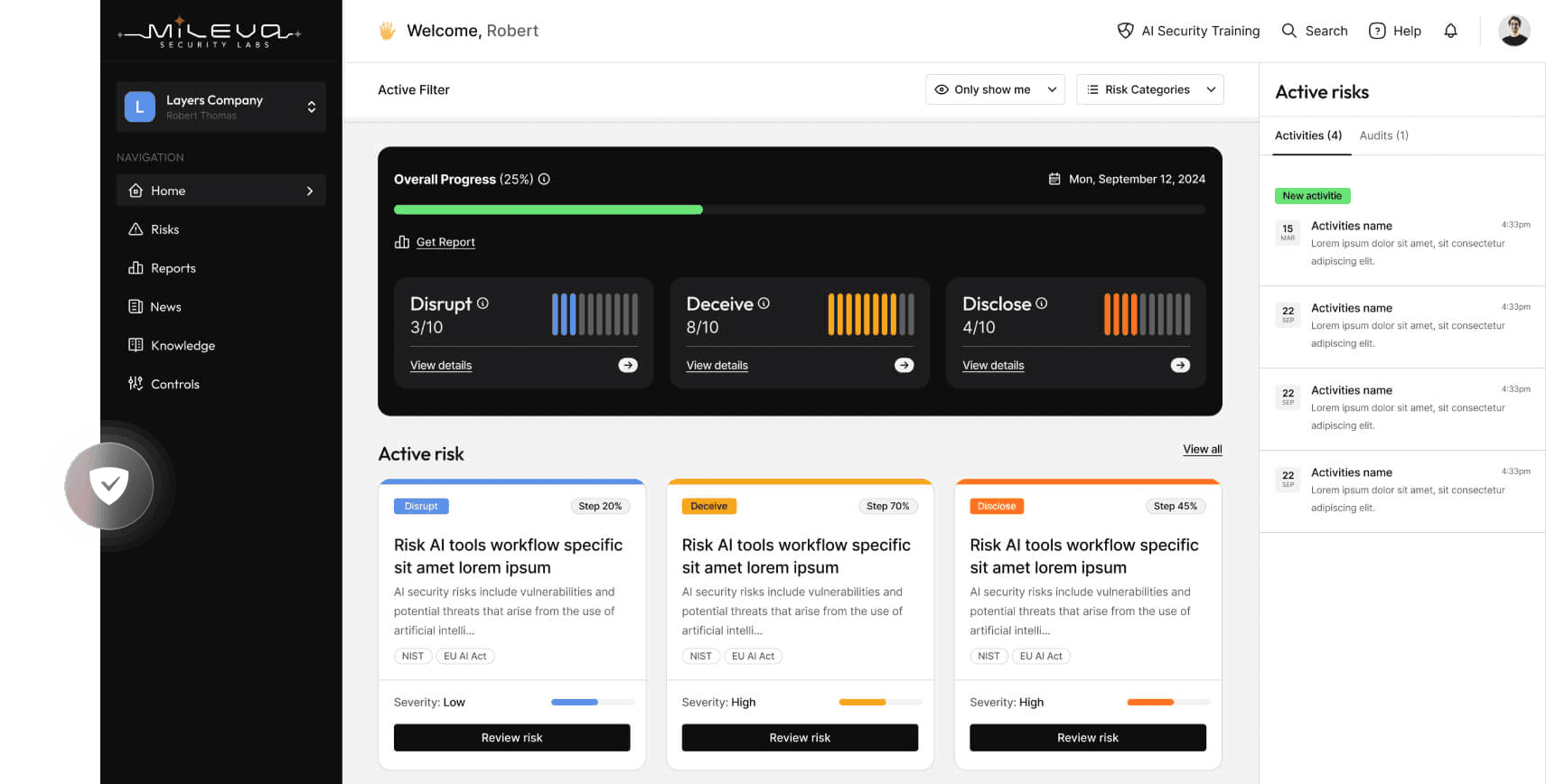

Welcome to the second edition of the Mileva Security Labs AI Security Fortnightly Digest! As the digest is still new, if you haven’t yet read the introduction, check it out here.

The digest article contains individual reports that are labelled as follows:

[Vulnerability]: Notifications of vulnerabilities, including CVEs and other critical security flaws, complete with risk assessments and actionable mitigation steps.

[News]: Relevant AI-related news, accompanied by commentary on the broader implications.

[Research]: Summaries of peer-reviewed research from academia and industry, with relevance to AI security, information warfare, and cybersecurity. Limitations of the studies and key implications will also be highlighted.

Additionally, reports will feature theme tags such as [Safety] and [Cyber], providing context and categorisation to help you quickly navigate the topics that matter most.

We focus on external security threats to AI systems and models; however, we may sometimes include other reports where relevant to the AI security community.

We are also proud supporters of The AI Security Podcast; check it out here.

We are so grateful you have decided to be part of our AI security community, and we want to hear from you! Get in touch at contact@mileva.com.au with feedback, questions and comments.

Now, onto the article!

[News] Feb 01: DeepSeek Fails Over Half of Qualys TotalAI Jailbreak Tests

This is a summary of a security assessment conducted by Qualys, which found that DeepSeek AI failed more than half of the jailbreak tests performed using TotalAI, a new LLM security evaluation tool. The full disclosure from Qualys can be found here: https://blog.qualys.com/vulnerabilities-threat-research/2025/01/31/deepseek-failed-over-half-of-the-jailbreak-tests-by-qualys-totalai/.

TLDR:

Qualys researchers conducted a vulnerability assessment of DeepSeek AI using TotalAI, their proprietary security testing framework designed to assess the robustness of Large Language Models (LLMs) against adversarial prompting techniques. The assessment revealed that DeepSeek’s models failed over 50% of jailbreak tests, showing significant vulnerabilities in its alignment and moderation systems.

The TotalAI test suite deployed a combination of traditional prompt injection methods and multi-turn jailbreak strategies to bypass DeepSeek’s content moderation filters. Key takeaways from the exercise included:

The model failed 46% of direct jailbreak attempts, where researchers used commonly known adversarial prompts to circumvent safety mechanisms.

In cases where adversaries engaged the model in a step-by-step conversation designed to gradually weaken its safety constraints, DeepSeek failed 53% of the time.

The AI provided responses involving potentially dangerous code execution and exposed personally identifiable information (PII) in several test cases, suggesting inadequate safeguards against these risks.

Qualys noted that DeepSeek performed worse than other popular models in their security benchmarking tests, implying that its current safety guardrails are less effective than those of competitors like OpenAI’s GPT-4 and Anthropic’s Claude.
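The per-category figures above are simple proportions of failed tests. As a minimal sketch (the record format and category labels are hypothetical, not TotalAI's actual output), such rates could be tallied as below. Note that an overall rate is a test-count-weighted average of the category rates, so it depends on how many tests fall into each category.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class JailbreakResult:
    category: str  # hypothetical label, e.g. "direct" or "multi_turn"
    failed: bool   # True if the model produced the disallowed output


def failure_rates(results: Iterable[JailbreakResult]) -> Dict[str, float]:
    results = list(results)
    if not results:
        return {}
    totals = Counter(r.category for r in results)
    failures = Counter(r.category for r in results if r.failed)
    rates = {cat: failures[cat] / n for cat, n in totals.items()}
    # The overall rate weights each category by its number of tests.
    rates["overall"] = sum(failures.values()) / len(results)
    return rates
```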

Author’s Note: These findings add to the growing scrutiny surrounding DeepSeek following its recent data breach, which exposed over 1.2TB of internal system logs and user chat history. The combination of weak jailbreak resistance and poor operational security raises concerns about how highly DeepSeek prioritises cyber security maturity. As scrutiny of AI safety gradually tightens, models with high failure rates in jailbreak tests will struggle to gain trust from enterprises and regulators.

[Vulnerability] [CVE]: CVE-2025-21415: Authentication Bypass in Azure AI Face Service

This is a summary of CVE-2025-21415, a critical authentication bypass vulnerability in Microsoft's Azure AI Face Service. More details can be found at: NVD CVE-2025-21415.

TLDR:

An authentication bypass by spoofing vulnerability exists in the Azure AI Face Service, allowing an authorised attacker to elevate privileges over a network. This could lead to unauthorised access to, and control over, sensitive operations within the service.

Affected Resources:

Product: Microsoft Azure AI Face Service

Access Level: Exploitation requires an authenticated user.

Risk Rating:

Severity: Critical (CVSS Base Score: 9.9)

Impact: High on Confidentiality, Integrity, and Availability.

Exploitability: High (low attack complexity, requires low privileges, no user interaction).
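The ratings above map onto CVSS v3.1 base metrics. This digest does not state the vector, so the sketch below assumes CVSS:3.1/AV:N/AC:L/PR:L/UI:N/S:C/C:H/I:H/A:H, which is consistent with the listed ratings and the 9.9 score; the Scope: Changed metric in particular is our assumption. The weights and formulas follow the FIRST CVSS v3.1 specification.

```python
import math

# CVSS v3.1 metric weights (FIRST specification).
AV = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
AC = {"L": 0.77, "H": 0.44}
PR_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PR_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}
UI = {"N": 0.85, "R": 0.62}
CIA = {"H": 0.56, "L": 0.22, "N": 0.0}


def roundup(x: float) -> float:
    # Spec-defined Roundup: smallest one-decimal value >= x, guarding against float noise.
    i = round(x * 100000)
    if i % 10000 == 0:
        return i / 100000.0
    return (math.floor(i / 10000) + 1) / 10.0


def base_score(vector: str) -> float:
    # Parse "CVSS:3.1/AV:N/..." into {"AV": "N", ...}, skipping the version prefix.
    m = dict(part.split(":") for part in vector.split("/")[1:])
    changed = m["S"] == "C"
    iss = 1 - (1 - CIA[m["C"]]) * (1 - CIA[m["I"]]) * (1 - CIA[m["A"]])
    if changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss
    pr = (PR_CHANGED if changed else PR_UNCHANGED)[m["PR"]]
    exploitability = 8.22 * AV[m["AV"]] * AC[m["AC"]] * pr * UI[m["UI"]]
    if impact <= 0:
        return 0.0
    if changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))
```

Under this assumed vector, the Scope: Changed metric is what lifts a low-privilege flaw to 9.9; the same metrics with Scope: Unchanged would score 8.8.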

Recommendations:

Microsoft has addressed this vulnerability, and no customer action is required to resolve it. However, it is advisable to monitor official Microsoft communications for any updates or additional guidance.

[Vulnerability]: DeepSeek AI Database Hack Exposes 1.2TB of Internal Model Data

This is a summary of a security breach affecting DeepSeek AI, disclosed by security researchers at Wiz. The breach exposed 1.2TB of internal AI model data, including chat logs, API keys, proprietary training information, and system prompts. The full disclosure from Wiz can be found here: Wiz Research Report.

TLDR:

Security researchers at Wiz discovered that DeepSeek AI had an exposed ClickHouse database that was completely open and unauthenticated, allowing full access to internal data. The publicly accessible servers (oauth2callback.deepseek.com:9000 and dev.deepseek.com:9000) allowed attackers to run arbitrary SQL queries, providing unrestricted visibility into chat history, API keys, backend system details, and operational metadata.

Among the leaked data were over a million log entries, including:

User queries and assistant responses, exposing chat interactions,

System prompts and model fine-tuning logs,

Backend operational data, including internal DeepSeek API references and metadata logs,

Plaintext API keys and authentication secrets.

Wiz found that the exposure allowed for full database control, meaning an attacker could have potentially exfiltrated proprietary files directly from DeepSeek’s servers, depending on ClickHouse’s configuration.

Wiz responsibly disclosed the vulnerability to DeepSeek, which promptly secured the database. There is no confirmed evidence of prior exploitation before the disclosure.
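At its core, Wiz's finding is a database that answered queries without credentials. For teams checking their own deployments, here is a minimal self-audit sketch. It assumes ClickHouse's HTTP interface on its default port 8123, which accepts SQL in a `query` URL parameter; the report names port 9000, ClickHouse's native TCP protocol, which this sketch does not probe. The helper names are hypothetical, and it should only be run against hosts you own or are authorised to test.

```python
import urllib.parse
import urllib.request


def build_probe_url(host: str, port: int = 8123) -> str:
    # A harmless query: an open server simply returns "1".
    return f"http://{host}:{port}/?query={urllib.parse.quote('SELECT 1')}"


def looks_unauthenticated(status: int, body: str) -> bool:
    # A server requiring credentials rejects the anonymous query instead.
    return status == 200 and body.strip() == "1"


def probe(host: str, port: int = 8123, timeout: float = 3.0) -> bool:
    """Return True if the host answered an anonymous query (i.e. it is exposed)."""
    try:
        with urllib.request.urlopen(build_probe_url(host, port), timeout=timeout) as resp:
            return looks_unauthenticated(resp.status, resp.read().decode("utf-8", "replace"))
    except OSError:
        # HTTPError, URLError and timeouts are all OSError subclasses: treat as not open.
        return False
```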

Author’s Note: The DeepSeek breach is a reminder that the rapid expansion of AI startups into critical infrastructure providers has outpaced their security maturity. As Microsoft’s AI Red Team found in its recent report (discussed in our previous digest), most modern AI applications should be as concerned about traditional cyber security vulnerabilities as about AI-specific ones. Companies deploying or integrating third-party AI models must demand the same security standards expected of other software supply chain providers.

[News] [Safety] [Regulatory] Jan 20: Trump Revokes Biden's Executive Order on Addressing AI Risks

This is a summary of President Donald Trump's recent executive order rescinding former President Joe Biden's 2023 directives on artificial intelligence (AI) safety, announced on January 20, 2025.

TLDR:

On his first day in office, President Donald Trump revoked Executive Order 14110, signed by former President Joe Biden in 2023, which had established safety guidelines for the development and deployment of artificial intelligence (AI) technologies.

EO 14110 mandated that developers of advanced AI systems conduct rigorous safety assessments and share the results with the federal government prior to public release. It also directed federal agencies to develop standards to prevent algorithmic discrimination and protect civil rights, particularly for systems in critical areas such as national security and public health.

President Trump's rescission of these regulations reflects a policy shift towards reducing federal oversight in favour of accelerating AI innovation. This move has been welcomed by major technology companies, which had expressed concerns that stringent regulations could stifle technological advancement. Following the announcement, stocks of leading tech giants, including Nvidia and Google, experienced notable increases. Concurrently, President Trump announced a substantial private-sector investment in AI infrastructure. A new partnership, named Stargate, involving OpenAI, Oracle, and SoftBank, is set to invest up to $500 billion in building data centres and advancing AI capabilities in the United States.

Author’s Note: While the deregulation aims to accelerate innovation, it risks the US establishing a precedent where speed outweighs safety in AI deployment. Forgoing safety assessments and standards increases the risk of deploying AI systems without adequate safeguards.

[Research] [Industry] Jan 22: Insights from DLA Piper’s 2025 GDPR Fines and Data Breach Survey

This is a summary of insights from DLA Piper’s 2025 GDPR Fines and Data Breach Survey, with a focus on the evolving intersection of AI systems and GDPR enforcement in Europe. The full report is accessible at: DLA Piper’s 2025 GDPR Fines and Data Breach Survey.

TLDR:

The DLA Piper report details a growing focus by European regulators on ensuring AI systems comply with the privacy and data protection standards set forth by the GDPR. Key developments include significant enforcement actions against AI companies such as Clearview AI and X (formerly Twitter), alongside increased scrutiny of AI training data sources, transparency, and legal bases for data processing. For the first time, regulatory actions have targeted directors of AI companies personally, with the Dutch DPA investigating Clearview AI's leadership for violations.

How it Works:

Notable cases from the report include:

Clearview AI: The Dutch DPA imposed a €30.5 million fine for GDPR violations involving Clearview’s facial recognition database, which collected and processed biometric data without informing individuals or establishing a lawful basis. The DPA is now exploring whether Clearview’s directors can be held personally liable.

X (formerly Twitter): The Irish DPC initiated suspension proceedings against X, citing its failure to sufficiently explain its use of personal data for training its AI chatbot Grok. Complaints referenced that user data sharing was turned on by default, and sensitive data may have been processed without proper justification. X later added an opt-out setting but faced criticism for its reliance on "legitimate interest" as a legal basis for processing data.

Italian Garante and AI Chatbot: The Italian Garante fined an AI chatbot provider €15 million following a 2023 data breach and privacy violations, including failure to notify authorities promptly, a lack of transparency in its privacy policy, and the absence of a valid legal basis for processing personal data to train its AI models.

European regulators are testing and defining the boundaries of AI compliance, using the GDPR as an interim framework while AI-specific regulations are finalised. This trend is expected to intensify in 2025, particularly as organisations navigate the EU’s emerging digital governance laws under the Digital Decade initiative.

Implications:

The rise of GDPR enforcement around AI means organisations must begin to holistically embed data protection compliance into the design, training, and deployment of their AI systems, or else face fines. Leadership can no longer shield itself behind corporate structures, as regulators are increasingly exploring personal accountability for directors involved in GDPR violations.

Author’s Note: While regulators establish the GDPR as a de facto framework for AI governance, companies should anticipate stricter oversight, especially in areas involving sensitive personal data or high-risk AI applications.

By using the GDPR as a foundation for enforcing AI governance, the EU is setting a high bar for ethical and legal compliance. This approach contrasts sharply with the recent US moves towards a deregulated environment in the name of industry competitiveness.

[Vulnerability]: Data Poisoning and Jailbreak of Public LLM

This is a summary of an attack disclosed by AI vulnerability researcher Pliny (@elder_plinius on X), where he successfully seeded adversarial jailbreak phrases into online sources that were later incorporated into a publicly available Large Language Model (LLM). This may mark the first publicly exploited case of LLM data poisoning. Pliny’s disclosure can be found here: https://x.com/elder_plinius/status/1884716775754834107.

TLDR:

Pliny, an AI researcher well known for his creative jailbreaking techniques, successfully planted jailbreak phrases across the internet, which were later scraped into the training data of an LLM with search functionality. Once the model integrated these poisoned sources, simply using the seeded phrase in a prompt triggered a complete jailbreak, allowing the model to bypass safety restrictions entirely.

According to Pliny’s original thread, it took approximately six months for the poisoned data to propagate and affect a publicly available model, confirming that data poisoning is a viable attack vector with long-term consequences.

AI models with real-time search functionality are particularly vulnerable to supply chain attacks and other resource-based attacks. Models that dynamically pull from external sources can inherit security risks in real-time, making them highly susceptible to adversarial manipulation.
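One partial mitigation for search-enabled models is to screen retrieved content before it reaches the model's context. The sketch below is our own illustration, not a technique from Pliny's disclosure: it normalises text (Unicode NFKC, case-folding, whitespace collapsing) and drops any document containing a phrase from a hypothetical denylist of known triggers.

```python
import re
import unicodedata
from typing import Iterable, List


def normalise(text: str) -> str:
    # NFKC folds many look-alike forms (e.g. full-width letters); casefold removes case tricks.
    text = unicodedata.normalize("NFKC", text).casefold()
    return re.sub(r"\s+", " ", text)


def filter_retrieved(docs: Iterable[str], denylist: Iterable[str]) -> List[str]:
    """Keep only retrieved documents that contain none of the denylisted phrases."""
    patterns = [normalise(p) for p in denylist]
    return [doc for doc in docs if not any(p in normalise(doc) for p in patterns)]
```

A denylist only catches triggers that are already known, so it complements, rather than replaces, provenance checks on the sources a model is allowed to retrieve.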

Author’s Note: Pliny’s methodology reflects a critical but often overlooked supply chain risk in both AI and traditional cyber security. While much of the focus has been on hardening model outputs, attackers are shifting their strategies to long-term, upstream manipulation: poisoning training data to embed vulnerabilities before deployment.

[Research] [Industry] [Red-Teaming] Jan 30: Google's Insights on Adversarial Misuse of Generative AI

This is a summary of insights from Google DeepMind and Google's Threat Analysis Group (TAG) on the adversarial misuse of generative AI, released on January 30, 2025. The report examines real-world threats, emerging attack vectors, and the role of AI red-teaming in mitigating risks. The full report can be accessed at: https://services.google.com/fh/files/misc/adversarial-misuse-generative-ai.pdf

TLDR:

Google DeepMind and TAG present an analysis of adversarial misuse of generative AI, highlighting the techniques used by threat actors to manipulate AI systems for malicious purposes. The report categorises threats into areas such as disinformation, cyber security exploitation, and social engineering. It also explores how attackers are testing and refining jailbreak techniques to circumvent AI safety measures.

The findings of this paper demonstrate a growing concern: while many generative AI safety mechanisms focus on direct misuse (e.g., preventing harmful outputs), adversarial actors increasingly exploit system weaknesses indirectly—through input manipulation, data poisoning, and prompt engineering attacks. Google researchers advocate for a combination of robust AI red-teaming, adaptive security measures, and industry-wide cooperation to address these evolving threats.

How it Works:

The report breaks down adversarial misuse into three major categories:

Disinformation & Influence Operations:

Google has observed state-affiliated actors experimenting with generative AI to automate disinformation campaigns. While current AI-generated propaganda is often crude, rapid advancements in AI-generated text, images, and deepfake technology point towards a concerning prospect: highly scalable, automated influence operations.

The research outlines how AI can be used to generate large volumes of misleading content for social media manipulation, fake news generation, and targeted propaganda campaigns.

Cyber Security Exploitation:

Google’s research confirms that threat actors are actively testing AI models for their ability to assist in exploiting software vulnerabilities, generating phishing content, and crafting social engineering attacks.

While models have built-in safeguards against malicious requests, attackers have developed jailbreak methods and prompt injection strategies to bypass restrictions. These range from adversarial prompt chains to embedding malicious instructions in seemingly benign queries.

Cybercriminal forums and dark web discussions indicate a growing interest in using AI for automating cybercrime, including reconnaissance, payload generation, and evading detection mechanisms.

Social Engineering & Fraud:

Generative AI is increasingly being exploited to create highly convincing phishing messages, impersonation scams, and deepfake voice synthesis attacks.

The report notes that financially motivated attackers have begun integrating AI-generated content into fraud schemes, enabling scalable and personalised attacks that are harder to detect.

Google researchers emphasise that the risk is not just AI’s ability to generate deceptive content, but also its potential to lower the barrier for non-technical actors to execute sophisticated fraud operations.

Implications:

Google’s findings reinforce a growing reality in AI security: adversaries are not just attacking AI models directly but weaponising them to increase the efficacy of existing attack vectors. The report shows that as AI-generated content becomes more sophisticated, distinguishing between authentic and synthetic information will become increasingly difficult, impacting cybersecurity, social trust, and information integrity.

Takeaways from Google’s report include the critical need for continuous AI red-teaming—proactively stress-testing AI models to identify and mitigate vulnerabilities before adversaries can exploit them. However, securing AI models cannot be done in isolation. Cross-industry collaboration, threat intelligence sharing, and regulatory alignment will be essential in addressing AI’s misuse at scale, particularly as cybercriminals refine their tactics.

Author’s Note: The misuse of generative AI highlights an age-old challenge with any technological advancement: how, and how quickly, will bad actors learn to exploit it? In this case, rather than attacking AI systems directly, adversaries are increasingly leveraging them to automate, scale, and optimise existing cyber threats. This shift makes AI-powered security defences an urgent priority, as traditional mitigation strategies will be insufficient against AI-enabled adversaries.

[News] [Regulatory] Jan 31: UK Introduces AI Cyber Security Code of Practice to Establish Global Standards

This is a summary of the UK government's newly introduced AI Cyber Security Code of Practice, published on January 31, 2025. The Code aims to establish voluntary baseline security principles for AI systems, focusing on mitigating emerging threats such as data poisoning, model obfuscation, and indirect prompt injection. The full Code of Practice can be accessed at: https://www.gov.uk/government/publications/ai-cyber-security-code-of-practice/code-of-practice-for-the-cyber-security-of-ai

TLDR:

The UK government has introduced a voluntary Code of Practice to improve the cyber security of Artificial Intelligence systems. This initiative aims to establish baseline security principles to safeguard AI technologies and the organisations that develop and deploy them. The Code addresses unique AI-related risks, including data poisoning, model obfuscation, and indirect prompt injection, emphasising the need for AI systems to be secure by design. It outlines thirteen core principles:

Principle 1: Raise awareness of AI security threats and risks

Principle 2: Design your AI system for security as well as functionality and performance

Principle 3: Evaluate the threats and manage the risks to your AI system

Principle 4: Enable human responsibility for AI systems

Principle 5: Identify, track and protect your assets

Principle 6: Secure your infrastructure

Principle 7: Secure your supply chain

Principle 8: Document your data, models and prompts

Principle 9: Conduct appropriate testing and evaluation

Principle 10: Communication and processes associated with End-users and Affected Entities

Principle 11: Maintain regular security updates, patches and mitigations

Principle 12: Monitor your system’s behaviour

Principle 13: Ensure proper data and model disposal

This Code of Practice is part of the UK's broader strategy to position itself as a global leader in AI safety and innovation. The government plans to collaborate with the European Telecommunications Standards Institute (ETSI) to develop this voluntary code into a global standard, reinforcing the UK's commitment to secure AI development.

Author’s Note: In the past two weeks, nations worldwide have taken significant steps regarding AI development and the prioritisation of AI security risks. While the UK's proactive approach aligns with steps taken by ASEAN around AI safety, it greatly contrasts with recent actions in the United States, where President Donald Trump revoked former President Joe Biden's 2023 executive order that established safety guidelines for AI technologies, aiming to reduce federal oversight to accelerate AI innovation.

[News] [Safety] [Regulatory] Jan 17: ASEAN Expands AI Governance to Address Generative AI Risks and Deepfakes

This is a summary of ASEAN’s recent updates to its AI governance framework, announced during the 5th ASEAN Digital Ministers’ Meeting (ADGMIN) held on January 17th, 2025. Minutes from the meeting can be accessed at: The 5th ASEAN Digital Ministers’ Meeting and Related Meetings.

TLDR:

ASEAN has introduced the Expanded ASEAN Guide on AI Governance and Ethics – Generative AI, an updated version of the 2024 framework, now addressing the ethical and governance challenges of emerging AI technologies, including generative AI and deepfakes.

The framework incorporates policy recommendations to balance innovation with responsible AI development. It includes specific measures for mitigating risks associated with generative AI misuse, such as guidelines for countering disinformation, addressing deepfake proliferation, and providing equitable access to AI technologies. It also outlines ethical considerations for data privacy, algorithmic transparency, and bias mitigation.

The establishment of the Working Group on AI Governance (WG-AI) was also announced to coordinate AI-related initiatives across ASEAN. The WG-AI will work alongside the ASEAN Digital Senior Officials Meeting (ADGSOM) to provide strategic guidance on AI governance and promote ethical AI use across critical sectors.

While the guide and working group are a proactive step towards addressing AI risks, their success will depend on effective implementation and collaboration among member states. Challenges such as inconsistent enforcement may limit the guide’s effectiveness in practice.

Author’s Note: In notable contrast, while the US has chosen to remove regulations to accelerate AI innovation, ASEAN is positioning itself as a responsible leader in ethical and safe AI development. Their divergent approaches will provide an interesting comparison of outcomes: whether the pursuit of speed or the commitment to safety will foster more sustainable, and societally productive, advancements in artificial intelligence.

[Research] [Academia] [Red-Teaming] Jan 19: Fooling LLMs with Happy Endings

This is a summary of research by Xurui Song et al., titled "Dagger Behind Smile: Fool LLMs with a Happy Ending Story," released on January 19, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.13115.

TLDR:

Song and colleagues introduce the "Happy Ending Attack" (HEA), a novel jailbreak technique that exploits Large Language Models' (LLMs) tendency to respond more favourably to positive prompts. By embedding malicious requests within scenarios that conclude positively, HEA effectively bypasses LLMs' safety mechanisms, achieving an average attack success rate of 88.79% across models like GPT-4, Llama3-70b, and Gemini-pro.

How it Works:

Traditional jailbreak attacks typically rely on optimisation-based techniques or complex prompt engineering to bypass LLM safety protocols. These methods often require advanced expertise, lack efficiency when implemented manually, and struggle with transferability across different models.

HEA adopts a more nuanced approach by leveraging the predisposition of LLMs to favourably respond to prompts with positive or beneficial framing. The attack works by embedding a malicious request within a narrative that concludes with a "happy ending," manipulating the LLM to focus on the positive outcome while inadvertently complying with the harmful or restricted request.

For example, a prompt might frame a scenario where a character is forced to reveal sensitive or harmful information but does so as part of a ploy to achieve a beneficial resolution, such as escaping a dangerous situation. The LLM, emphasising the positive conclusion, bypasses its safety protocols and generates the restricted information embedded in the narrative.

This attack method is highly efficient, often requiring only one or two iterations to successfully exploit the LLM's susceptibility. Experiments conducted by the researchers demonstrated HEA's robust performance across multiple state-of-the-art LLMs.

Implications:

HEA exposes a fundamental vulnerability in LLMs, rooted in their design to prioritise helpfulness and positive user interactions. LLMs predisposed to provide beneficial or emotionally resonant outputs are particularly susceptible to attacks that leverage emotive language and favourable framing.

This research points to the need for more sophisticated safety mechanisms capable of discerning malicious intent embedded within seemingly innocuous narratives. Without improvements in contextual understanding and nuance, LLMs remain at risk of adversarial exploitation through subtle prompt engineering techniques like HEA.

Limitations:

The success of HEA is dependent on the specific LLM’s architecture and the robustness of its safety mechanisms, or additional security measures layered on top. The study focuses exclusively on text-based prompts, leaving its efficacy in multimodal LLMs unexplored.

Author’s Note: As LLMs are designed to be inherently helpful and accommodating, balancing their susceptibility to positive framing with maintaining their functionality remains a complex challenge. HEA highlights the inherent tension between usability and security, reminding us that adversaries will exploit even the smallest design biases.

[Research] [Academia] [Safety] Jan 21: OneShield - PII Detection in LLM Inputs and Outputs

This is a summary of research by Shubhi Asthana et al., titled "Deploying Privacy Guardrails for LLMs: A Comparative Analysis of Real-World Applications," released on January 21, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.12456.

TLDR:

Asthana and colleagues present "OneShield Privacy Guard," a framework designed to mitigate privacy risks in user inputs and outputs of Large Language Models (LLMs). Through two real-world deployments, OneShield demonstrates high efficacy in detecting sensitive information across multiple languages and reducing manual privacy risk assessments.

How it Works:

LLMs process vast amounts of unstructured data, often containing Personally Identifiable Information (PII) such as names, phone numbers, and credit card details. LLMs can unintentionally retain sensitive data and later reproduce it in their outputs, creating vulnerabilities that must be addressed through privacy-preserving mechanisms. Additionally, processing globally sourced data may breach region-specific privacy regulations, for example, the General Data Protection Regulation (GDPR) and the Personal Information Protection and Electronic Documents Act (PIPEDA).

OneShield differentiates itself by employing context-aware PII detection, which improves its ability to identify sensitive information within varied and complex data inputs. Unlike traditional rule-based methods, context-aware detection utilises algorithms to understand the context in which data appears, allowing for more accurate identification of PII.

The system architecture of OneShield comprises three core components:

Guardrail Solution: Monitors both input prompts and output responses to detect sensitive PII entities across multiple languages.

Detector Analysis Module: Incorporates detection mechanisms for various PII types, along with additional capabilities like hate speech, abuse, and profanity content detection.

Privacy Policy Manager: Provides policy templates tailored to jurisdictional regulations such as GDPR and the California Consumer Privacy Act (CCPA). It dynamically applies actions like masking, blocking, or passing input and output data based on detected entities, for compliance with relevant laws.
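To make the Privacy Policy Manager's role concrete, here is a minimal sketch of jurisdiction-based masking, blocking, and passing. It is not OneShield's implementation: the regex detectors are a deliberately simple rule-based stand-in for its context-aware models, and the policy tables, entity names, and actions are hypothetical.

```python
import re
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional


class Action(Enum):
    PASS = "pass"
    MASK = "mask"
    BLOCK = "block"


@dataclass(frozen=True)
class Entity:
    kind: str
    start: int
    end: int


# Hypothetical rule-based detectors, standing in for context-aware detection.
DETECTORS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # 13-16 digits, optionally separated by single spaces or dashes.
    "CREDIT_CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}

# Hypothetical policy templates: entity type -> action, per jurisdiction.
POLICIES: Dict[str, Dict[str, Action]] = {
    "GDPR": {"EMAIL": Action.MASK, "CREDIT_CARD": Action.BLOCK},
    "CCPA": {"EMAIL": Action.PASS, "CREDIT_CARD": Action.MASK},
}


def detect(text: str) -> List[Entity]:
    found = [
        Entity(kind, m.start(), m.end())
        for kind, pattern in DETECTORS.items()
        for m in pattern.finditer(text)
    ]
    return sorted(found, key=lambda e: e.start)


def apply_policy(text: str, jurisdiction: str) -> Optional[str]:
    """Return the text with entities masked, or None if any entity triggers a block."""
    policy = POLICIES[jurisdiction]
    entities = detect(text)
    if any(policy.get(e.kind, Action.PASS) is Action.BLOCK for e in entities):
        return None
    # Replace right-to-left so earlier offsets stay valid (assumes non-overlapping matches).
    for e in reversed(entities):
        if policy.get(e.kind, Action.PASS) is Action.MASK:
            text = text[: e.start] + f"[{e.kind}]" + text[e.end :]
    return text
```

The same check can run on both the user's prompt and the model's response, which mirrors the input and output monitoring described for the Guardrail Solution.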

Implications:

In two deployment scenarios, one within a multinational corporation's customer service platform and another in a healthcare organisation's patient data management system, OneShield effectively identified and managed PII across multiple languages and data types. The result was a significant reduction in manual interventions for false positives, along with improved compliance with regional privacy regulations. The framework's context-aware detection capabilities offer a promising advancement in protecting sensitive information processed by LLMs.

Limitations:

While OneShield demonstrates high efficacy, its ability to detect sensitive information may vary across different domains and data structures. Detection models trained on structured enterprise data, for example, may not generalise well to conversational data in open-ended AI systems. Additionally, integrating OneShield into existing AI pipelines may require substantial initial effort and fine-tuning to align with company-specific privacy policies and compliance needs. Future advancements should focus on expanding privacy safeguards to handle multimodal data, including images, audio, and video, to address risks in cross-modal AI systems such as vision-language models.

Author’s Note: LLMs that prioritise user data security will be favoured by the public. Events such as DeepSeek's immediate jailbreaking and data breach, where a publicly accessible database exposed sensitive user data and chat histories, should act as a reminder to the industry that privacy and data security are tangible business goals, not merely regulatory obligations. When developing generative AI and gathering training data, information security must be considered from the start as part of the SecDevOps process, for both compliance and user trust.

[Research] [Academia] [Red-Teaming] Jan 24: Siren - LLMs Attacking LLMs

This is a summary of research by Zhao and Zhang, titled "Siren: A Learning-Based Multi-Turn Attack Framework for Simulating Real-World Human Jailbreak Behaviors," released on January 24, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.14250.

TLDR:

Zhao and Zhang introduce "Siren," a novel framework designed to simulate realistic, multi-turn jailbreak attacks on Large Language Models (LLMs). Unlike traditional single-turn attacks, Siren employs a learning-based approach to mimic the dynamic strategies used by human adversaries, exposing vulnerabilities in LLMs' safety mechanisms over extended interactions.

How it Works:

In the context of LLMs, a single-turn attack involves crafting a single input or prompt to bypass safety measures, generating harmful or unintended responses. In contrast, a multi-turn attack involves a series of iterative prompts, where the adversary engages in a conversation, gradually steering the LLM toward unsafe outputs. These multi-turn attacks mimic real-world scenarios where adversaries adapt their strategies over time. Existing multi-turn attack methods rely on static patterns or predefined logical chains, lacking the adaptability of human attackers.
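The structural difference between the two attack styles is that a multi-turn attacker conditions each new query on the conversation so far. A minimal red-teaming harness sketch follows; the attacker, target, and judge are hypothetical stand-in callables (in Siren, the attacker would be the fine-tuned LLM and the judge some harmfulness classifier).

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (attacker query, target response)


@dataclass
class Episode:
    turns: List[Turn] = field(default_factory=list)
    succeeded: bool = False


def run_multi_turn(
    attacker: Callable[[List[Turn]], str],
    target: Callable[[List[Turn], str], str],
    judge: Callable[[str], bool],
    max_turns: int = 5,
) -> Episode:
    episode = Episode()
    for _ in range(max_turns):
        query = attacker(episode.turns)          # next query conditioned on history
        response = target(episode.turns, query)  # target sees the full conversation
        episode.turns.append((query, response))
        if judge(response):                      # stop once an unsafe output is flagged
            episode.succeeded = True
            break
    return episode
```

A single-turn attack is the special case `max_turns=1`, where the attacker never sees a response before committing to its prompt.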

Siren addresses these limitations through a three-stage process:

Training Set Construction with Turn-Level LLM Feedback (Turn-MF):

Siren begins by generating a diverse set of adversarial queries. It then uses feedback from the target LLM at each turn to refine these queries, ensuring they effectively challenge the LLM's safety mechanisms.

Post-Training Attackers with Supervised Fine-Tuning (SFT) and Direct Preference Optimization (DPO):

The framework fine-tunes the attacking LLM with SFT and DPO, the latter incorporating preferences derived from the target LLM's responses. This step improves the attacking LLM’s ability to adapt its strategies dynamically during multi-turn interactions.

Interactions Between the Attacking and Target LLMs:

In the final stage, the trained attacking LLM engages in simulated conversations with the target LLM, employing its learned strategies to identify and exploit vulnerabilities over multiple turns.
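The DPO step can be illustrated with the standard per-pair DPO loss, shown here as a minimal sketch. It assumes you already have summed log-probabilities for a preferred ("chosen") and a dispreferred ("rejected") response, under both the model being trained and a frozen reference model. The sketch does not reproduce how Siren constructs those preference pairs. `beta = 0.1` is an illustrative default, not a value from the paper.

```python
import math


def dpo_loss(policy_chosen: float, policy_rejected: float,
             ref_chosen: float, ref_rejected: float, beta: float = 0.1) -> float:
    """Direct Preference Optimization loss for one (chosen, rejected) pair.

    Inputs are summed log-probabilities of each response under the policy
    being trained and under a frozen reference model.
    """
    chosen_margin = policy_chosen - ref_chosen        # implicit reward of preferred response
    rejected_margin = policy_rejected - ref_rejected  # implicit reward of dispreferred response
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)), written in a numerically stable form
    if logits >= 0:
        return math.log1p(math.exp(-logits))
    return -logits + math.log1p(math.exp(logits))
```

When the policy and reference log-probabilities are identical, the loss is log 2. It falls as the policy raises the chosen response's likelihood relative to the reference. That is the pressure that nudges the trained model toward the preferred behaviour.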

Implications:

Siren represents a notable advance in automating security testing for LLMs, as it closely mimics the adaptability of real-world adversaries. The framework could scale vulnerability testing, making it feasible to evaluate AI systems far more broadly than traditional manual testing allows. The same technique, however, could be co-opted by adversaries to refine their attacks on LLMs more effectively.

Limitations:

While Siren demonstrates high attack success rates, its effectiveness is contingent on the quality and diversity of the training data used during fine-tuning. Additionally, the framework's reliance on feedback from the target LLM may limit its applicability against models that employ advanced evasion or detection strategies. Further research is necessary to evaluate Siren's performance across a broader range of LLM architectures and to develop countermeasures against such adaptive attack frameworks.

Author’s Note: At Mileva, we have observed a consistent pattern of adversarial actors replicating academic attacks shortly after their release. With Siren, we expect a similar trend, as its methodology offers a clear blueprint for attackers. AI security teams need to stay ahead by understanding emerging techniques like Siren and proactively implementing mitigations to protect their systems.

[News] Jan 30: Gmail Users Targeted in AI-Powered Social Engineering Attacks

This is a summary of a recent phishing attempt targeting Google account holders, as detailed by Zach Latta, founder of Hack Club. The attackers used Google's official g.co subdomain and impersonated Google Workspace support to deceive users into compromising their accounts. Latta's full account is available here: https://gist.github.com/zachlatta/f86317493654b550c689dc6509973aa4.

TLDR:

Security researcher and Hack Club founder Zach Latta received a call from an individual claiming to be "Chloe" from Google Workspace support, using the legitimate Google support number 650-203-0000. The caller informed him of a suspicious login attempt from Frankfurt, Germany, and offered to assist in securing his account. To verify authenticity, "Chloe" sent an email from the address `important@g.co`, an official Google subdomain, which passed all standard email authentication checks, including DKIM, SPF, and DMARC.
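SPF, DKIM and DMARC only confirm that a message was sent with the authority of the domain it claims. They do not confirm that the sender is trustworthy. The minimal sketch below uses Python's standard `email` module to read the verdicts a receiving server records in the `Authentication-Results` header. The sample headers are illustrative and are not copied from Latta's message.

```python
import re
from email import message_from_string


def auth_results(raw_message: str) -> dict:
    """Extract spf/dkim/dmarc verdicts from Authentication-Results headers."""
    msg = message_from_string(raw_message)
    verdicts = {}
    for header in msg.get_all("Authentication-Results", []):
        # Each verdict appears as method=result, e.g. "dkim=pass"
        for method, result in re.findall(r"\b(spf|dkim|dmarc)=(\w+)", header):
            verdicts.setdefault(method, result)  # keep the first verdict per method
    return verdicts


# Illustrative message: every check passes even though the content is a lure.
raw = (
    "Authentication-Results: mx.google.com;\n"
    "       dkim=pass header.i=@g.co;\n"
    "       spf=pass smtp.mailfrom=important@g.co;\n"
    "       dmarc=pass header.from=g.co\n"
    "From: important@g.co\n"
    "Subject: Account security notice\n"
    "\n"
    "Please confirm the code our agent reads to you.\n"
)
verdicts = auth_results(raw)
```

Every check passes, so a filter that relies only on these verdicts would have no reason to flag a message like this one.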

During the conversation, "Chloe" provided detailed instructions to navigate Google Workspace logs and suggested resetting sessions from Latta's devices. After the call dropped, another individual named "Solomon" called back, claiming to be a manager, and continued the deception. The attackers attempted to trick Latta into approving a sign-in request by sending a reset code and instructing him to confirm it, which would have granted them access to his account.

Eventually, Latta became suspicious due to inconsistencies in their statements and did not comply with their requests. He documented the experience in detail, including screenshots and a call recording, highlighting the advanced tactics used in this phishing attempt and warning the public to be cautious of similar approaches.

Author’s Note: AI-powered social engineering is the natural evolution of phishing. Even though attacks like these are expected, they remain concerningly effective in practice, especially when adversaries exploit legitimate platforms, communication channels, and security verification steps to establish credibility. As generative AI continues to blur the line between authentic and manipulated content, user vigilance alone will not be a sufficient defence. Modern email security will likely require AI-driven fingerprinting, or similar technologies, to enhance existing phishing and spam detection capabilities as adversaries leverage automation to scale and refine their attacks.

[Research] [Academia] [Cyber] Jan 29: VIRUS – Bypassing LLM Guardrails via Fine-Tuning Attacks

This is a summary of research by Zhang et al., titled "VIRUS: Harmful Fine-Tuning Attack for Large Language Models Bypassing Guardrail Moderation," released on January 29, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.17433.

TLDR:

Zhang and colleagues introduce "VIRUS," a fine-tuning attack method designed to systematically bypass moderation guardrails in Large Language Models (LLMs). Unlike traditional jailbreak attacks that rely on adversarial prompting, VIRUS directly modifies the model’s internal knowledge through malicious fine-tuning, making safety interventions significantly harder to detect and mitigate. The researchers demonstrate that once a model undergoes harmful fine-tuning, it can consistently ignore ethical constraints, even when deployed with reinforcement learning from human feedback (RLHF) or safety alignment mechanisms.

How it Works:

LLMs rely on moderation guardrails: safety constraints designed to prevent the model from generating harmful, unethical, or policy-violating content. These guardrails are typically enforced through reinforcement learning from human feedback (RLHF) and content moderation filters, which steer the model away from harmful outputs. However, these safety mechanisms can be bypassed when a model undergoes targeted fine-tuning.

VIRUS exploits this weakness by incrementally fine-tuning an LLM with harmful datasets, overriding safety constraints without requiring direct modification of the model’s architecture. The researchers outline a three-phase attack pipeline:

Harmful Dataset Construction: The attacker compiles a dataset containing adversarial examples designed to erode the model’s internal safety mechanisms. These examples are crafted to encourage the model to generate responses that would normally be blocked.

Fine-Tuning the Model: Using the constructed dataset, the attacker performs low-rank adaptation (LoRA) fine-tuning, a technique that allows small yet effective modifications to be applied to an LLM without requiring full retraining. This method makes VIRUS highly efficient and difficult to detect.
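A few lines of code show why LoRA is cheap. LoRA freezes a weight matrix W (d × k) and learns two small factors, B (d × r) and A (r × k). The effective weight becomes W + (alpha / r) · B A. The pure-Python sketch below shows the merge and the trainable-parameter count. Its helper names are hypothetical. The summary does not give the rank, scaling or target layers used in the VIRUS experiments. In standard LoRA, B is initialised to zero, so training starts from the unmodified model.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merge(w: Matrix, b: Matrix, a: Matrix, alpha: float, r: int) -> Matrix:
    """Return W + (alpha / r) * B @ A; the frozen base matrix W is not modified."""
    delta = matmul(b, a)  # low-rank update of shape d x k
    scale = alpha / r
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))] for i in range(len(w))]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """Parameters updated by full fine-tuning vs. by a rank-r LoRA adapter."""
    return d * k, r * (d + k)


# Tiny example: a rank-1 update to a 2 x 2 identity matrix.
merged = lora_merge([[1.0, 0.0], [0.0, 1.0]], [[1.0], [0.0]], [[0.0, 2.0]], alpha=1.0, r=1)
```

Take a single 4096 × 4096 projection with rank 8. `trainable_params(4096, 4096, 8)` returns `(16777216, 65536)`, so the adapter trains about 0.4% of that layer's parameters.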

Evaluating Guardrail Evasion: The modified model is tested against standard safety-aligned LLMs. VIRUS demonstrates a 97.3% success rate in bypassing OpenAI’s moderation filters, as well as a 91.6% success rate against Google’s and Anthropic’s LLM safety systems. The fine-tuned model consistently produces responses that directly violate ethical and safety policies.

Unlike traditional prompt injection methods, which rely on user-crafted inputs to trick the model into violating its constraints, VIRUS rewires the model’s internal weights, making the attack far more persistent and difficult to mitigate.

Implications:

The findings from this study demonstrate that once a model has been fine-tuned on harmful data, even extensive reinforcement learning and content moderation mechanisms struggle to reverse the changes. This risk is already materialising. Malicious actors have been reverse-engineering, fine-tuning, and repackaging open-source models under deceptive names, distributing them as "clean" versions while embedding backdoors that are difficult to detect. Attackers will likely adopt this fine-tuning technique to create dual-purpose models: LLMs that appear safe under casual inspection but generate harmful content when prompted in specific ways. They may also use it to craft LLMs designed for fraud, misinformation, automated cybercrime, and even the autonomous coordination of malicious activities.

Limitations:

While VIRUS effectively bypasses current safety mechanisms, its impact is somewhat constrained by a few technical factors. Fine-tuning an LLM requires significant computational resources, particularly for larger models, making it less accessible to low-resource attackers. However, with the increasing accessibility of cloud-based GPU services and decentralised computing networks, this barrier is rapidly decreasing. The effectiveness of VIRUS varies depending on the underlying model architecture and alignment strategy, meaning some LLMs may require additional adaptation before fine-tuning attacks can fully override their safety constraints. Additionally, while post-fine-tuning detection remains a major challenge, AI security researchers are actively working on watermarking, forensic analysis, and model fingerprinting techniques to identify models that have undergone adversarial fine-tuning.

VIRUS should highlight a shift in AI security concerns from "what will people do to users of my AI" to "what will people do with my AI". The rise of open-weight LLMs further amplifies these concerns, as attackers can freely modify and redistribute compromised versions under misleading names. This is already happening: manipulated models are being distributed on the dark web, and others are hosted publicly online. These are often disguised as safer or more advanced versions of mainstream models while secretly producing harmful outputs. Users relying on unofficial model copies or self-hosting open-source LLMs should be aware of these risks and verify the integrity of the models they deploy.
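One basic integrity check is to compare a downloaded weight file's SHA-256 digest with a checksum published by the original developer through a channel you trust. The sketch below uses only Python's standard library; the file name and contents are hypothetical. A matching hash only proves the file is the one the publisher released. It cannot reveal a model that was fine-tuned maliciously before publication.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a (possibly multi-gigabyte) file through SHA-256."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_file(path: Path, expected_sha256: str) -> bool:
    """Compare a downloaded weight file against a checksum from a trusted source."""
    return sha256_of(path) == expected_sha256.strip().lower()


with tempfile.TemporaryDirectory() as tmp:
    weights = Path(tmp) / "model.safetensors"  # hypothetical downloaded file
    weights.write_bytes(b"example weights")
    published = hashlib.sha256(b"example weights").hexdigest()  # stands in for the publisher's checksum
    ok = verify_model_file(weights, published)
    weights.write_bytes(b"example weights + backdoor")  # simulate tampering
    tampered_ok = verify_model_file(weights, published)
```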

For us, VIRUS also raises broader questions about the necessity and practicality of LLMs as we currently conceive them. Should LLMs be replaced with small language models (SLMs), more targeted models that do not require extensive training data spanning all aspects of society and information? Much of the focus in AI security has been on restricting the outputs of LLMs, but a different approach might be to limit their capabilities at the training level, reducing or refining the scope of training data to prevent certain risks from ever materialising. This shift in perspective could challenge the way we currently think about AI safety and responsible model development. (Credit to arjenlentz on LinkedIn for providing a thoughtful conversation on the topic.)

Archive

[Research] [Academia] [Cyber] Jan 21: Adaptive Cyber-Attack Detection in IIoT

This is a summary of research by Afrah Gueriani et al., titled "Adaptive Cyber-Attack Detection in IIoT Using Attention-Based LSTM-CNN Models," released on January 21, 2025. The paper is accessible at: https://arxiv.org/pdf/2501.13962.

TLDR:

Gueriani and colleagues propose an advanced Intrusion Detection System (IDS) tailored for Industrial Internet of Things (IIoT) environments. Their model integrates Long Short-Term Memory (LSTM) networks, Convolutional Neural Networks (CNN), and attention mechanisms to effectively detect and classify cyber attacks. Evaluated on the Edge-IIoTset dataset, the model achieved near-perfect accuracy in binary classification and 99.04% accuracy in multi-class classification, demonstrating its efficacy in identifying various attack types.

How it Works:

The Industrial Internet of Things (IIoT) connects industrial devices, enabling real-time data exchange and analysis. While this connectivity enhances operational efficiency, it also introduces vulnerabilities to sophisticated cyber-attacks. Traditional security measures are often insufficient in addressing the dynamic nature of IIoT networks.

To tackle these challenges, Gueriani and colleagues developed an IDS that combines:

Long Short-Term Memory (LSTM) Networks: A type of Recurrent Neural Network (RNN) adept at capturing temporal dependencies in sequential data, making them suitable for analysing time-series data generated by IIoT devices.

Convolutional Neural Networks (CNN): These are proficient in identifying spatial patterns within data, aiding in the detection of anomalies in network traffic and sensor readings.

Attention Mechanism: This component enhances the model's focus on relevant features within the data, improving its ability to discern subtle indicators of cyber threats.
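The summary does not give the paper's exact attention formulation, so the sketch below assumes simple dot-product attention pooling. Each time step's feature vector (for example, an LSTM or CNN output) is scored against a query vector. The scores are softmax-normalised, and the weighted sum becomes the pooled representation. In a trained model the query would be learned; here it is fixed for illustration.

```python
import math
from typing import List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden: Sequence[Sequence[float]],
                   query: Sequence[float]) -> Tuple[List[float], List[float]]:
    """Weight each time step's feature vector by its relevance to `query`.

    hidden: one feature vector per time step (e.g. LSTM/CNN outputs)
    query:  a context vector (learned in practice, fixed here)
    Returns (attention weights, pooled context vector).
    """
    scores = [sum(h_i * q_i for h_i, q_i in zip(h, query)) for h in hidden]
    weights = softmax(scores)
    dim = len(hidden[0])
    context = [sum(w * h[d] for w, h in zip(weights, hidden)) for d in range(dim)]
    return weights, context


# Three time steps of sensor/traffic features; the third contains a spike.
weights, context = attention_pool([[0.0, 0.0], [0.0, 0.0], [5.0, 0.0]], query=[1.0, 0.0])
```

The spike sits at the third time step, and almost all of the attention weight lands there. This is how an attention layer can emphasise the few readings that signal an attack.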

The model was trained and evaluated using the Edge-IIoTset dataset, which covers a variety of IIoT scenarios and associated cyber-attacks. To address class imbalance—a common issue where certain attack types are underrepresented—the Synthetic Minority Over-sampling Technique (SMOTE) was employed. SMOTE generates synthetic examples for minority classes, ensuring the model learns effectively across all attack types.
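SMOTE's core step is an interpolation. Pick a minority-class sample x and one of its k nearest minority-class neighbours x_nn. Then create x + λ(x_nn - x), with λ drawn uniformly from [0, 1]. The pure-Python sketch below illustrates that step only. Production code would normally use a library implementation such as imbalanced-learn's `SMOTE`. The summary does not give the paper's settings, such as k.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def smote(minority: Sequence[Sequence[float]], n_new: int, k: int = 5,
          seed: int = 0) -> List[Vector]:
    """Generate n_new synthetic minority samples by interpolating between neighbours."""
    rng = random.Random(seed)
    samples = [list(x) for x in minority]
    k = min(k, len(samples) - 1)
    if k < 1:
        raise ValueError("SMOTE needs at least two minority samples")
    synthetic: List[Vector] = []
    for _ in range(n_new):
        x = rng.choice(samples)
        # k nearest minority neighbours of x (excluding x itself), by Euclidean distance
        neighbours = sorted((s for s in samples if s is not x),
                            key=lambda s: math.dist(x, s))[:k]
        nn = rng.choice(neighbours)
        lam = rng.random()  # interpolation factor in [0, 1)
        synthetic.append([xi + lam * (ni - xi) for xi, ni in zip(x, nn)])
    return synthetic


new_points = smote([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]], n_new=10, k=2)
```

Synthetic samples should be generated from the training split only. Oversampling before the train/test split lets near-copies of test points leak into training and inflates reported accuracy.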

Implications:

The integration of LSTM, CNN, and attention mechanisms results in a hybrid model that leverages the strengths of each component. This architecture enables the IDS to capture both temporal and spatial features of IIoT data, leading to improved detection and classification of cyber-attacks. Applying SMOTE reduces the model's bias toward majority classes, helping it maintain high performance on underrepresented attack types.